Prerequisites for NFVIS Clustering Capability for Cisco CSP

Ensure that you configure NTP, so that the system time on all nodes of the cluster is synchronized.


| Feature Name | Release Information | Description |
|---|---|---|
| NFVIS Clustering Capability for Cisco CSP | Cisco NFVIS 4.8.1 | This feature enables you to combine three nodes into a single cluster definition so that all the member nodes display configuration information about all the virtual machines deployed in the cluster. Each member node contributes a user-specified disk size (the same value for all members) from its total disk size to form a shared, clustered file system. The data is replicated across all the cluster members. This clustering capability is resilient against single node failures. |


To create a cluster for storage virtualization, you can use only three Cisco CSP devices with the same configuration and the same port channels or physical network interface cards (pNICs). Configure an IP address on the new bridge. This IP address must match one of the three IP addresses used for creating the cluster. We recommend that you configure the 1x10 GigE NIC for data traffic.

When a cluster is created, the Maximum Transmission Unit (MTU) on the cluster bridge is automatically set to 9000. Ensure that the switch that connects to the pNIC supports an MTU of 9000.
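One way to confirm that the path between two cluster bridges really carries 9000-byte frames is to send a ping that is not allowed to fragment. The sketch below only does the arithmetic and builds the command string. It assumes IPv4 with no header options and a Linux host with the iputils `ping` (the `-M do` and `-s` flags) attached to the same network; it is not an NFVIS CLI command. The peer address and the function names are hypothetical.

```python
IPV4_HEADER_BYTES = 20  # minimum IPv4 header, assuming no options
ICMP_HEADER_BYTES = 8   # ICMP echo request header


def icmp_payload_for_mtu(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    payload = mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES
    if payload < 0:
        raise ValueError(f"MTU {mtu} is too small for an IPv4 ICMP echo")
    return payload


def ping_command(peer_ip: str, mtu: int = 9000) -> str:
    """Build a Linux iputils ping command that forbids fragmentation."""
    return f"ping -M do -c 3 -s {icmp_payload_for_mtu(mtu)} {peer_ip}"


# Hypothetical peer bridge address on the cluster network.
print(ping_command("198.51.100.2"))
```

If the switch does not pass jumbo frames, a ping built this way is expected to fail while a default-size ping still succeeds.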

A cluster cannot be created on NFVIS hosts that have virtual machines deployed on them. If a system has virtual machine deployments, export and delete the virtual machines before creating the cluster.

The backup from an old host cannot be restored once the cluster is created, and the backup for a new host cannot be created after a cluster is created.

When a cluster is created, all the nodes in the cluster share their storage with each other. Other NFVIS configurations such as additional bridges, OVS networks, users, groups, TACACS/RADIUS, and SNMP are not synchronized and must be created on each cluster node, separately.

This feature does not support storage migration for virtual machines. Cold virtual machine migration between datastores (intdatastore, extdatastore1/2, and shared datastore) is not supported.

You cannot add, delete, or replace a node in an existing cluster. If you require a new NFVIS host, for example because of faulty hardware, delete the existing cluster and create a new cluster with the new host.

When a device in the network is down, the virtual machines running on the device also go down and become inaccessible. Before Cisco NFVIS 4.8.1, there were two ways to recover the virtual machines:

Recover the virtual machine from a backup. The drawback of this method is that the backup could be outdated, depending on when it was taken.

Deploy the virtual machine in active-standby mode, so that the standby takes over when the active virtual machine goes down. The drawback of this method is that it requires two separate licenses, one for the active virtual machine and one for the standby virtual machine.

Starting from Cisco NFVIS 4.8.1, the NFVIS clustering capability is introduced. This feature allows you to form a cluster of three nodes. The disks of all virtual machines deployed on the cluster are replicated on all the cluster nodes. This enables you to perform a cold migration of a virtual machine to a different node in the cluster when the node that hosts the virtual machine goes down.
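The single-node resilience of a three-node cluster can be illustrated with majority-quorum arithmetic. This is a sketch under an assumption: the text does not state how NFVIS decides cluster health, but etcd, which appears in the support commands and cluster-state details in this document, needs a majority of its members to be available. The function names are hypothetical.

```python
def quorum_size(members: int) -> int:
    """Smallest majority of a cluster with the given member count."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum_size(members)


# Print member count, quorum size, and tolerated failures for small clusters.
for n in (1, 2, 3):
    print(n, quorum_size(n), tolerated_failures(n))
```

Under this assumption, three members need two to agree, so one failure is tolerated and a second is not.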

Use a dedicated pNIC for cluster data traffic between the nodes, and attach this pNIC port to a newly created bridge so that the cluster traffic can flow. Configure an IP address on the new bridge. This IP address must be one of the three IP addresses used for creating the cluster.
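The addressing rule above can be checked before any node is configured. The following sketch validates a planned set of addresses: exactly three node addresses, and the local bridge address is one of them. The requirement that the three addresses be distinct is an assumption, the function name is hypothetical, and the sample addresses are placeholders.

```python
import ipaddress


def validate_cluster_addresses(node_ips, bridge_ip):
    """Return a list of problems; an empty list means the plan looks consistent."""
    problems = []
    parsed = []
    for ip in node_ips:
        try:
            parsed.append(ipaddress.IPv4Address(ip))
        except ValueError:
            problems.append(f"{ip!r} is not a valid IPv4 address")
    if len(node_ips) != 3:
        problems.append(f"expected exactly 3 node addresses, got {len(node_ips)}")
    if len(set(parsed)) != len(parsed):
        # Assumption: each node uses its own address.
        problems.append("node addresses must be distinct")
    try:
        if ipaddress.IPv4Address(bridge_ip) not in parsed:
            problems.append(f"bridge address {bridge_ip} is not one of the node addresses")
    except ValueError:
        problems.append(f"{bridge_ip!r} is not a valid IPv4 address")
    return problems


nodes = ["198.51.100.1", "198.51.100.2", "198.51.100.3"]
print(validate_cluster_addresses(nodes, "198.51.100.1"))
print(validate_cluster_addresses(nodes, "198.51.100.9"))
```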

We recommend that you configure the 1×10 GigE NIC for data traffic.

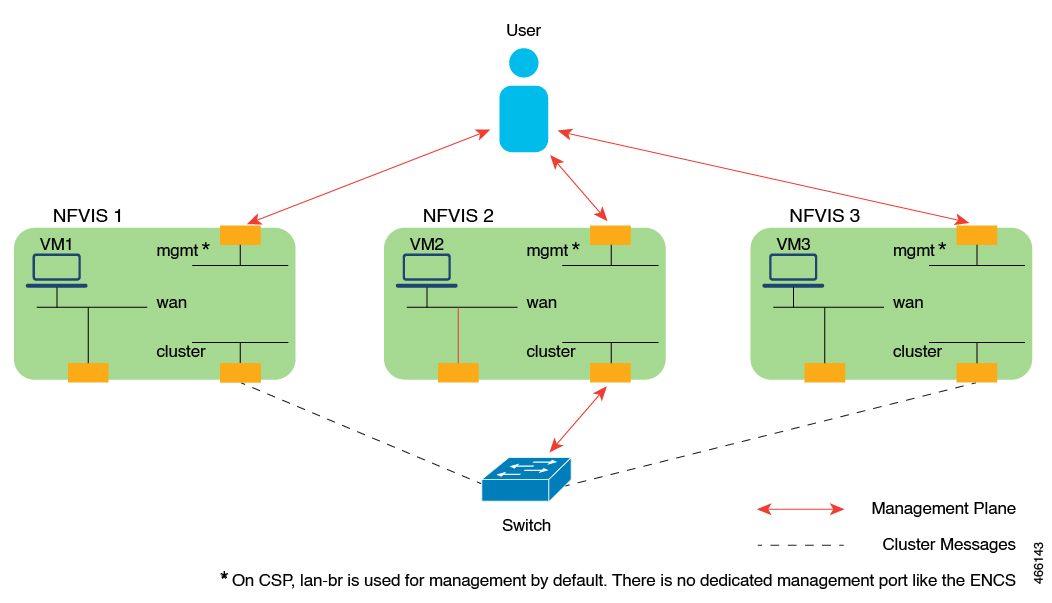

The following diagram illustrates a dedicated pNIC that enables the flow of cluster data traffic between the nodes. The dedicated pNIC port is attached to a newly created bridge for the cluster traffic to flow.

Before creating the cluster, complete the following steps on all the participating nodes:

nfvis# config terminal

Entering configuration mode terminal

nfvis(config)# system time ntp preferred_server YOUR-NTPD-IP

nfvis(config)# end

Uncommitted changes found, commit them? [yes/no/CANCEL] yes

Commit complete.

nfvis#

nfvis# config t

Entering configuration mode terminal

nfvis(config)# bridges bridge cluster-br

nfvis(config-bridge-cluster-br)# ip address 198.51.100.1 255.255.255.0

nfvis(config-bridge-cluster-br)# port eth1-1

nfvis(config-port-eth1-1)# end

Uncommitted changes found, commit them? [yes/no/CANCEL] yes

Commit complete.

nfvis#

Note: Ensure that the chosen pNIC and IP address can communicate with each other.

To create a cluster:

Send the create cluster API call to all the Cisco NFVIS nodes that must be added to a cluster for storage virtualization.

After receiving the call, each server validates whether sufficient disk space is allocated for storage clustering. The server also ensures that the space utilization of the datastore is less than 80%. After the disk size validation, a reply is sent indicating that the server has received the API call. When the cluster formation is successful, a notification is pushed from the leader node to indicate that storage virtualization is complete.
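The disk validation described above can be approximated offline when planning the cluster size. This is a sketch of one plausible reading, not the check NFVIS performs: it assumes that utilization is measured as used space divided by total space before the contribution, and that "sufficient" means the requested size fits in the free space. The function name and the sample figures are hypothetical.

```python
def disk_precheck(total, used, requested, max_utilization=0.80):
    """Return (ok, reasons) for contributing `requested` units of a datastore.

    All sizes must use the same unit. The 80% threshold comes from the text;
    how it is applied here is an assumption.
    """
    if total <= 0:
        raise ValueError("total datastore size must be positive")
    reasons = []
    utilization = used / total
    if utilization >= max_utilization:
        reasons.append(f"utilization {utilization:.0%} is not below {max_utilization:.0%}")
    if requested > total - used:
        reasons.append(f"requested {requested} exceeds free space {total - used}")
    return (not reasons, reasons)


print(disk_precheck(total=100, used=50, requested=10))
print(disk_precheck(total=100, used=85, requested=10))
```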

nfvis# config terminal

Entering configuration mode terminal

nfvis(config)# cluster cluster1 datastore intdatastore size 10

nfvis(config-cluster-1)# node 209.165.201.1 address-type ipv4

nfvis(config-node-209.165.201.1)# exit

nfvis(config-cluster-2)# node 209.165.201.2 address-type ipv4

nfvis(config-node-209.165.201.2)# exit

nfvis(config-cluster-3)# node 209.165.201.3 address-type ipv4

nfvis(config-node-209.165.201.3)# commit

The cluster distributed file system is created, and it serves as a storage location for virtual machine registration and deployment. For information on verifying cluster creation, see Verify Storage Virtualization.
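Because the same cluster definition has to be sent to every node, it can help to generate the command sequence once and reuse it. The sketch below only builds the list of CLI lines in the order shown in the transcript above. It does not connect to a device, and the function name is hypothetical.

```python
def cluster_create_commands(name, datastore, size, nodes):
    """Build the CLI lines for a three-node cluster definition."""
    if len(nodes) != 3:
        raise ValueError("a cluster is defined with exactly three nodes")
    commands = [
        "config terminal",
        f"cluster {name} datastore {datastore} size {size}",
    ]
    for index, ip in enumerate(nodes):
        commands.append(f"node {ip} address-type ipv4")
        # Leave the node submode before adding the next node.
        if index < len(nodes) - 1:
            commands.append("exit")
    commands.append("commit")
    return commands


for line in cluster_create_commands(
    "cluster1", "intdatastore", 10,
    ["209.165.201.1", "209.165.201.2", "209.165.201.3"],
):
    print(line)
```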

To register and deploy VMs on the cluster, specify gluster as the placement option. The VM’s disk details are replicated across all the other nodes on the cluster.

The following information is available to all the nodes and can be used while migrating the VM:

The running configuration of image registration

Flavor

Deployment

The following parameters should be unique across the cluster (for both cluster VMs and local VMs):

Flavor name

Deployment names

SRIOV networks

int-mgmt IPs

Port numbers used for port forwarding

If any of the preceding parameters is not unique during VM registration or deployment, the deployment is rejected.
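The uniqueness rule can be checked against an inventory before a deployment is attempted. The following sketch takes a hypothetical inventory, a mapping from node address to the names and values defined on that node, and reports every value that appears more than once across the cluster. The field names and the data layout are assumptions made for illustration; they are not an NFVIS data model.

```python
# Hypothetical field names for the parameters listed above.
UNIQUE_FIELDS = ("flavor", "deployment", "sriov_network", "int_mgmt_ip", "forwarded_port")


def find_duplicates(definitions_by_node):
    """Return (field, value, first_node, other_node) for each repeated value."""
    seen = {}  # (field, value) -> node where it was first found
    duplicates = []
    for node, definitions in definitions_by_node.items():
        for field in UNIQUE_FIELDS:
            for value in definitions.get(field, ()):
                key = (field, value)
                if key in seen:
                    duplicates.append((field, value, seen[key], node))
                else:
                    seen[key] = node
    return duplicates


inventory = {
    "209.165.201.1": {"deployment": ["centosvm"], "forwarded_port": [20022]},
    "209.165.201.2": {"deployment": ["centosvm"], "forwarded_port": [20023]},
}
print(find_duplicates(inventory))
```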

nfvis(config)# vm_lifecycle images image centos src file:///data/intdatastore/uploads/Centos_7.tar.gz properties property placement value gluster

nfvis(config-property-placement)# commit

Commit complete.

nfvis(config)# vm_lifecycle tenants tenant admin deployments deployment centosvm vm_group dep1 image centos flavor centos-small bootup_time -1 vim_vm_name centosvm placement zone_host host gluster

nfvis(config-placement-zone_host)# commit

Commit complete.

Virtual machines deployed on the cluster can be migrated to a different node on the cluster, either after they are shut down, or while the node on which they were deployed is down. Specify the source and destination nodes in the migration API call. You can choose to migrate all virtual machines from a source node or specify a list of virtual machines to migrate.

The virtual machine migration API call can be sent to any of the working nodes. If the source host is up during migration, stop the virtual machine first.

Because the virtual machine disk is replicated on the destination node, the virtual machine comes up in the Day-N state. After the migration, the virtual machine is deleted from the source node, if that node is active, and created on the destination node. If the source node is down during the migration, the clean-up on the source node happens when the node comes back up.

nfvis# cluster cluster1 migrate-deployment source-node 209.165.201.1 destination-node 209.165.201.2 all-deployments

nfvis# cluster cluster1 migrate-deployment source-node 209.165.201.1 destination-node 209.165.201.2 deployment-list [ centosVM4 centosVM5 ]

For information about verifying virtual machine migration, see Verify Storage Virtualization.
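The two forms of the migration command differ only in their last argument, which makes them easy to generate. The sketch below builds the command string for either form, following the syntax of the examples above. The function name and the deployment names in the sample call are hypothetical, and the check that the source and destination differ is an assumption.

```python
def migrate_command(cluster, source, destination, deployments=None):
    """Build a migrate-deployment command for all or selected deployments."""
    if source == destination:
        # Assumption: migrating a deployment to its own node is never intended.
        raise ValueError("source and destination nodes must differ")
    base = (
        f"cluster {cluster} migrate-deployment "
        f"source-node {source} destination-node {destination}"
    )
    if not deployments:
        return f"{base} all-deployments"
    return f"{base} deployment-list [ {' '.join(deployments)} ]"


print(migrate_command("cluster1", "209.165.201.1", "209.165.201.2"))
print(migrate_command("cluster1", "209.165.201.1", "209.165.201.2", ["vm-a", "vm-b"]))
```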

Virtual machines can be exported to any local or external datastore or NFS mount path, and can be imported from the /mnt/gluster/uploads folder.

Note: You cannot export virtual machines to the /mnt/gluster/uploads folder.

nfvis# vmExportAction exportName asaexport exportPath intdatastore:/uploads/ vmName ASAvDep.ASAvMgrp

nfvis# vmImportAction importPath /mnt/gluster/uploads/backup_router.vmbkp

(or)

nfvis# vmImportAction importPath gluster:/uploads/backup_router.vmbkp

Deleting a cluster is similar to cluster creation. The API for cluster deletion has a “no” prefix followed by cluster-name. The delete cluster API should be sent to all the nodes in the cluster to delete the cluster.

Delete the virtual machine images and deployments on the cluster before deleting the cluster.

Note: After a delete cluster API is sent to a node, sending another create cluster API to the same node does not add the node back to the cluster.

nfvis(config)# no cluster cluster1

Use the following commands to manage the files in the glusterFS storage.

nfvis# system file-copy source /data/intdatastore/uploads/test_file_in_intdatastore.txt destination /mnt/gluster/uploads

scp into /mnt/gluster/uploads/

nfvis# scp user@172.16.0.1:/nobackup/userdir/test.py gluster:test.py

scp from /mnt/gluster/uploads/

nfvis# scp gluster:test.py user@172.16.0.1:/nobackup/userdir/

scp from outside

nfvis# scp -P 22222 test.txt admin@172.16.0.2:/mnt/gluster/uploads/

nfvis# show system file-list disk gluster

SI NO NAME PATH SIZE TYPE DATE MODIFIED

--------------------------------------------------------------------------------------

1 TinyLinux.tar.gz /mnt/gluster/uploads 17M VM Package 2022-01-18 16:21:03

nfvis# system file-delete file name /mnt/gluster/uploads/test.txt

Note: The file-copy and file-delete commands only work for the following folders:

The following support commands can be used to debug NFVIS clustering:

nfvis# support show etcd

Possible completions:

all-information Display all etcd information

cluster-health Display cluster health

member-list Display members of the cluster

service-status Display status of etcd services

nfvis# support show gluster

Possible completions:

all-information Display all glusterfs information

mount Display the glusterfs mount information

peer-status Display the glusterfs peer connectivity information

service-status Display status of glusterfs services

volume-info Display the glusterfs volume information

nfvis# show cluster

cluster cluster1

node-address 209.165.201.1

cluster-state ok

creation-time 2022-01-18T15:32:23-00:00

details "Gluster peers Healthy"

disk-usage total 10.0

disk-usage available 10.0

DEPLOYMENT

ADDRESS DEPLOYMENT STATE

----------------------------------------

209.165.201.1

209.165.201.2

209.165.201.3

nfvis# show cluster

cluster cluster1

node-address 209.165.201.1

cluster-state degraded

creation-time 2022-01-18T15:32:23-00:00

details "One Node:

209.165.201.3 in the cluster is down"

disk-usage total 10.0

disk-usage available 10.0

DEPLOYMENT

ADDRESS DEPLOYMENT STATE

----------------------------------------

209.165.201.1

209.165.201.2

209.165.201.3

nfvis# show cluster

cluster cluster1

node-address 209.165.201.1

cluster-state down

creation-time 2022-01-18T15:32:23-00:00

details "Etcd is inactive"

disk-usage total 10.0

disk-usage available 10.0

DEPLOYMENT

ADDRESS DEPLOYMENT STATE

-------------------------------------

209.165.201.1

209.165.201.2

209.165.201.3

nfvis# show cluster migration-status

cluster cluster1

migration-status start-time 2022-01-19T14:48:28

migration-status source-node 209.165.201.1

migration-status destination-node 209.165.201.2

migration-status status MIGRATION-SUCCESS

migration-status details ""

DEPLOYMENT STATUS LAST UPDATE DETAILS

--------------------------------------------------------------------------------------------------

CentosVM MIGRATED 2022-01-19 22:48:56.897 Migrated VM[CentosVM] to new host[209.165.201.1]

OTHER56 MIGRATED 2022-01-19 22:48:57.214 Migrated VM[OTHER56] to new host[209.165.201.2]

Note: In NFVIS 4.8, the show cluster command only displays the latest migration status.
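The cluster-state field in the show cluster outputs above (ok, degraded, down) lends itself to a simple automated check. The sketch below parses captured command output, supplied as a string, into a small dictionary. It assumes the one-field-per-line layout shown in this document, which may differ from the exact spacing on a live system, and the function names are hypothetical.

```python
SUMMARY_KEYS = ("cluster", "node-address", "cluster-state", "creation-time")


def parse_show_cluster(output: str) -> dict:
    """Extract the summary fields from captured `show cluster` output."""
    summary = {}
    for raw_line in output.splitlines():
        line = raw_line.strip()
        for key in SUMMARY_KEYS:
            prefix = key + " "
            if line.startswith(prefix):
                summary[key] = line[len(prefix):].strip()
    return summary


def needs_attention(summary: dict) -> bool:
    """True unless the cluster reports the `ok` state."""
    return summary.get("cluster-state") != "ok"


SAMPLE = """cluster cluster1
node-address 209.165.201.1
cluster-state degraded
creation-time 2022-01-18T15:32:23-00:00
"""

state = parse_show_cluster(SAMPLE)
print(state["cluster-state"], needs_attention(state))
```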

| Description | Notification | Seen on |
|---|---|---|
| Cluster creation in progress | | All nodes |
| Cluster creation success | | Leader node |
| Cluster creation failure | | All nodes |
| Cluster deletion in progress | | All nodes |
| Cluster deletion success | | Node where deletion succeeded |
| Cluster deletion failure | | Node where deletion failed |
| VM migration initiated | | Destination node for migration |
| VM migration completed | | Destination node for migration |
| VM migration failed at source | | At source if validation fails at source |

| Description | Syslog | Sent from |
|---|---|---|
| Cluster creation in progress | | All nodes |
| Cluster creation success | | Leader node |
| Cluster creation failed | | All nodes |
| Cluster deletion in progress | | All nodes |
| Cluster deletion success | | Node where the deletion succeeded |
| Cluster delete failed | | Node where the deletion failed |
| VM migration initiated | | Destination node for migration |
| VM migration completed | | Destination node for migration |
| VM migration failed at source | | At source if validation fails at source |