- Executive Summary

- Navigator

- Audience

- Document Objective and Scope

- Use Cases/Services/Deployment Models

- Solution Overview

- SEL FLISR Use case Validation Call Flow Sequence

- Cisco SEL FLISR Use case – Urban Topology

- Urban FLISR Topology - SEL device to Cisco device mapping

- FLISR Fault scenario - Fault with Lock Out

- FLISR Fault scenario – Open Phase

- FLISR Fault scenario – Loss of Source

- Cisco SEL FLISR Use case – Rural Topology

- SEL FLISR Rural Topology - FLISR Fault scenario - Fault with LockOut

- SEL FLISR Rural Topology - FLISR Fault scenario – Open Phase

- SEL FLISR Rural Topology - FLISR Fault scenario – Loss of Source:

Distribution Automation - Feeder Automation Design Guide

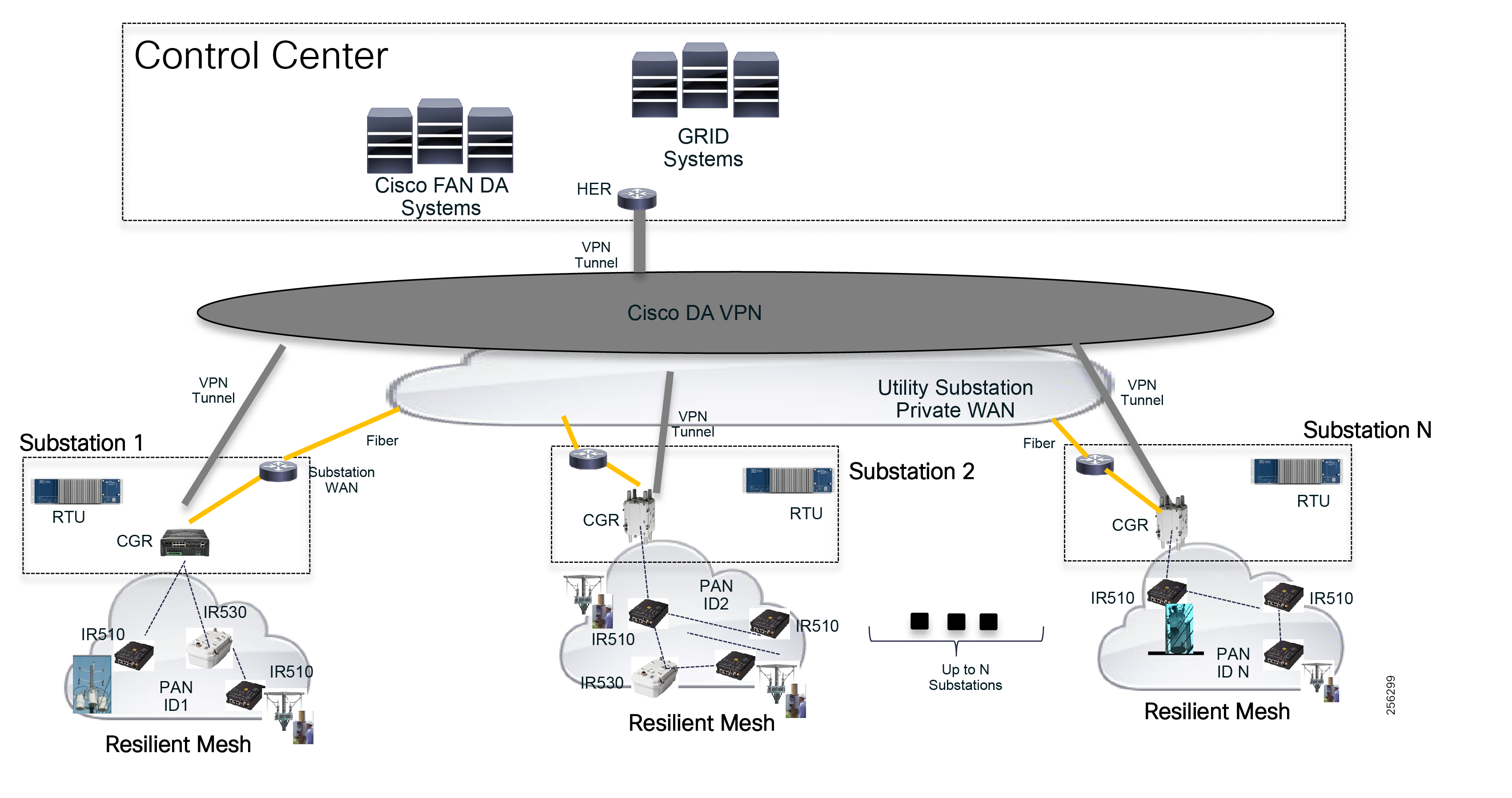

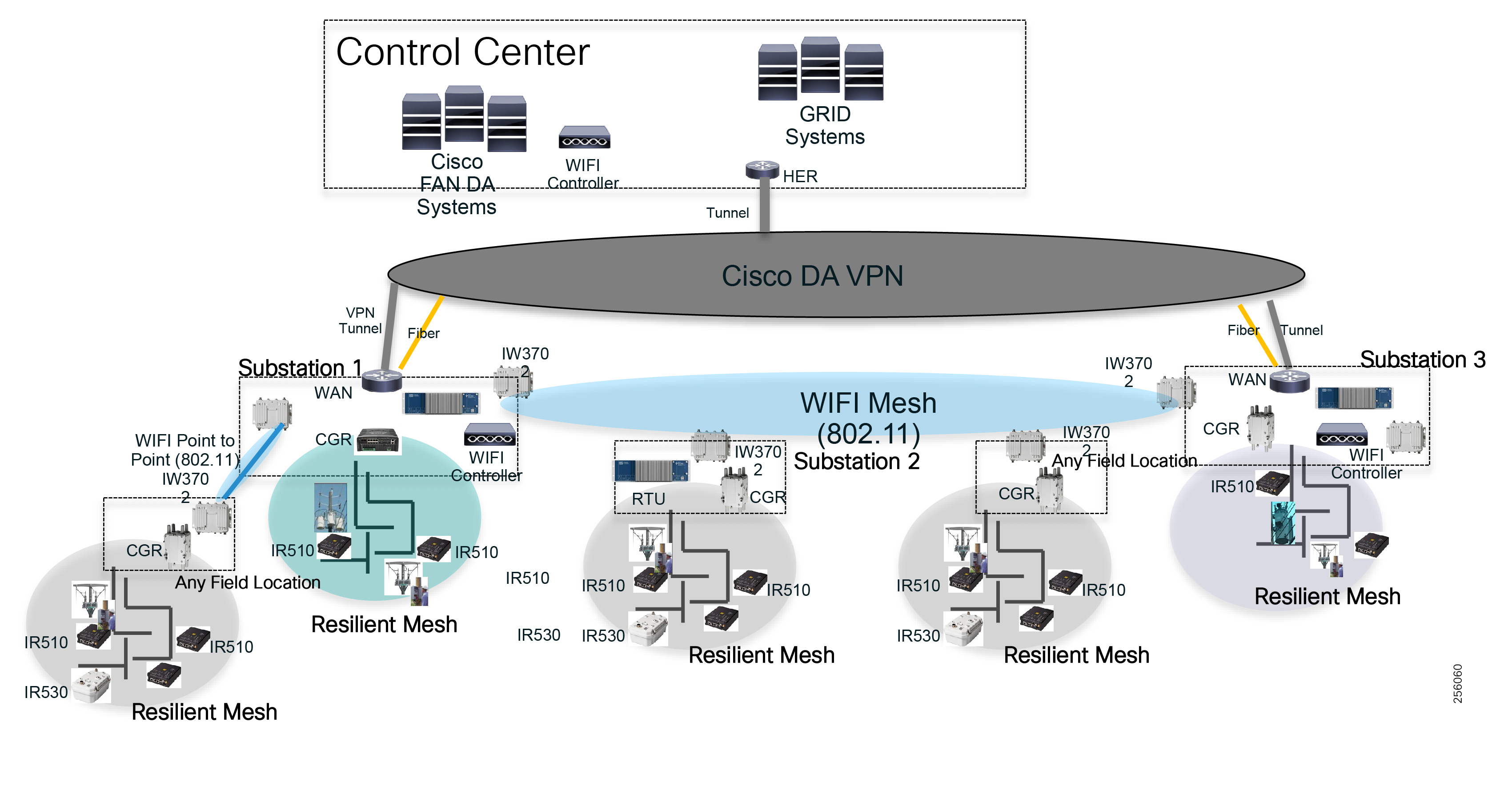

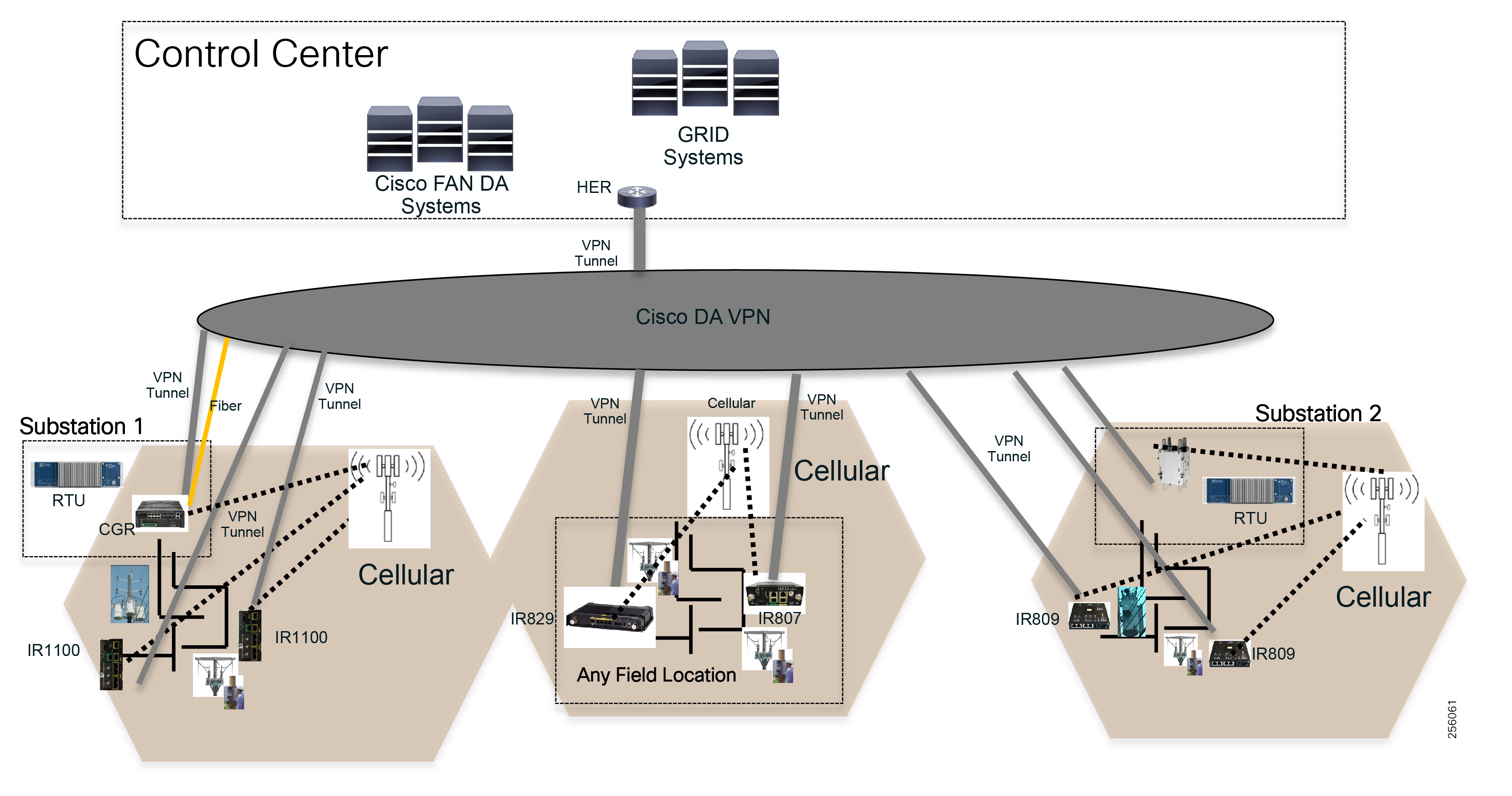

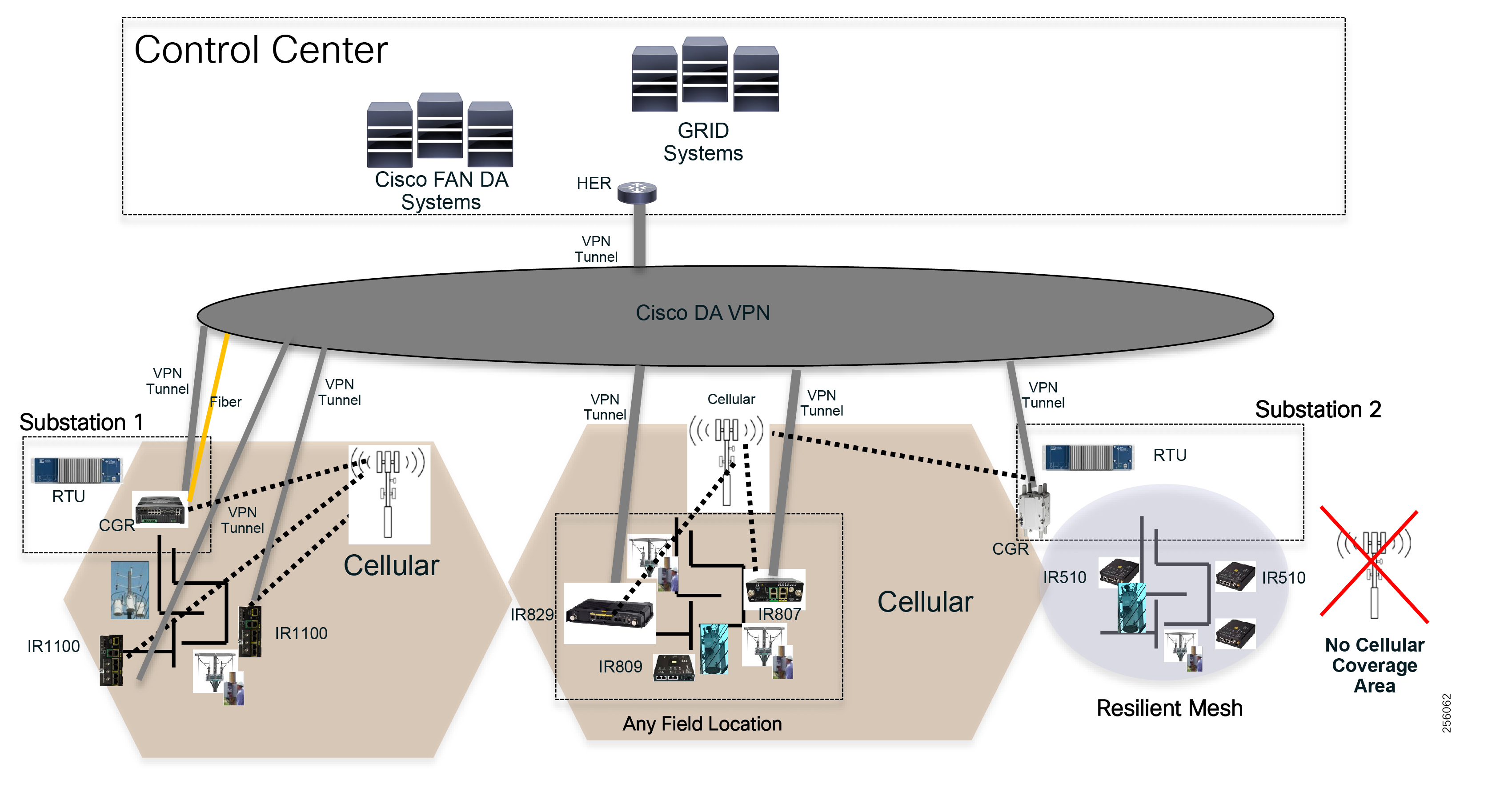

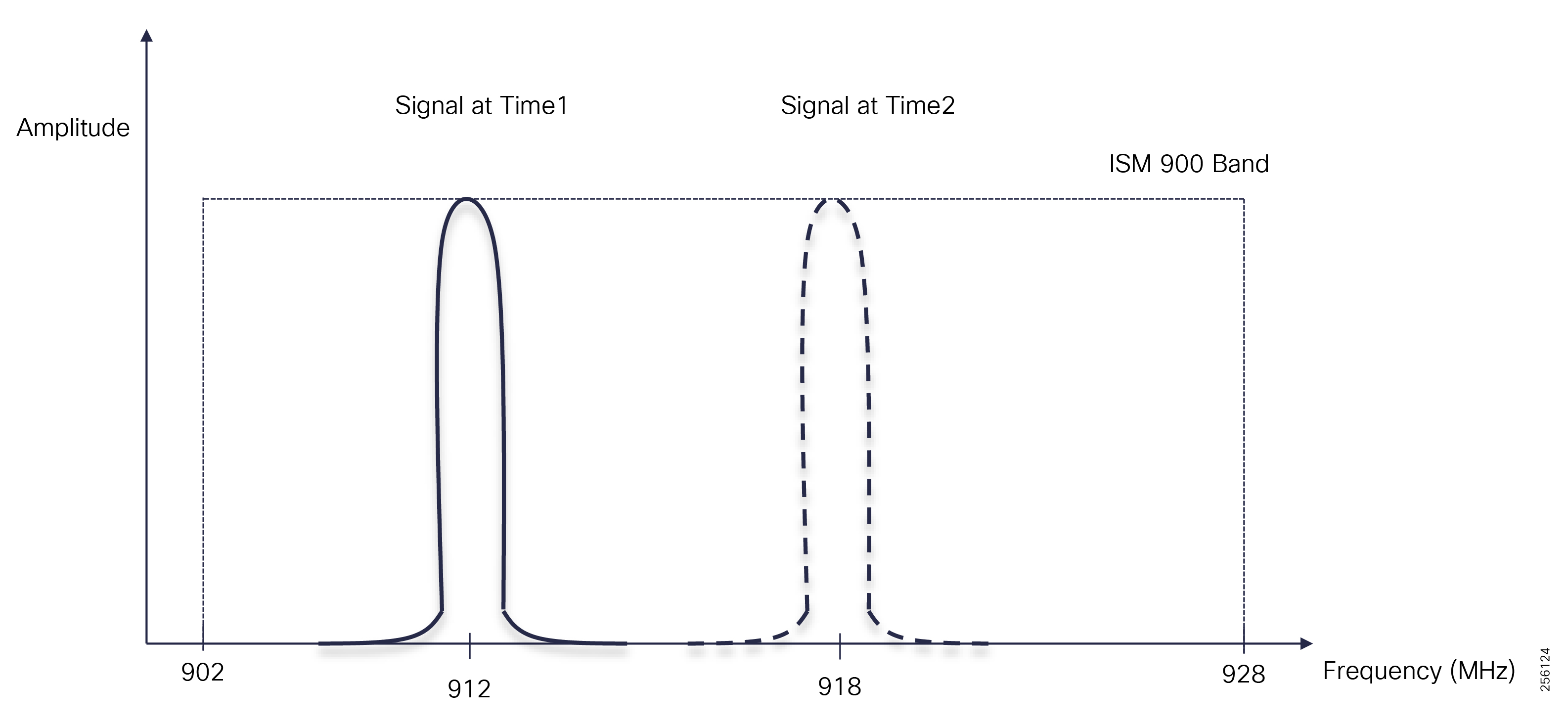

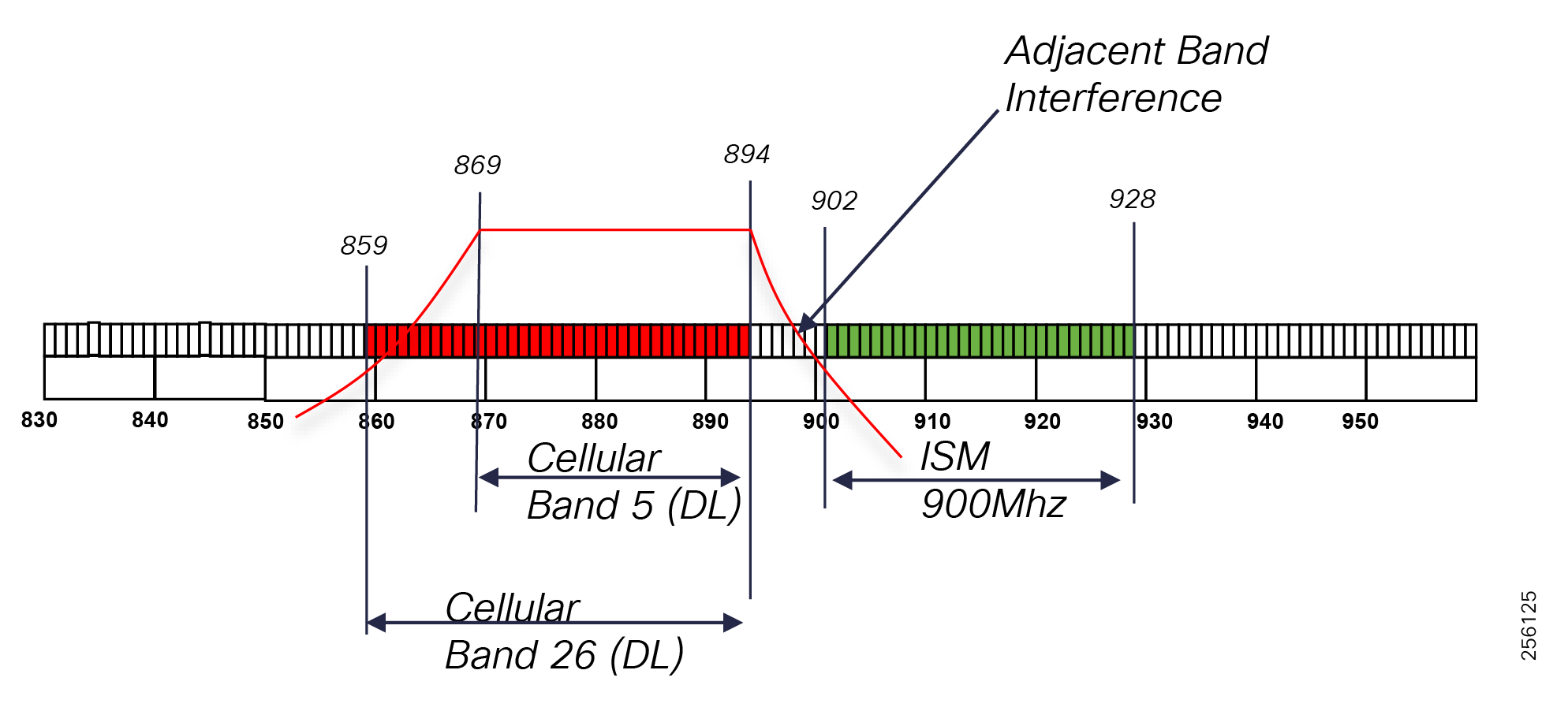

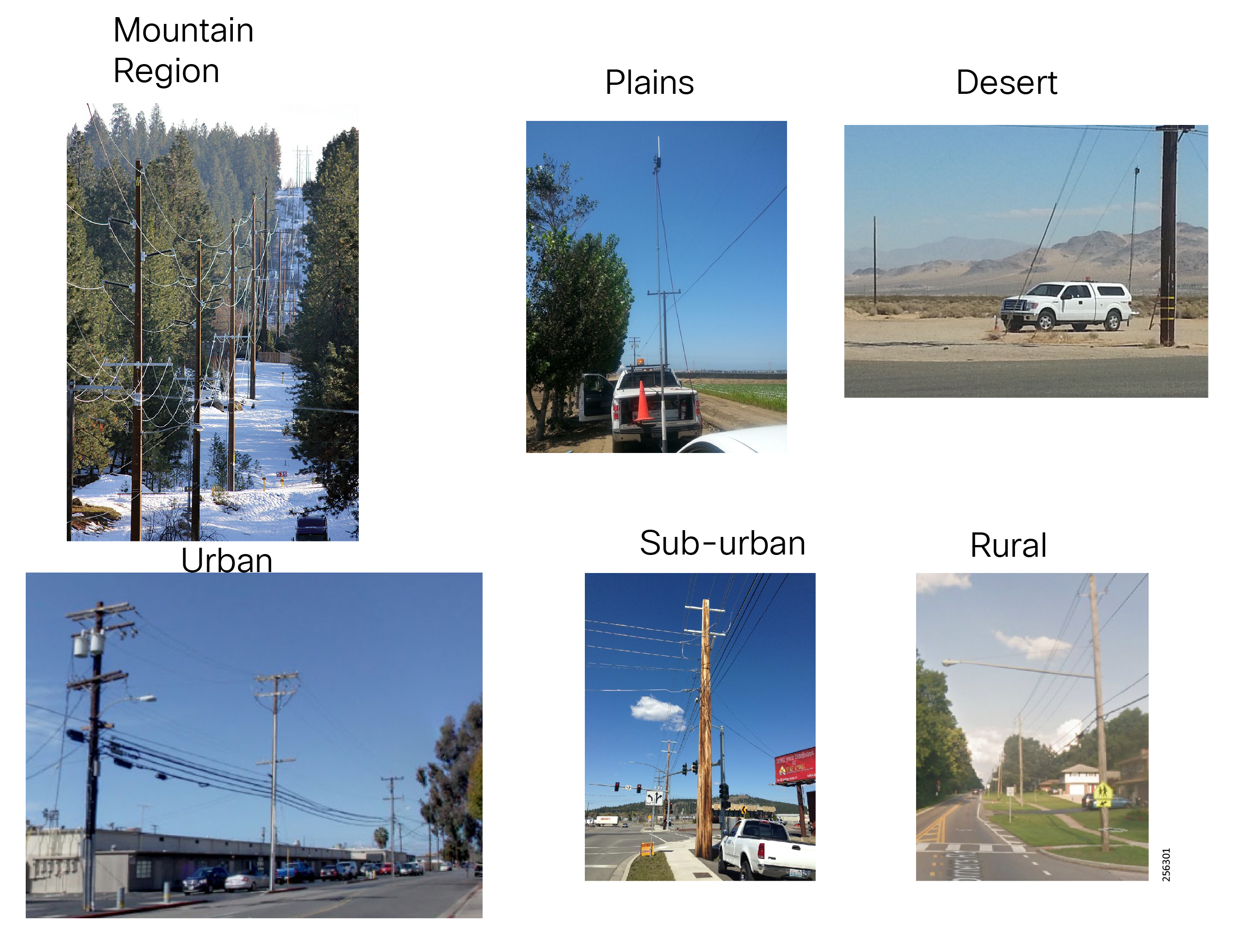

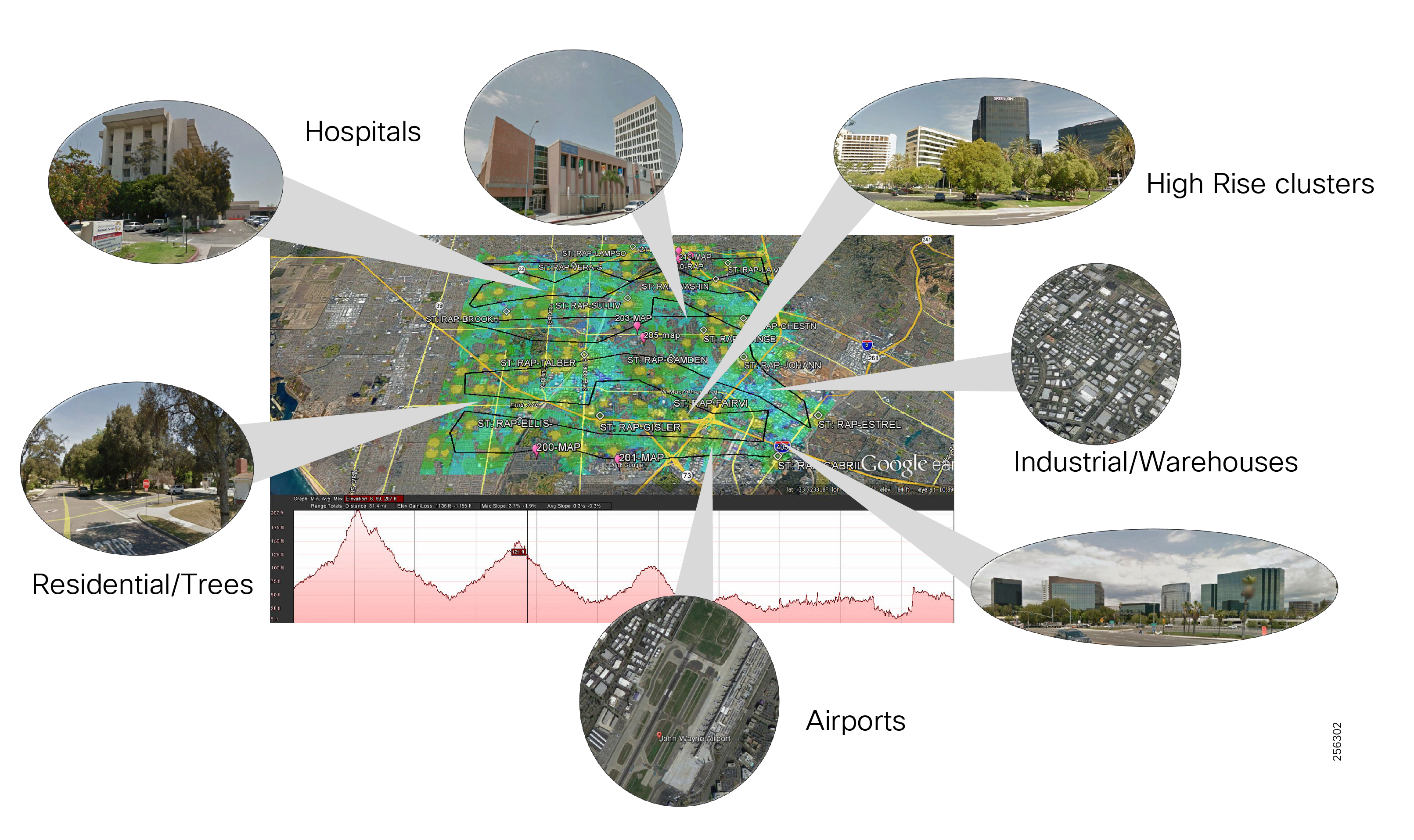

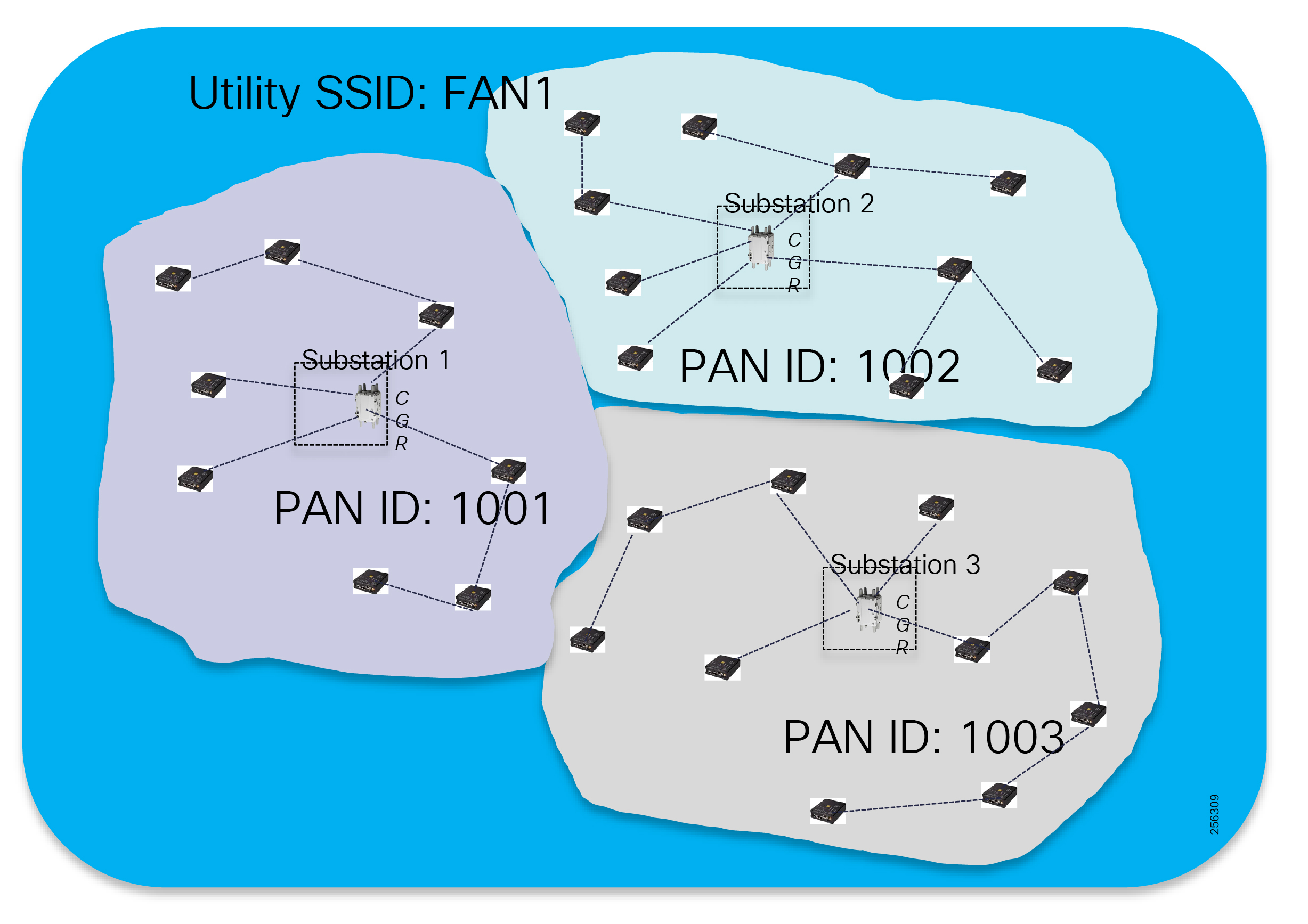

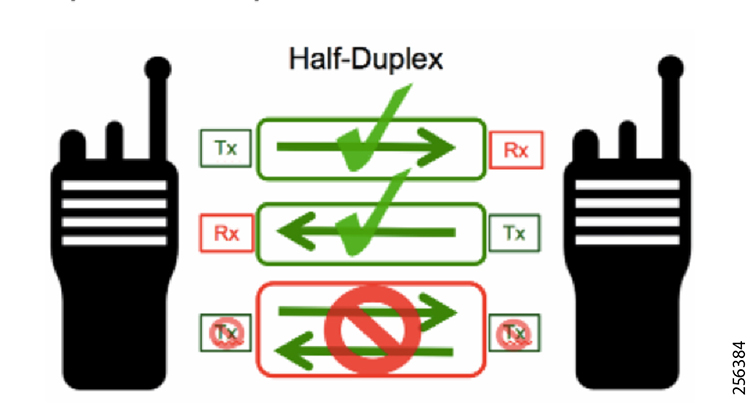

The Cisco Distribution Automation - Feeder Automation Design Guide provides a comprehensive explanation of the entire end-to-end Cisco Smart Grid Field Area Network (FAN) solution design, which was developed for the Utility Industry in the Americas region and leverages the license free spectrum: ISM band 902 - 928 MHz for last mile connectivity of the Distribution Network Grid devices. The document describes the two most common Distribution Automation use cases for monitoring and control of Distribution electrical lines equipment: Volt/VAR and Fault Location, Isolation, and Service Restoration (FLISR). It also includes information about the system's architecture, solution components, product choices, design models, and design considerations. This design targets implementations that will use the customer's Substation Private WAN as backhaul for the Resilient Mesh Network to transport data from grid devices in the field to the Control and Operation Centers. The document concludes with a high-level overview of a Feeder Automation Design based on Public Cellular Service that leverages Cisco's Cellular Industrial Routers (IR) Series products.

Executive Summary

Several key business drivers underlie the optimization of the distribution grid enabled by this solution. A pervasive, highly available, and well designed communications network will help enable increased reliability and availability while also reducing OpEx.

Cisco Systems is addressing the networking needs of the utility industry. Specifically, in this Distribution Automation - Feeder Automation Design Guide, the communications solutions that address the utility distribution grid with use cases such as SCADA transport, FLISR, and line voltage-monitoring enabling applications such as Volt/VAR Control are being highlighted. Field devices like transformers can offer predictive maintenance opportunities that will help eliminate customer outages and expensive unscheduled repairs and truck rolls.

The Cisco Distribution Automation validated solution, which is part of the Cisco portfolio of industry-leading, validated, and secure networking solutions for substation automation, Utility WAN, and Field Area Network Advanced Meter Infrastructure (FAN AMI), provides the following unique capabilities for distributed control and protection operations:

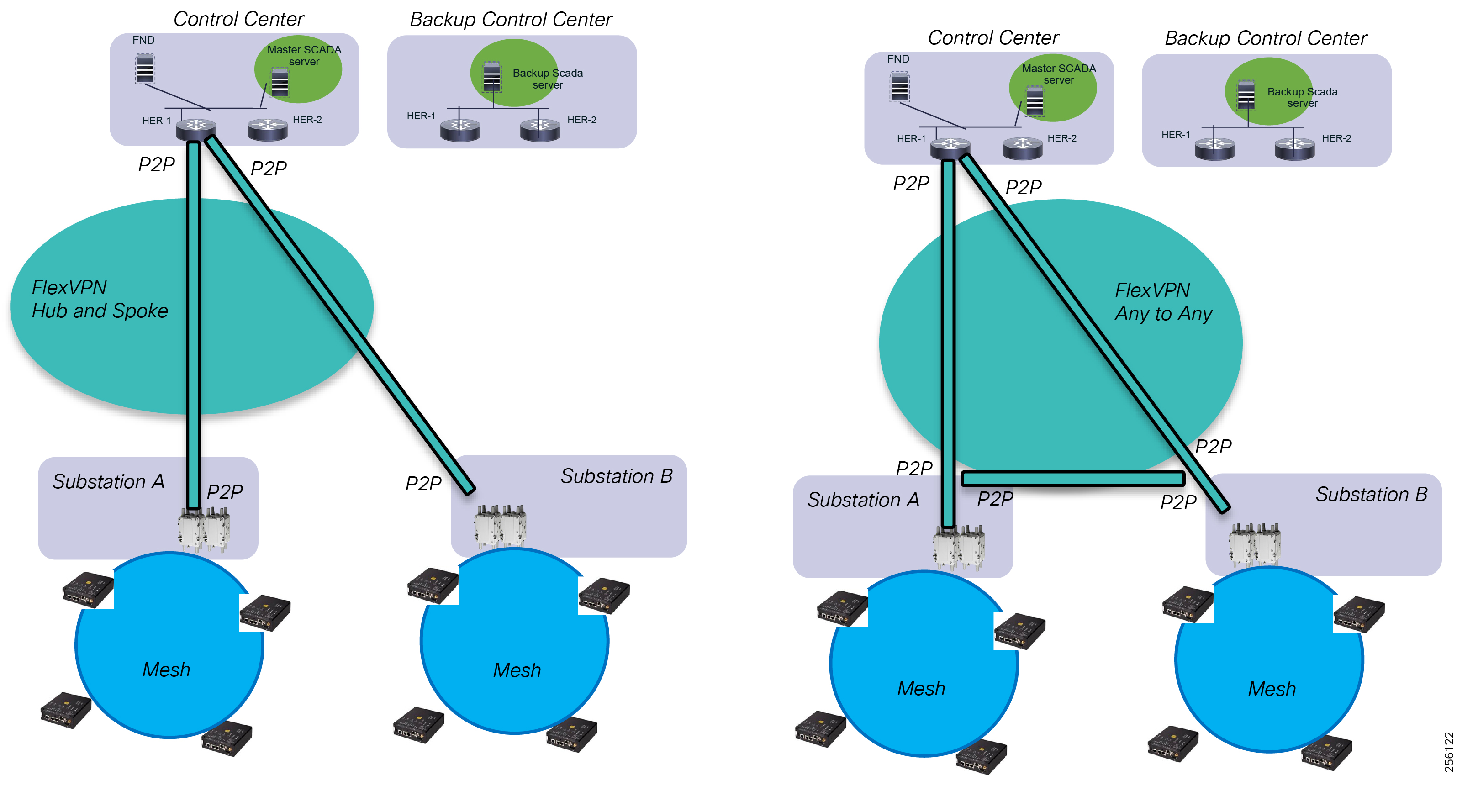

■![]() Cisco Resilient Mesh and cellular networking with FlexVPN technologies that are cost-effectively built to scale for the large number of Distribution Automation devices being enabled in the distribution grid

Cisco Resilient Mesh and cellular networking with FlexVPN technologies that are cost-effectively built to scale for the large number of Distribution Automation devices being enabled in the distribution grid

■![]() An IT-preferred security architecture, including hardware and software certification management, firewall, and malware protection with robust encryption to help ensure secure network communications and edge applications

An IT-preferred security architecture, including hardware and software certification management, firewall, and malware protection with robust encryption to help ensure secure network communications and edge applications

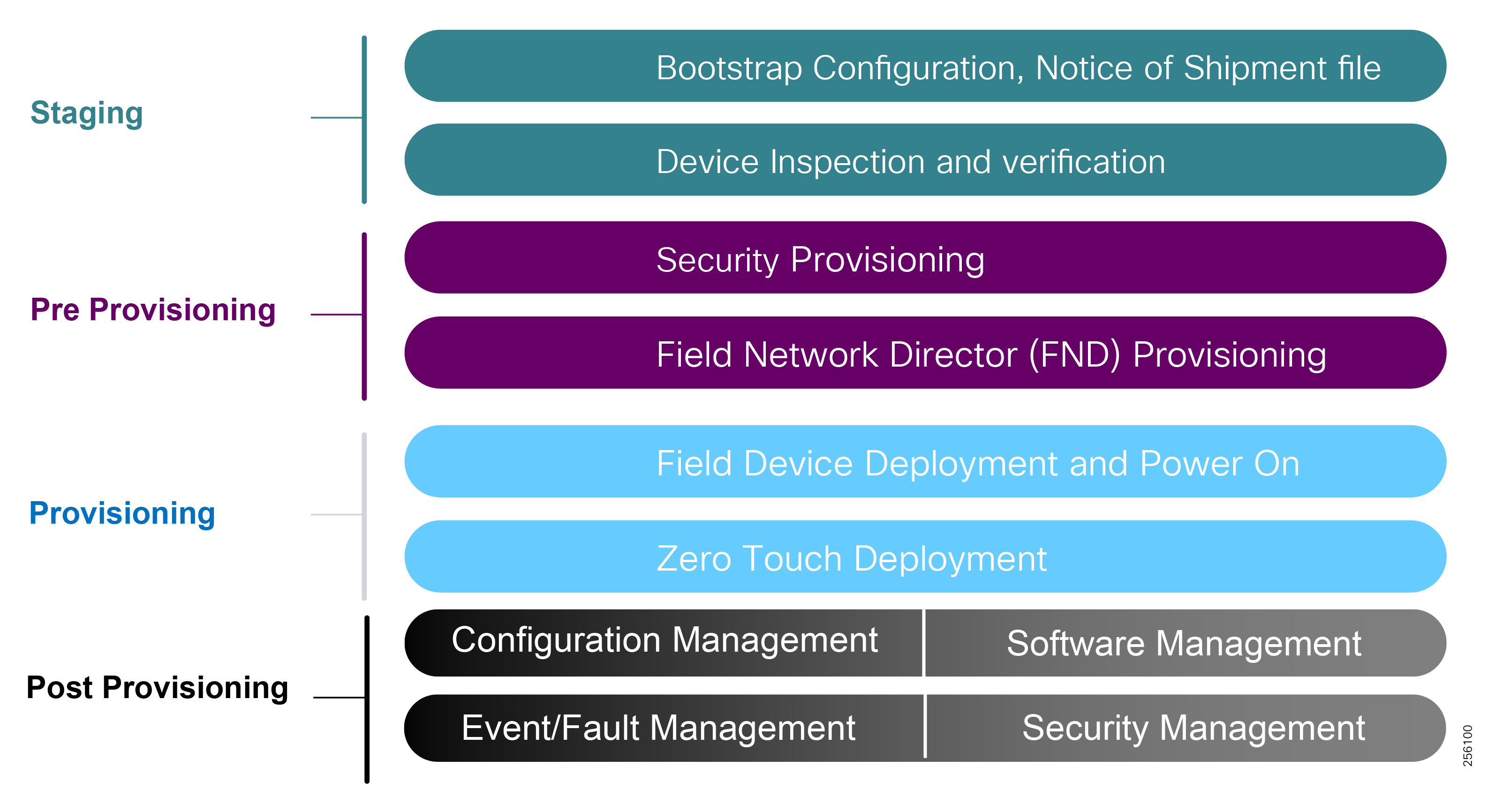

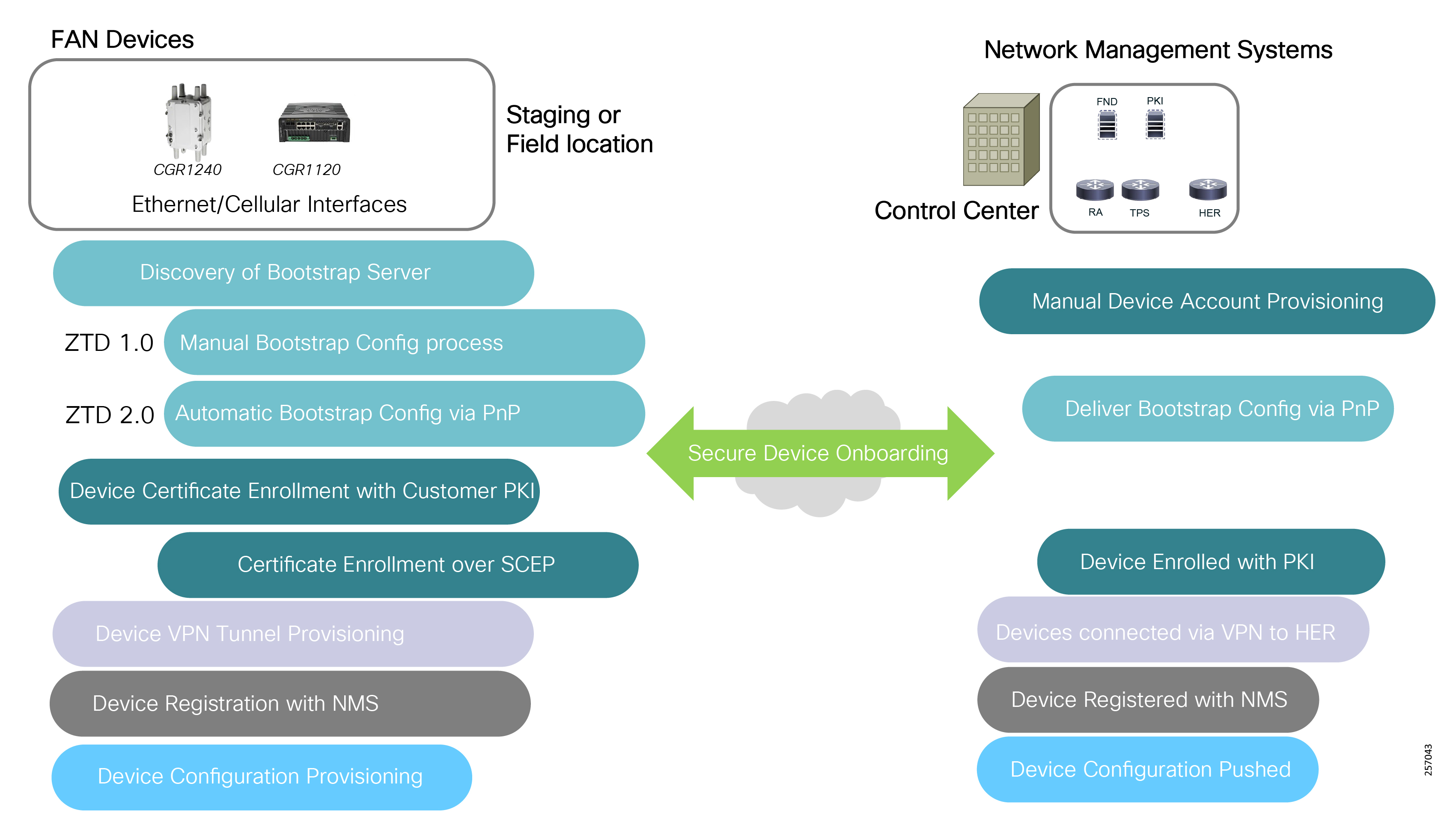

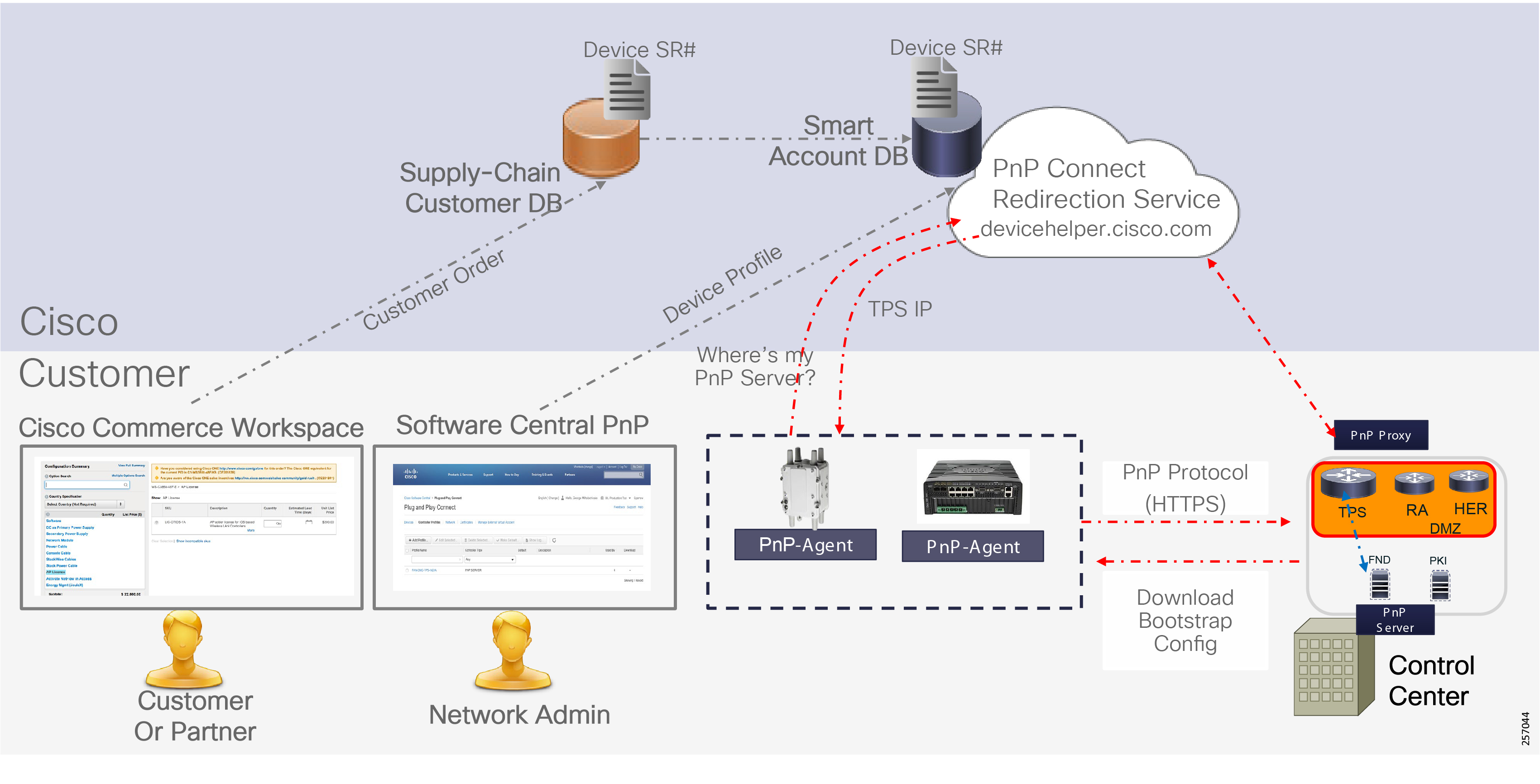

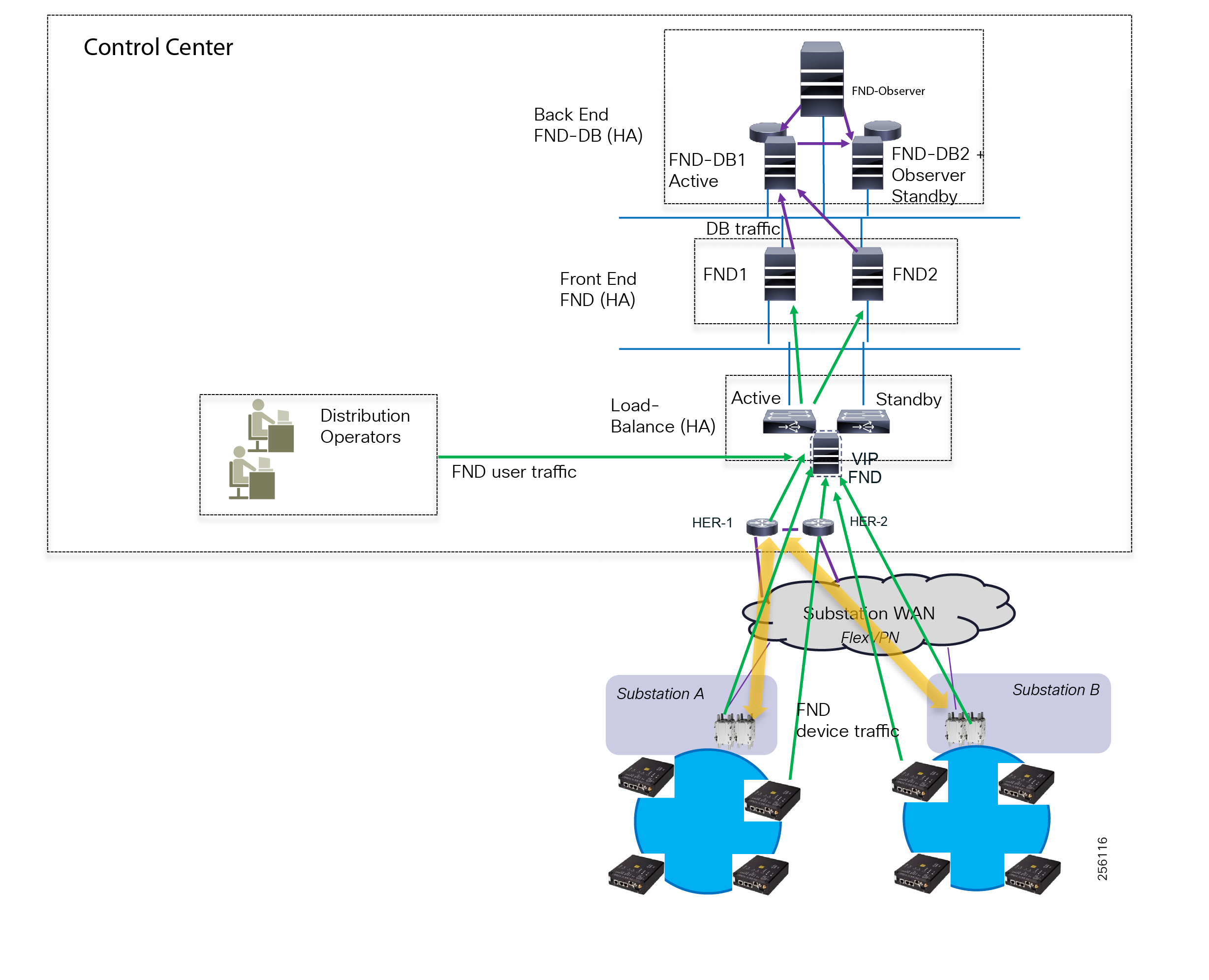

■![]() Enhanced management and serviceability by Cisco Field Network Director (FND) with Zero Touch Deployment (ZTD) and plug-and-play (PnP) functionality to help enable deployment and enhance operations

Enhanced management and serviceability by Cisco Field Network Director (FND) with Zero Touch Deployment (ZTD) and plug-and-play (PnP) functionality to help enable deployment and enhance operations

■![]() High availability that is designed in the headend and Wide Area Network (WAN), with redundant control center support

High availability that is designed in the headend and Wide Area Network (WAN), with redundant control center support

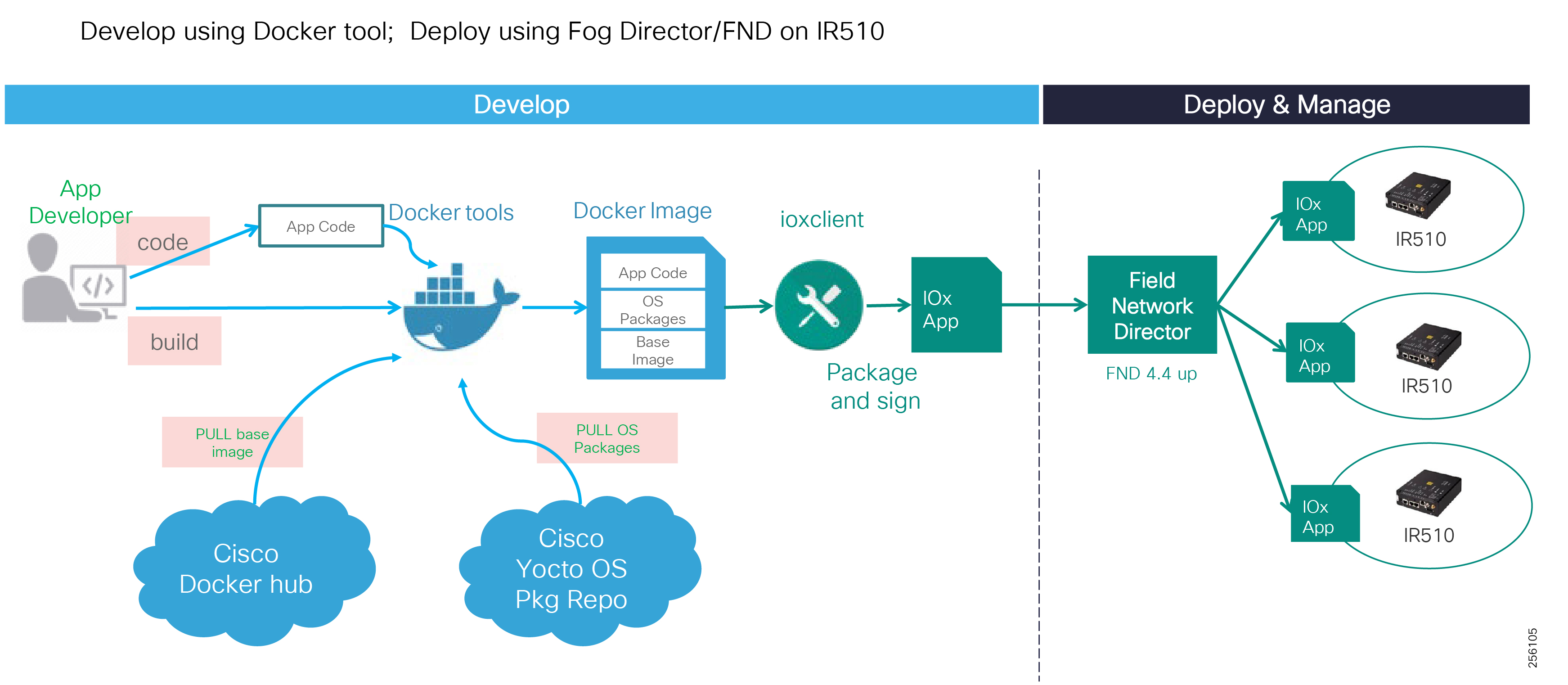

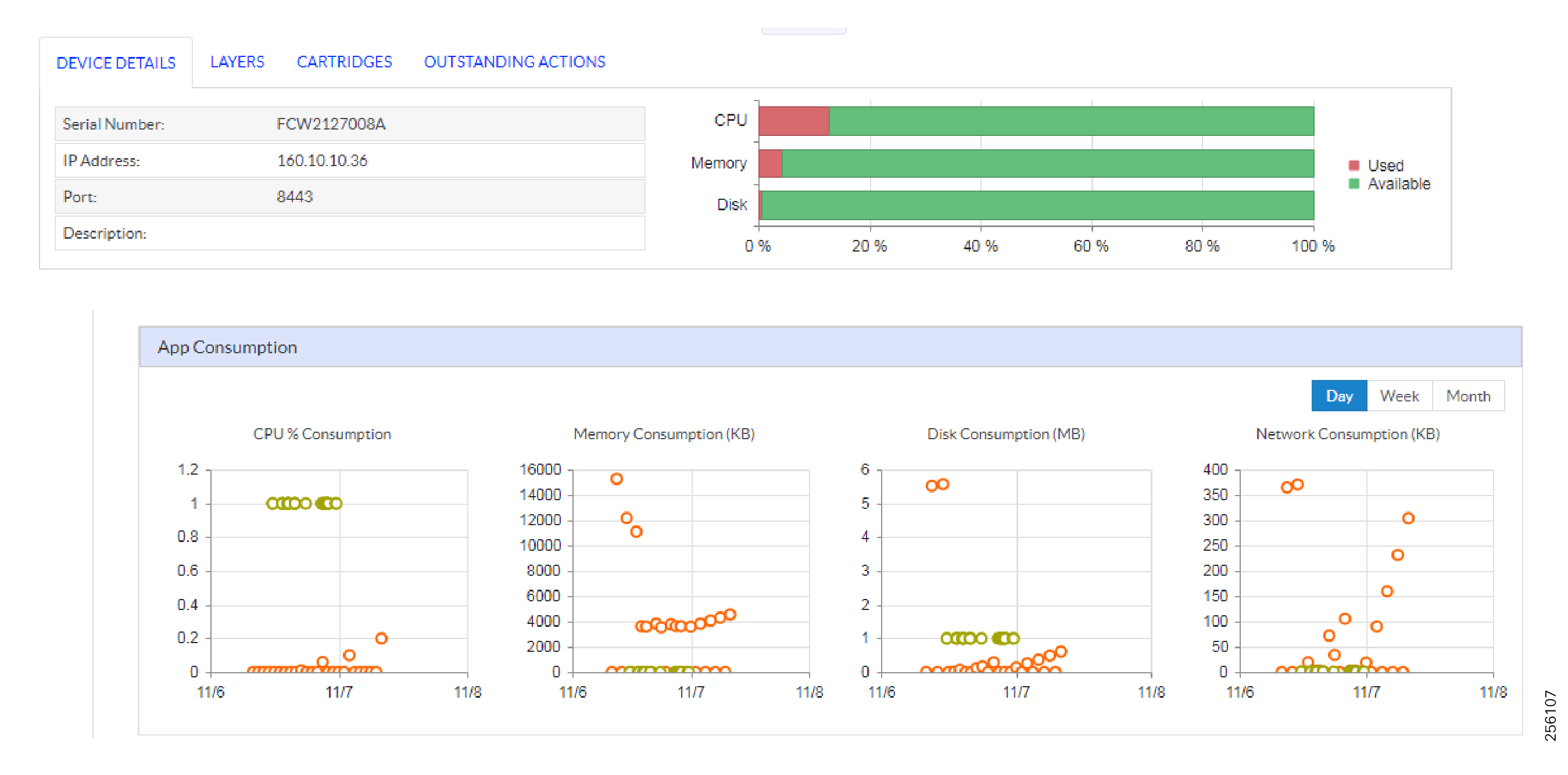

■![]() Edge application capabilities within FND lifecycle-managed Cisco equipment that include deployment, monitoring, upgrading, and troubleshooting

Edge application capabilities within FND lifecycle-managed Cisco equipment that include deployment, monitoring, upgrading, and troubleshooting

■![]() End-to-end testing and validation, which are completed and documented with various Distribution Automation device vendors and use cases

End-to-end testing and validation, which are completed and documented with various Distribution Automation device vendors and use cases

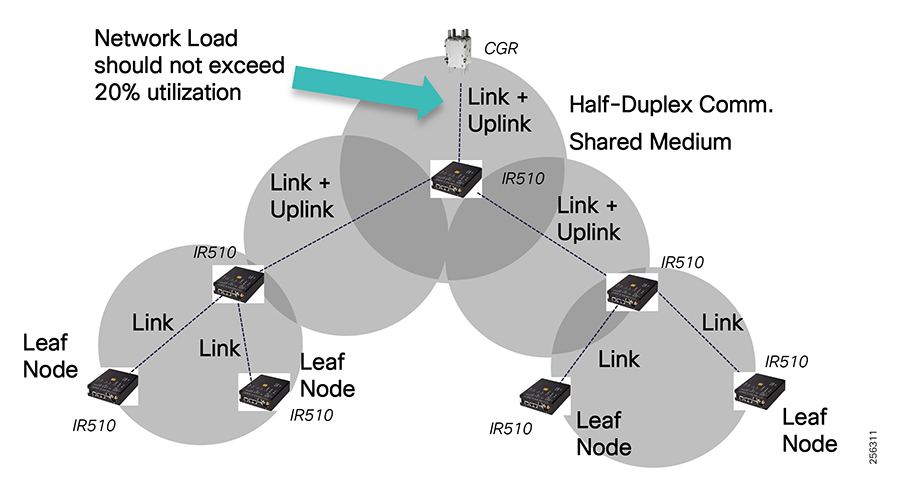

The recent enhancements to Cisco Resilient Mesh have increased by nearly tenfold the available bandwidth on the 900mhz field area network over the first generation, thus also reducing the latency between hops, helping enable peer-to-peer communication, and equipping the network with enhanced security features. Cisco has transformed a previously low performance wireless mesh network that was designed for smart metering into a network that is suitable for Distribution Automation use cases.

Cellular can be applied to areas or use cases where extremely high performance is needed. Since they are managed under a single highly usable Field Network Director (FND) system, the customer will receive a consistently intuitive management experience.

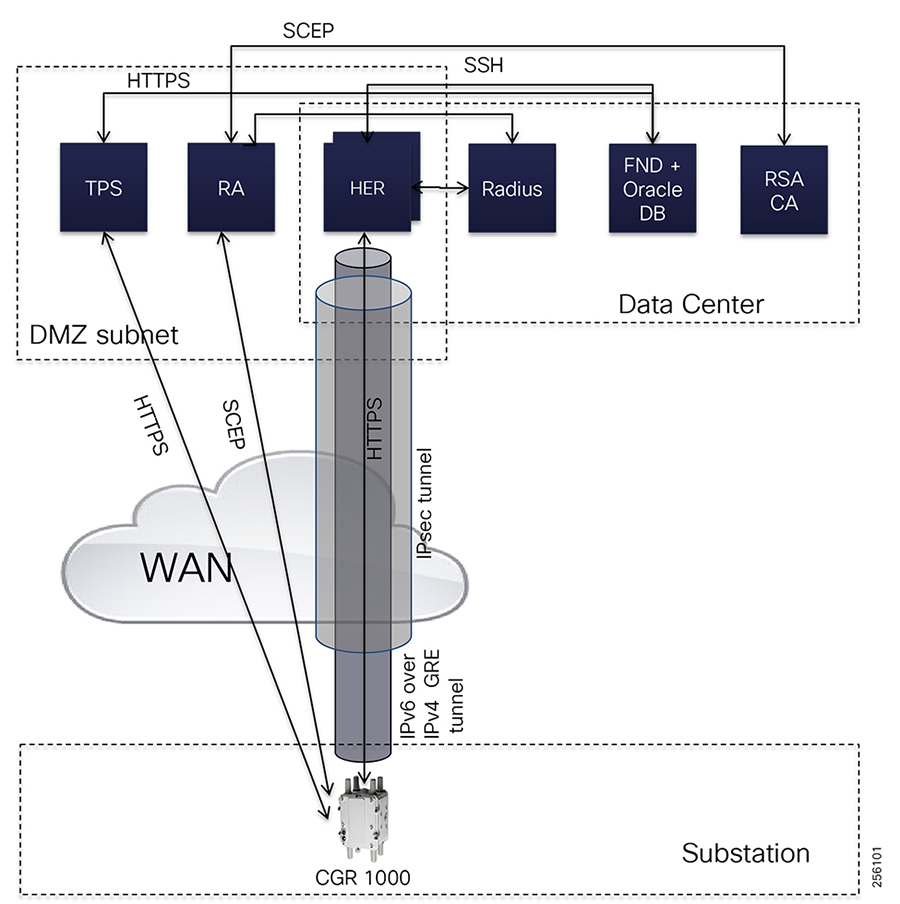

As a foundational element to any Cisco network, this DA architecture leverages enhanced security from the control center to the edge of the distribution network. The result is a reliable, scalable, and highly available DA network via wired and wireless, and a cellular WAN that supports large-scale DA deployments and secures communications to redundant control centers.

Deployment, ongoing operation, and management is simplified via standards-based protocols and ZTD tools for proven large scale DA network provisioning. This is all addressed in detail as part of this design guide.

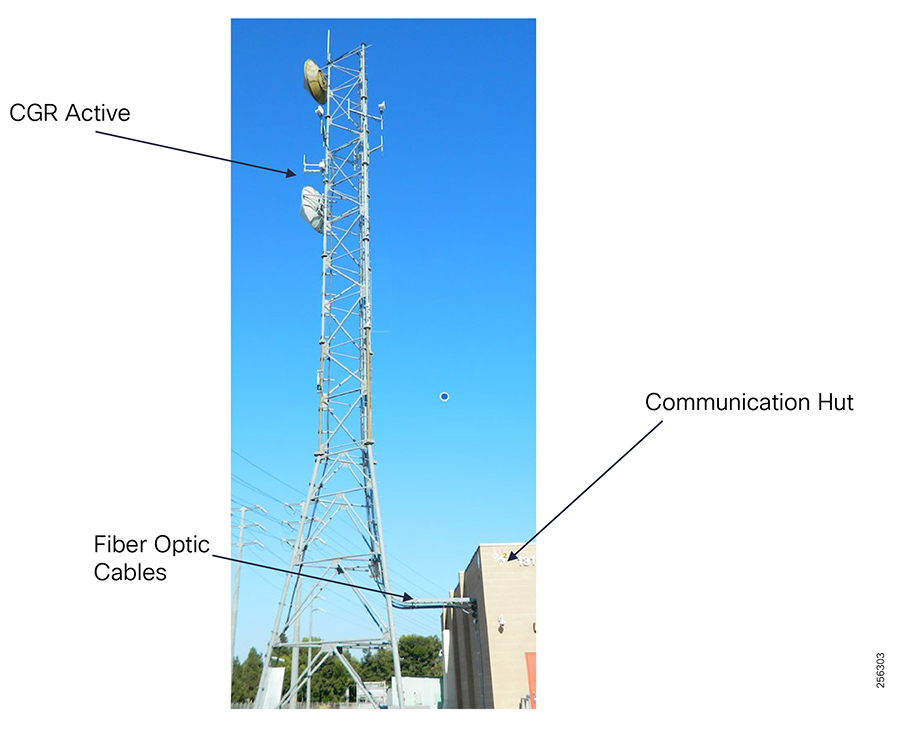

This document covers this DA communications solution, which is based on industry-leading innovations in Cisco Resilient Mesh and cellular networking technologies that are built into the Cisco CGR 1240 and CGR 1120 Connected Grid Routers; the Cisco IR510 and IR530 Wi-Sun Mesh Industrial Routers product family; the IR807, IR809, and IR1101 Industrial Router cellular gateways; and the Cisco FND management system.

Navigator

The table describes the chapters in this document:

|

|

|

Review of the Utility Industry Distribution Use Cases: Volt/VAR and FLISR. It is intended for readers who are unfamiliar with the industry DA applications. |

|

Introduction to Cisco solution's product portfolio, product characteristics, and usage guidance for product selection based on utility footprint with reference links to documentation across the three main tiers: NAM, WAN, and Energy Operations Center or DC. |

|

Describes at a high level the different industry DA architectures (centralized versus distributed) and the three Cisco FAN design options available to support these DA architectures. |

|

Design Considerations for DA Feeder Automation Deployments Based on 900MHz ISM Band Solution |

Explains in detail the Cisco FAN Distribution Automation design based on 900Mhz ISM band Spectrum. It also contains the design specifications and functional description of aspects such as RF communication, network infrastructure, routing, security, and QoS across the FAN tiers. |

DA Feeder Automation using Cellular Service (3G/4G) Solution |

Overview of the Cisco FAN DA design based on Public Cellular Service solution. |

Audience

The intended audience for this guide is comprised of, but is not limited to, system architects, network/compute/systems engineers, field consultants, Cisco Customer Experience (CX) specialists, partners, and customers.

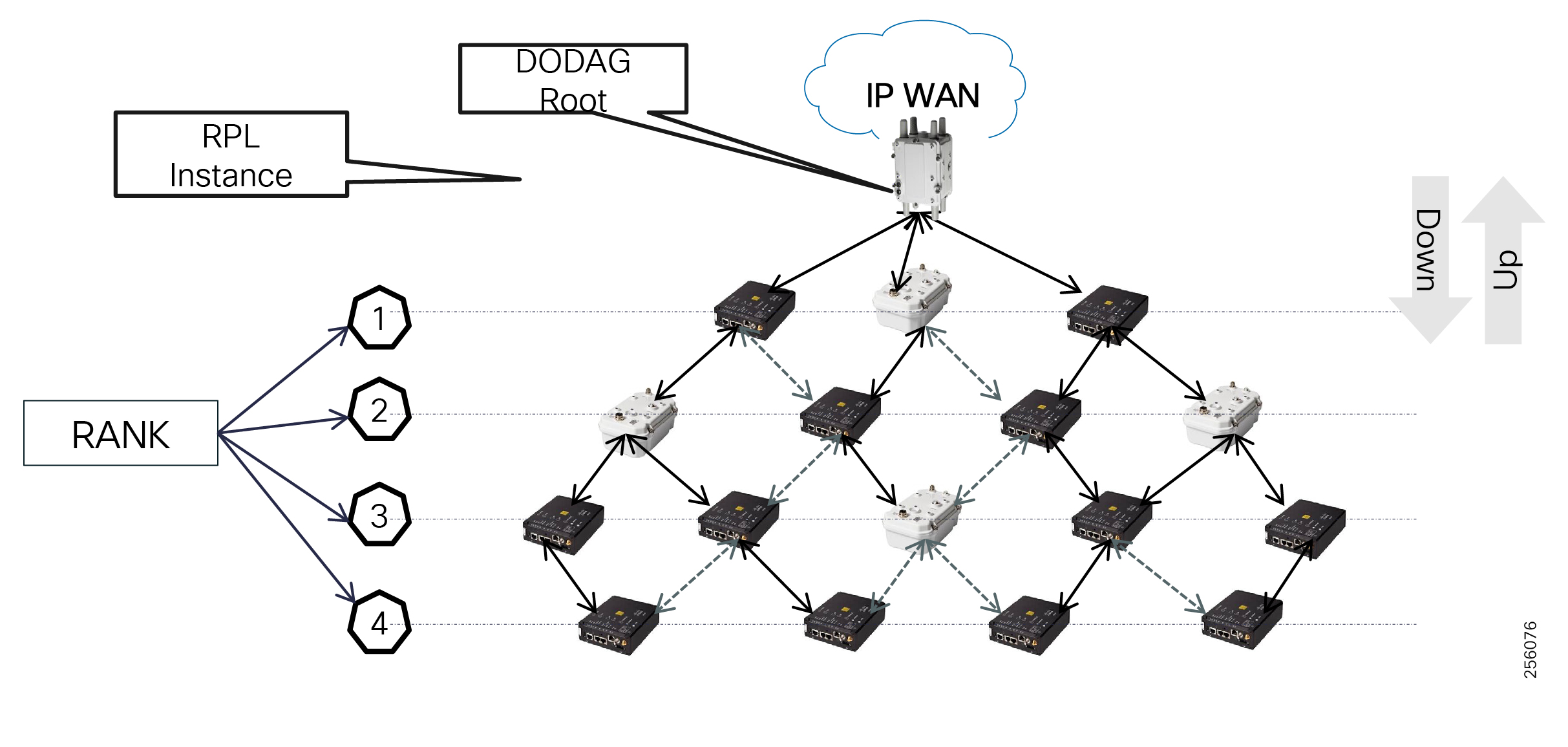

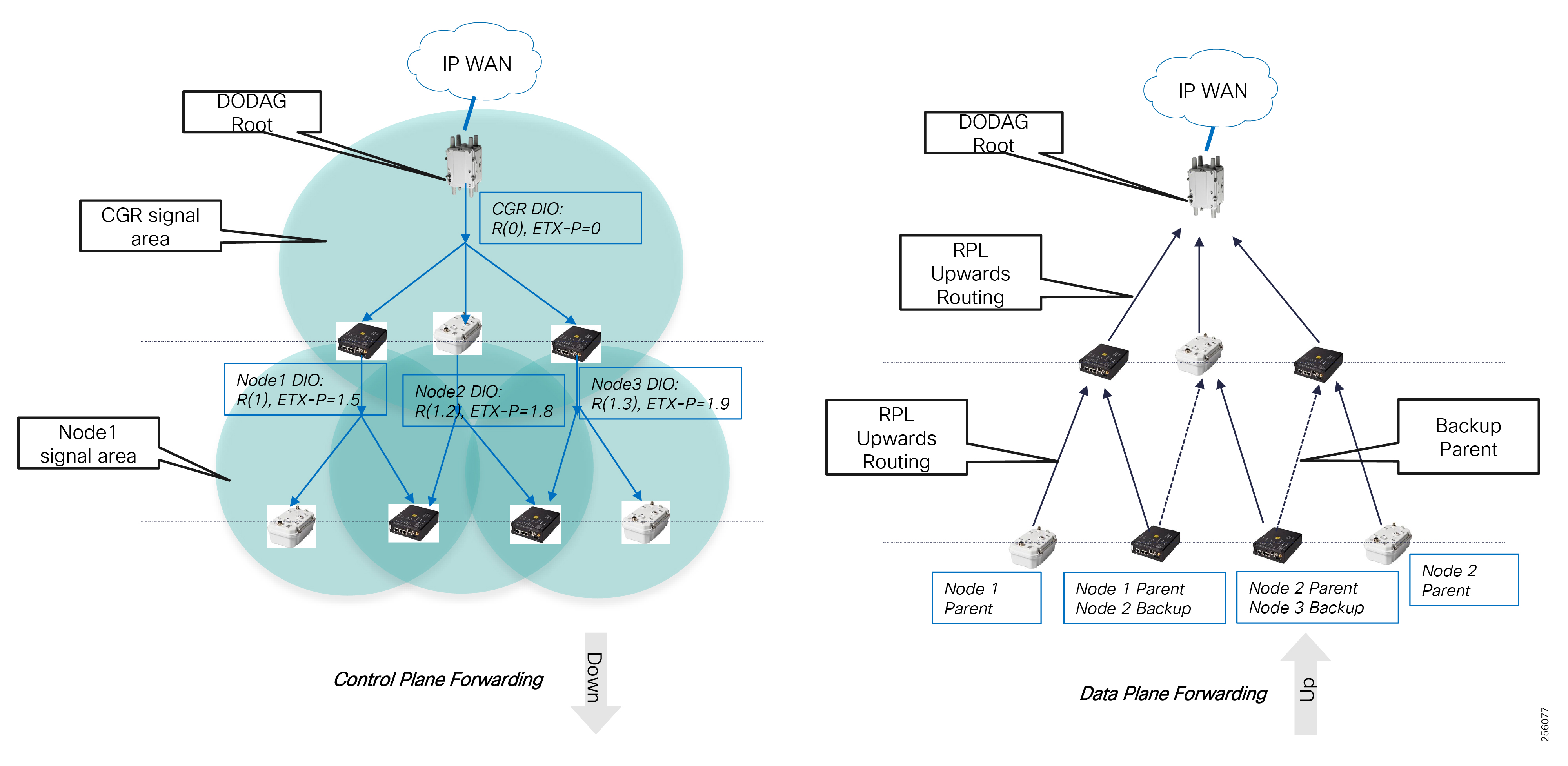

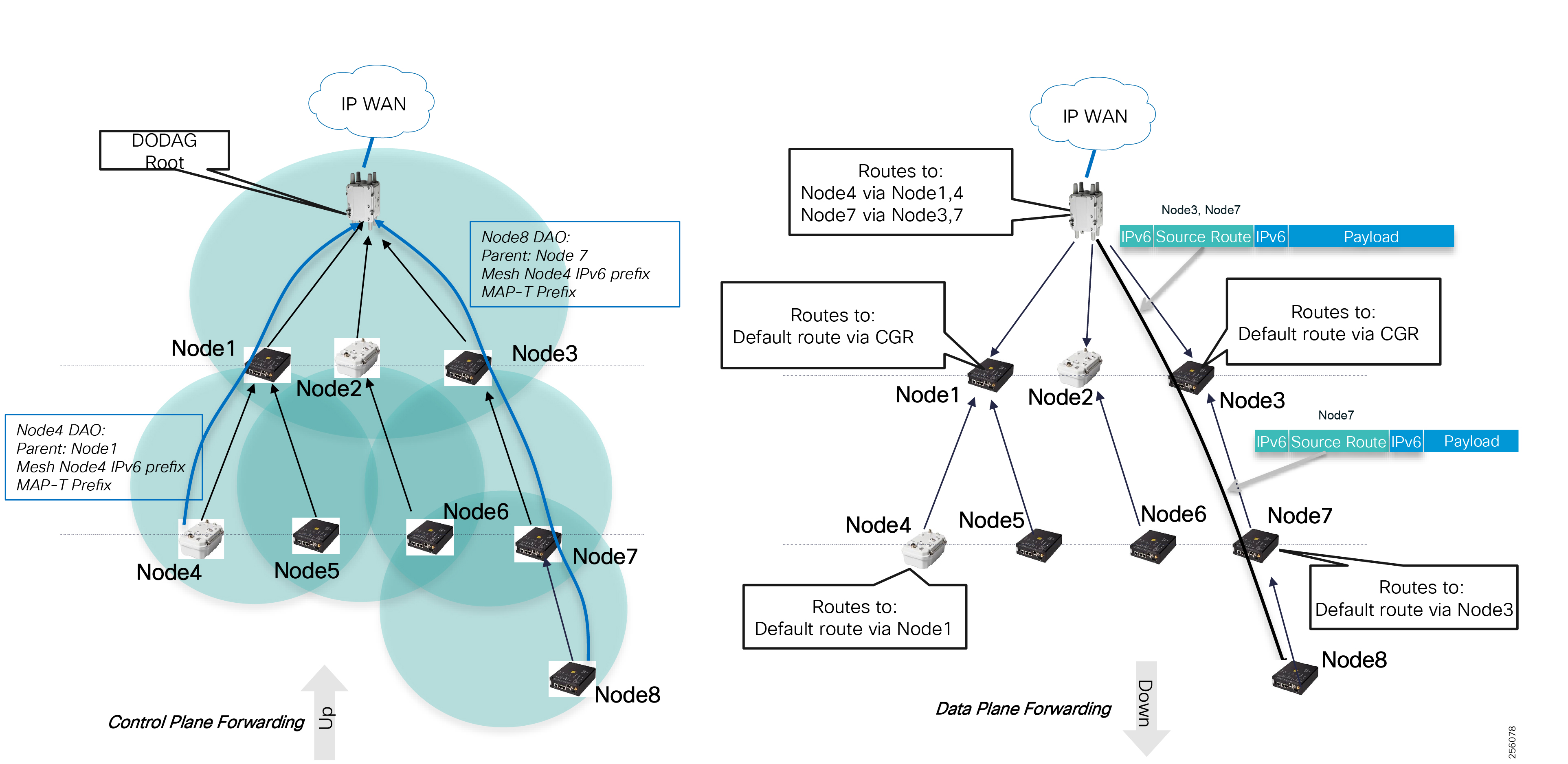

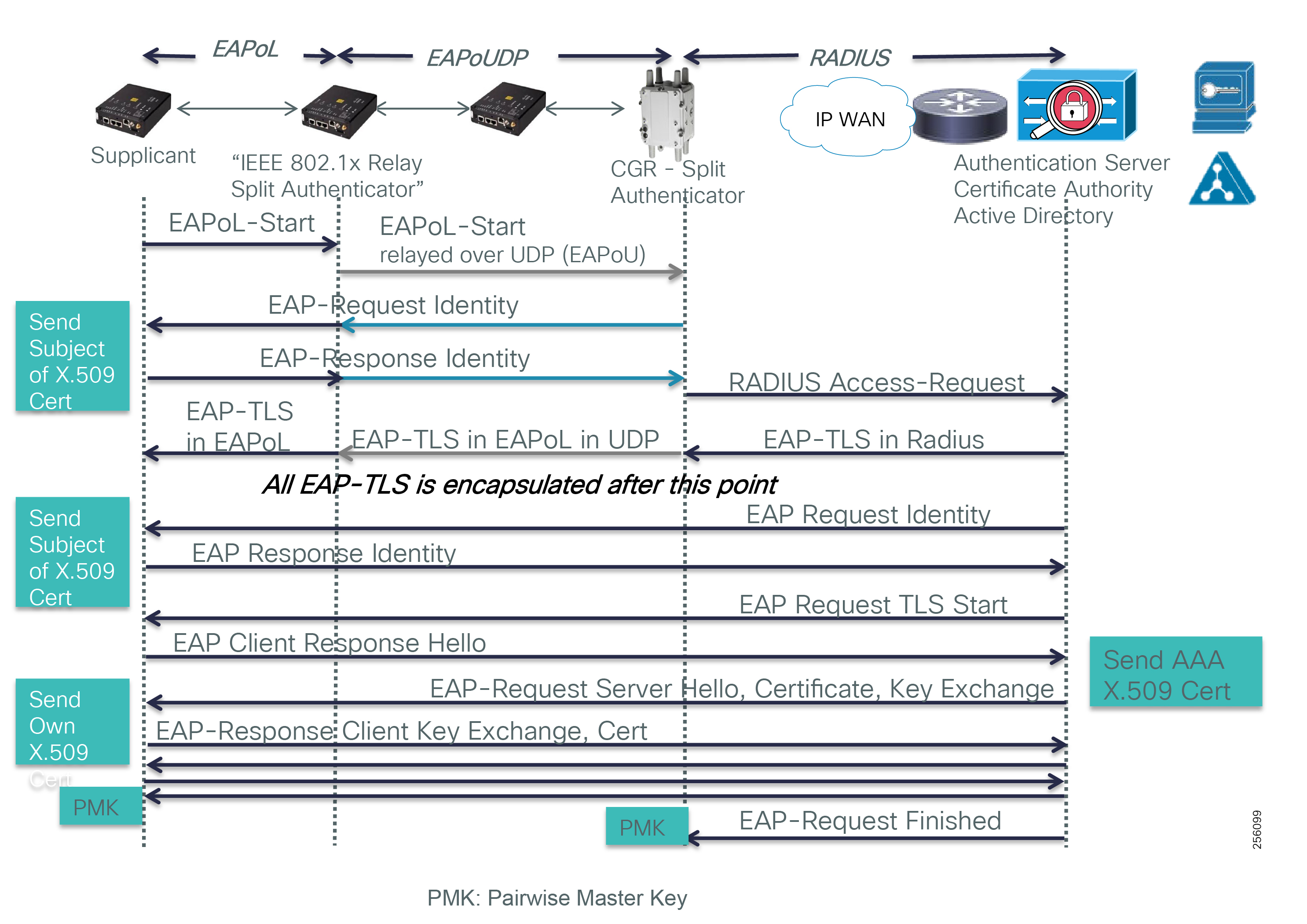

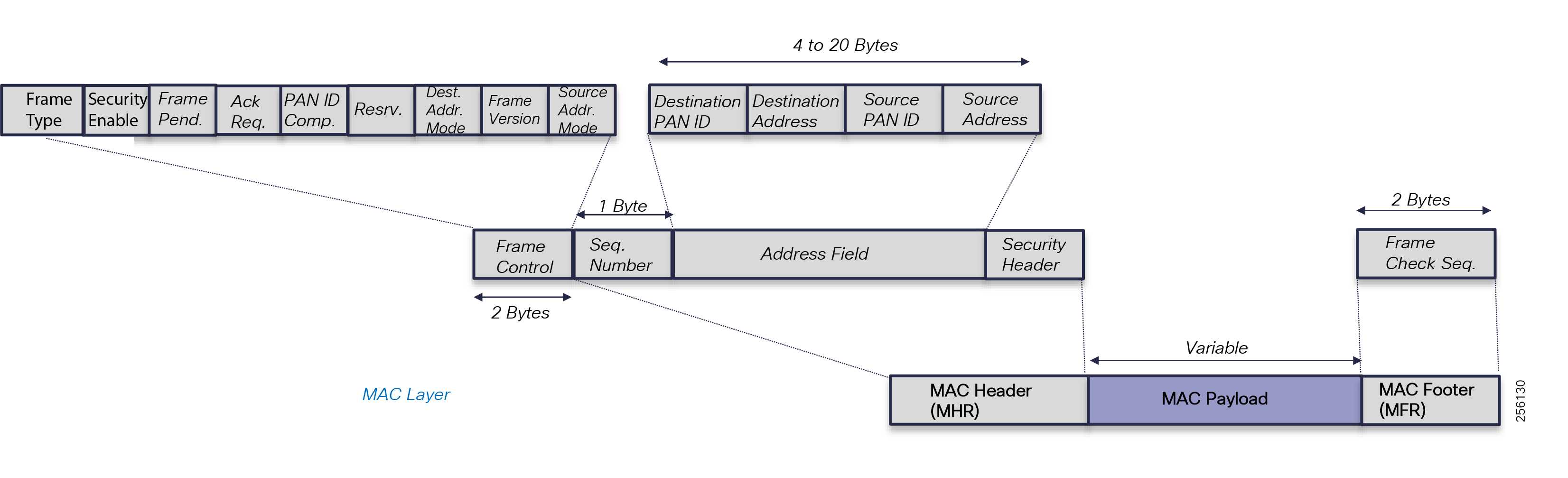

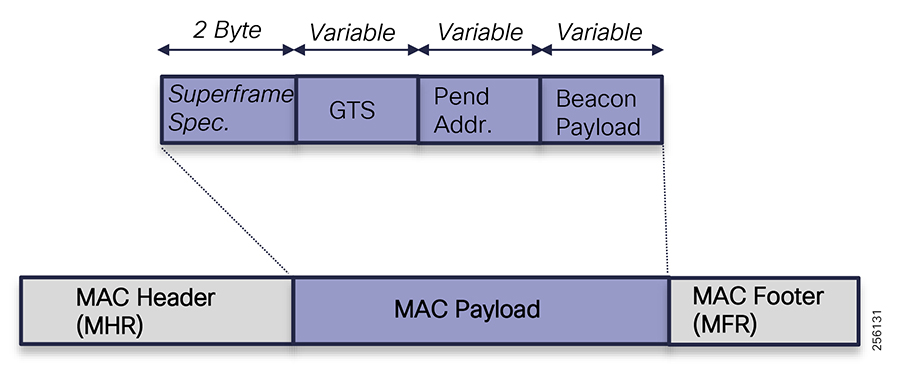

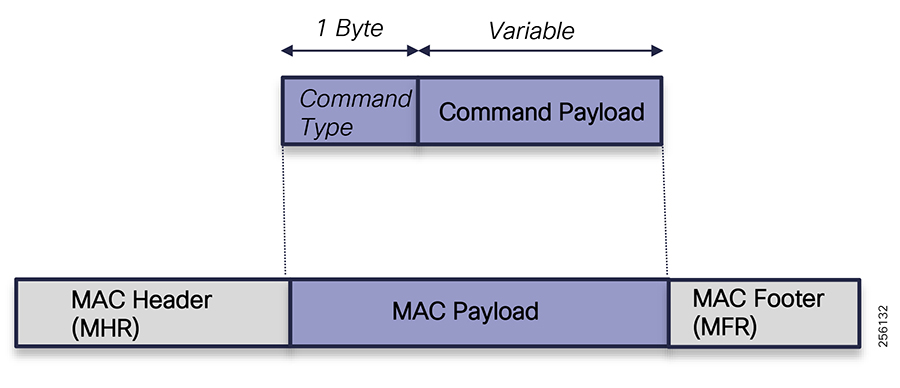

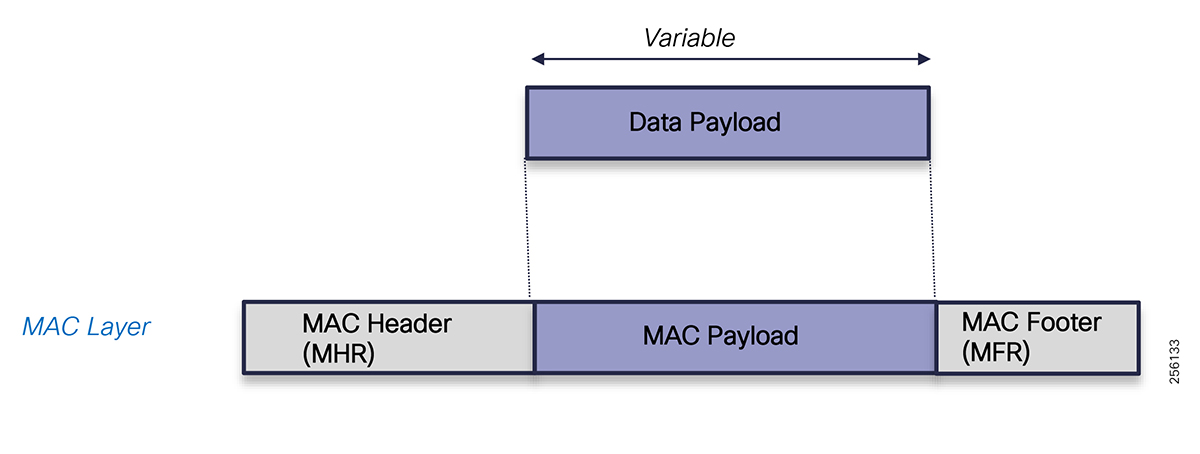

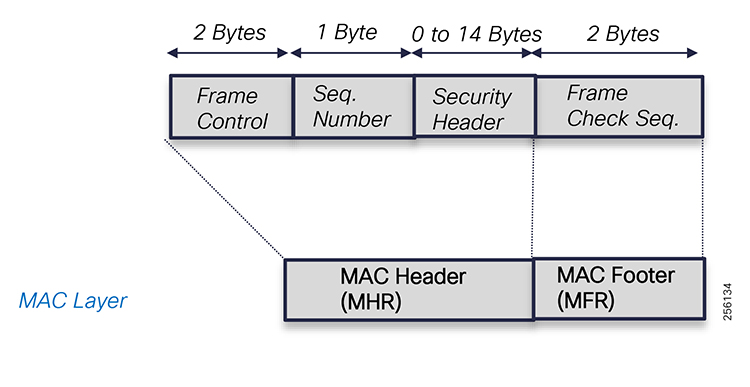

The solution encompasses multiple technology domains from infrastructure to switching and routing to security and network management. Readers should be familiar with the following transport technologies: Radio: IEEE802.15.4 based on 900MHz ISM band, IEEE 802.11 Wi-Fi and Cellular 3G/4G, IEEE 802.3 Ethernet, and overlay Virtual Private Networks: FlexVPN. The solution uses the following industry standard protocols: IPv4 and IPv6, 6LoWPAN, RPL, BGP, NAT (MAP-T), IKEv2, 802.1x, 802.11i, SNMP, and CoAP, including others.

Document Objective and Scope

This design guide provides a comprehensive explanation of the Cisco FAN system design based on standard unlicensed ISM 900MHz radio band frequency for Utilities Distribution Automation applications. It includes information about the system's architecture, possible deployment models, and guidelines for implementation and configuration. The guide also recommends best practices and potential issues when deploying the reference architecture.

Use Cases/Services/Deployment Models

This guide addresses the following technology use cases:

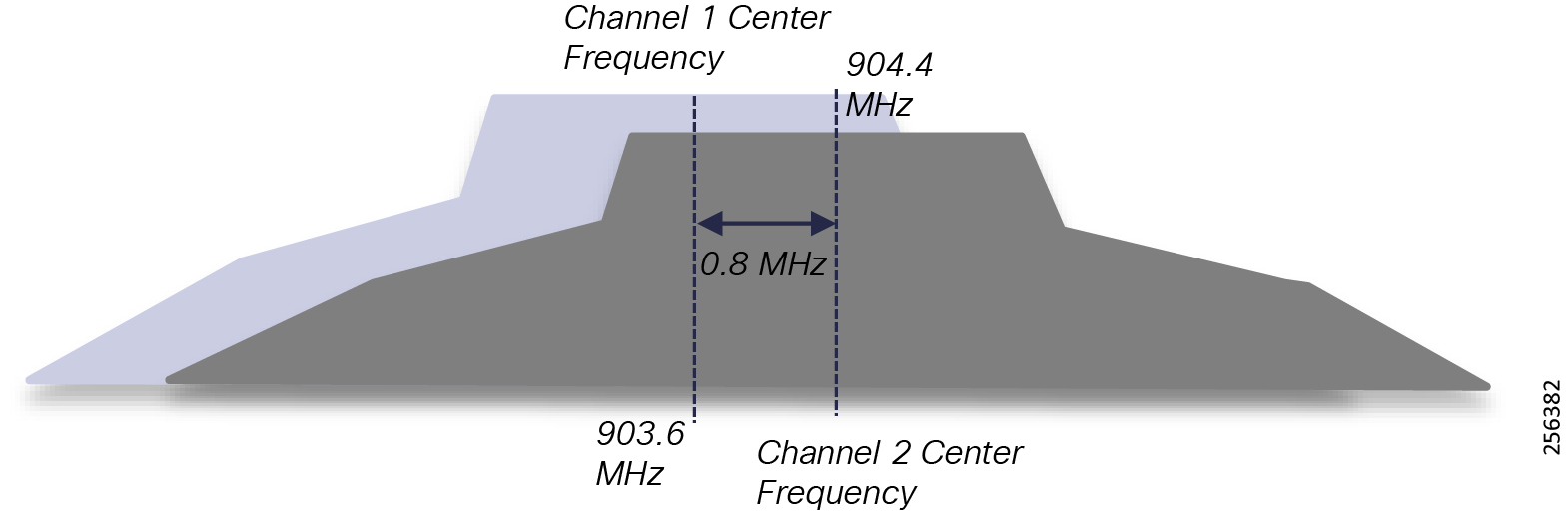

■![]() DA grid devices connectivity using unlicensed frequency radio: ISM 902-928MHz band available in certain countries in the Americas market, standard IEEE 802.15.4g/e based on Option 2 - OFDM modulation with higher physical data rates of up to 1.2Mbps.

DA grid devices connectivity using unlicensed frequency radio: ISM 902-928MHz band available in certain countries in the Americas market, standard IEEE 802.15.4g/e based on Option 2 - OFDM modulation with higher physical data rates of up to 1.2Mbps.

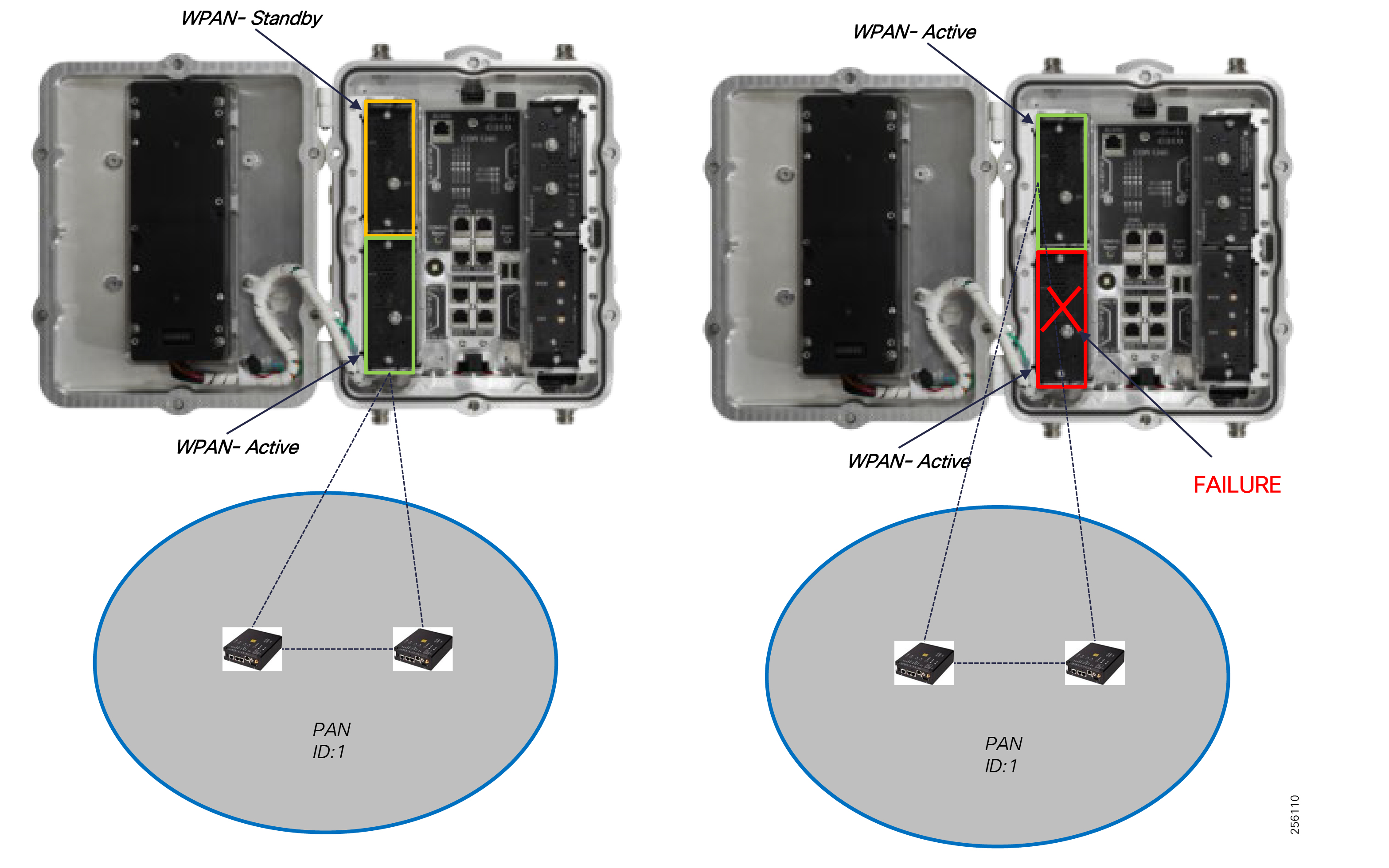

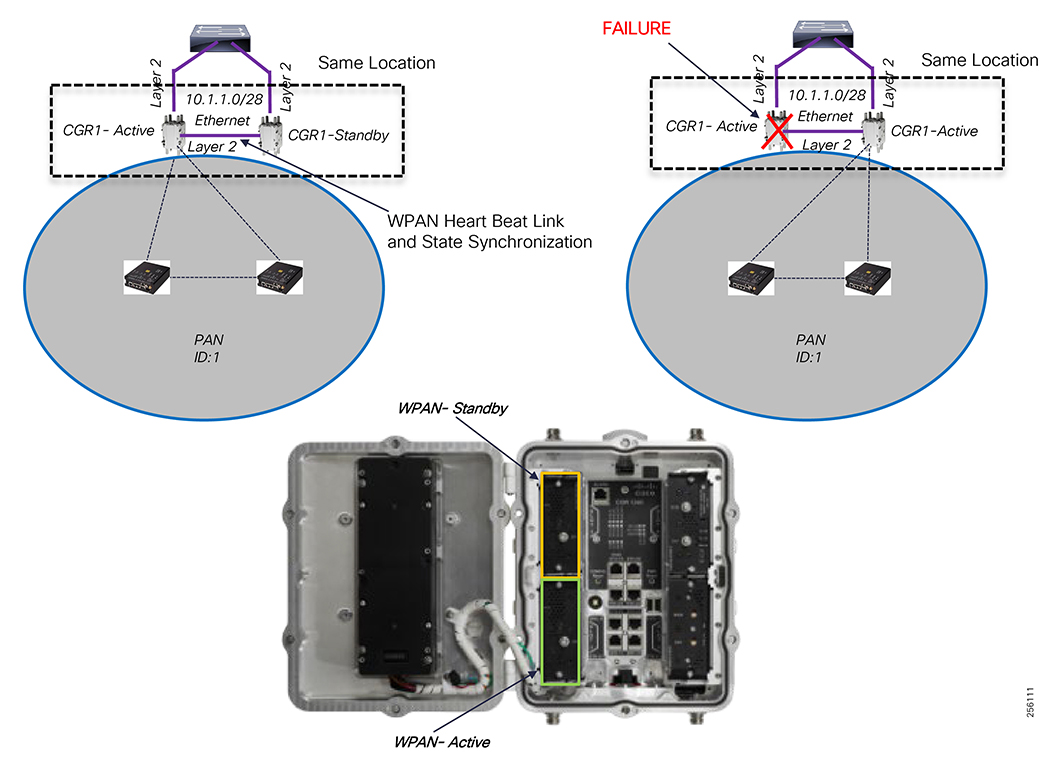

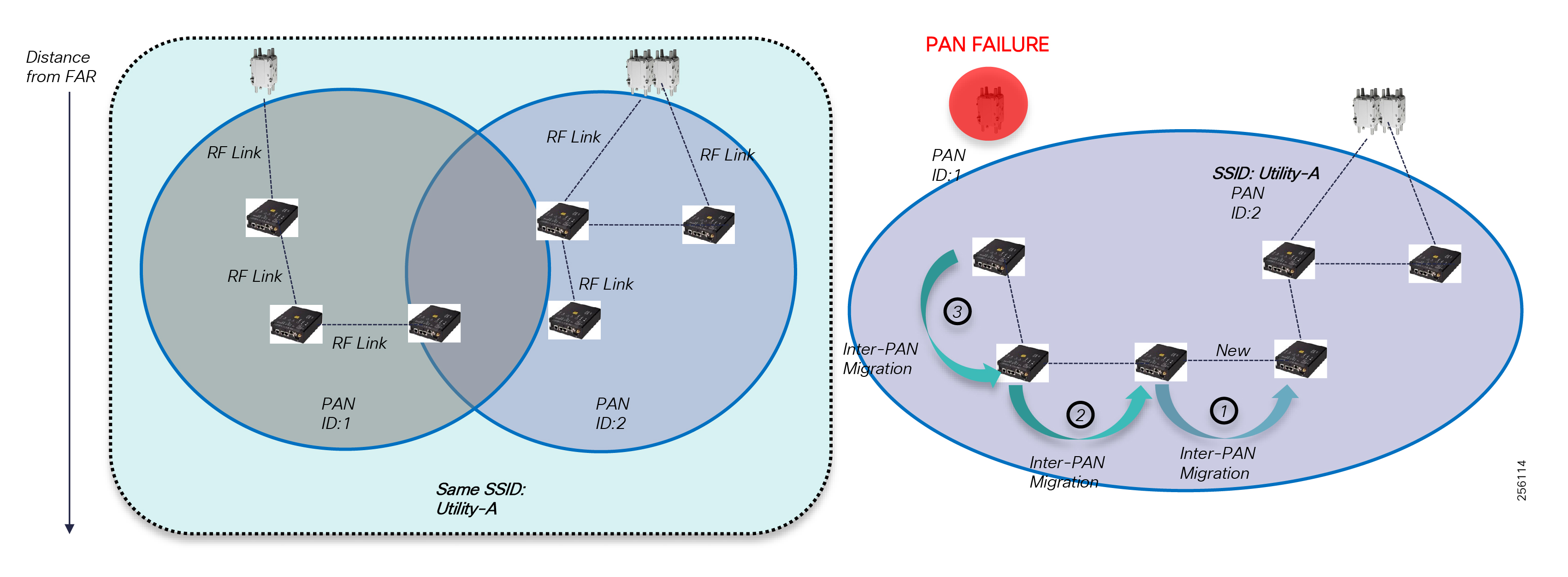

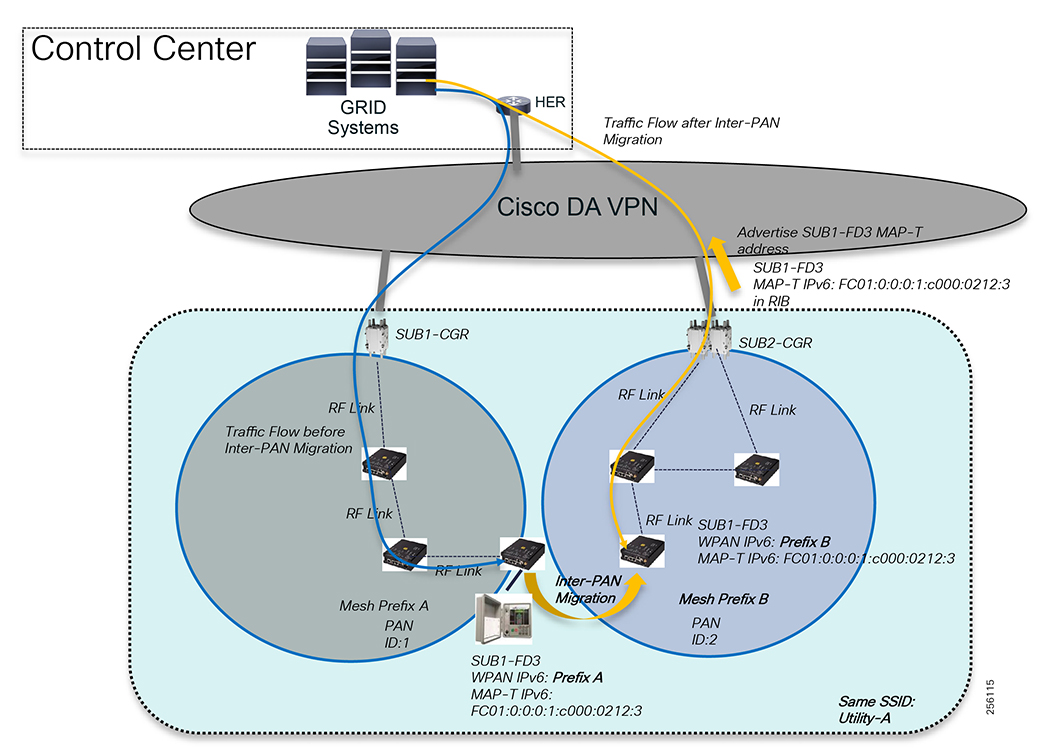

■![]() Radio Optimization features: Adaptive Modulation, Adaptive Data Rates, and High Availability for mesh coordinator.

Radio Optimization features: Adaptive Modulation, Adaptive Data Rates, and High Availability for mesh coordinator.

■![]() Edge Software Optimization features: for customer application edge deployment with dedicated resources.

Edge Software Optimization features: for customer application edge deployment with dedicated resources.

■![]() Advanced mesh IPv6 routing with peer-to-peer communication.

Advanced mesh IPv6 routing with peer-to-peer communication.

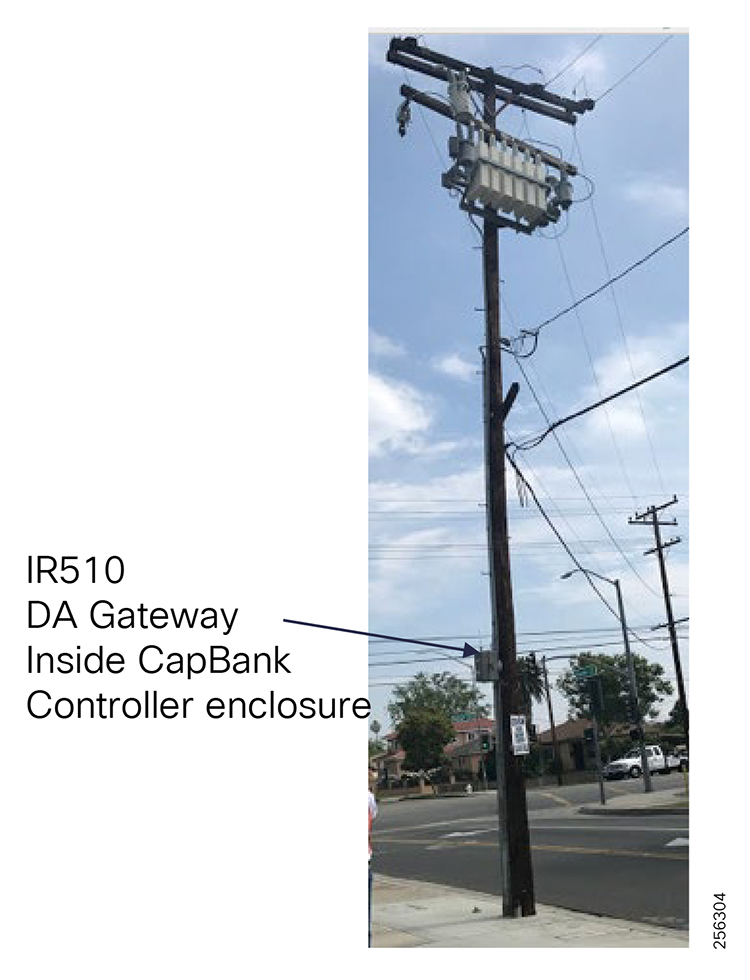

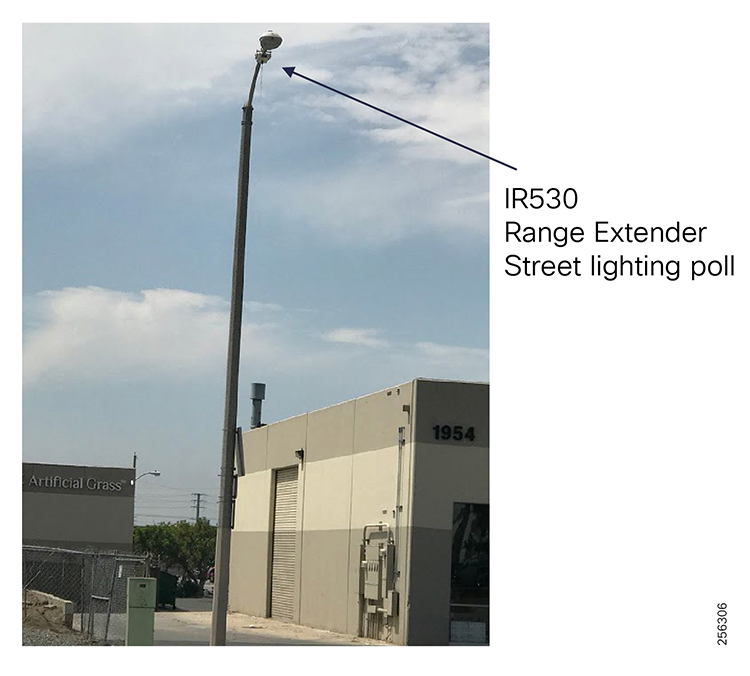

■![]() New products release CGR 1000 Wireless Module (WPAN), IR510 DA Gateway, and IR530 DA Range Extender.

New products release CGR 1000 Wireless Module (WPAN), IR510 DA Gateway, and IR530 DA Range Extender.

Distribution Automation Architecture for Utilities

This chapter includes the following major topic:

■![]() Distribution Automation Use Cases

Distribution Automation Use Cases

Cisco Systems has taken a holistic approach to Distribution Automation, and, in this release, the focus will be the Utility Distribution system. The goal of Distribution Automation in the Utility grid is real-time adjustment to changing loads, distributed generation, and failure conditions within the Distribution grid, usually without operator intervention. The IT infrastructure includes real-time data acquisition and communication with utility databases and other automated systems. Accurate modeling of distribution operations supports optimal decision making at the control center and in the field. This heavily depends on a highly reliable and high performing communications infrastructure. This document address these communications requirements as an architecture and addresses the key use cases below.

Distribution Automation technologies are commercially available for wide scale utility deployments. The key for the utility is to identify and unlock the value that these solutions provide. Applications that may have the greatest potential are those that directly affect operations and efficiency such as management of peak load via demand response, predictive technologies for advanced maintenance or equipment replacement and secure communications for equipment, and system restoration technologies.

Automated control of devices in distribution systems is the closed-loop control of switching devices, voltage controllers, and capacitors based on recommendations of the distribution optimization algorithms. These closed loop systems often have rigorous communications systems requirements that vary from manufacturer to manufacturer and by application. The communications system must meet the most rigorous standards and do so at scale. Volt/VAR control is one of the key applications to optimize the distribution grid for the utility.

A utilities fault may occur when a short circuit between two-phase lines occurs or for other reasons. The fault in any one of the lines can affect a large number of customers. Before the fault on the line can be corrected, it has to be identified and isolated from the large utility network. This identification and isolation is done by placing reclosers in the network. The reclosers are in turn connected to the recloser controller. The recloser controller is a connected gateway, which establishes a connection to the control center.

When a fault is identified, the reclosers perform the trip operation and the fault is isolated from the larger network. This trip operation can be automated or can be sent from the control center. Once the fault is corrected, the close operation on the circuit, which is done from the control center, can be executed. This is commonly referred to as FLISR, and is also one of the key use cases for a utility in a grid optimization effort.

This Distribution Automation architecture address the utility requirements for Volt/VAR and FLISR via a robust communications infrastructure that addresses the two predominant distribution automation schemes:

In Europe, portions of South America, and Asia, the distribution scheme is based on a more centralized transformer design and is commonly referred to as the Secondary Substation.

In North America, portions of South America, and along the Pacific Rim, the distribution scheme is based on a decentralized transformer model and this scheme will be referred to throughout this document as a Feeder Network.

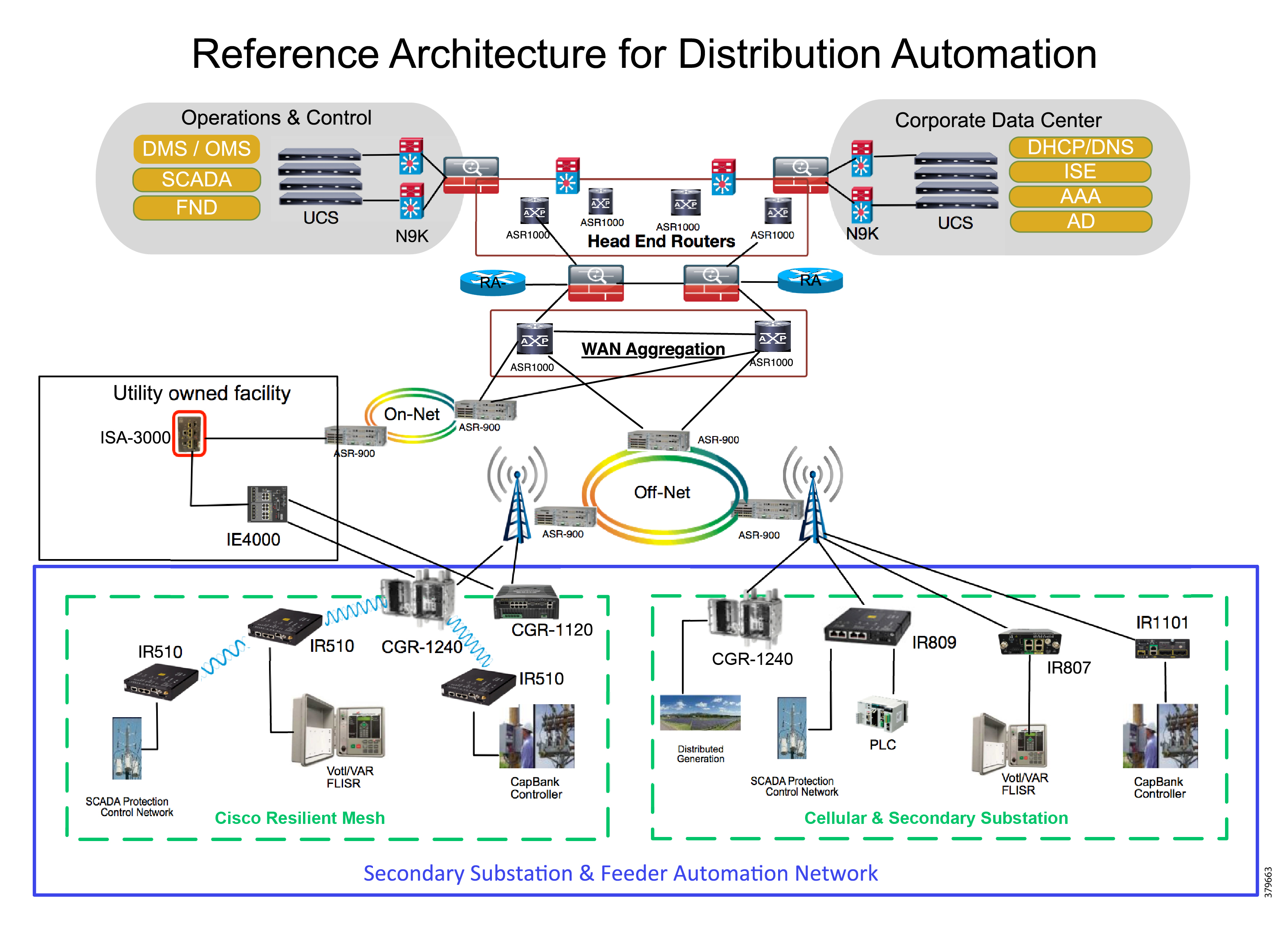

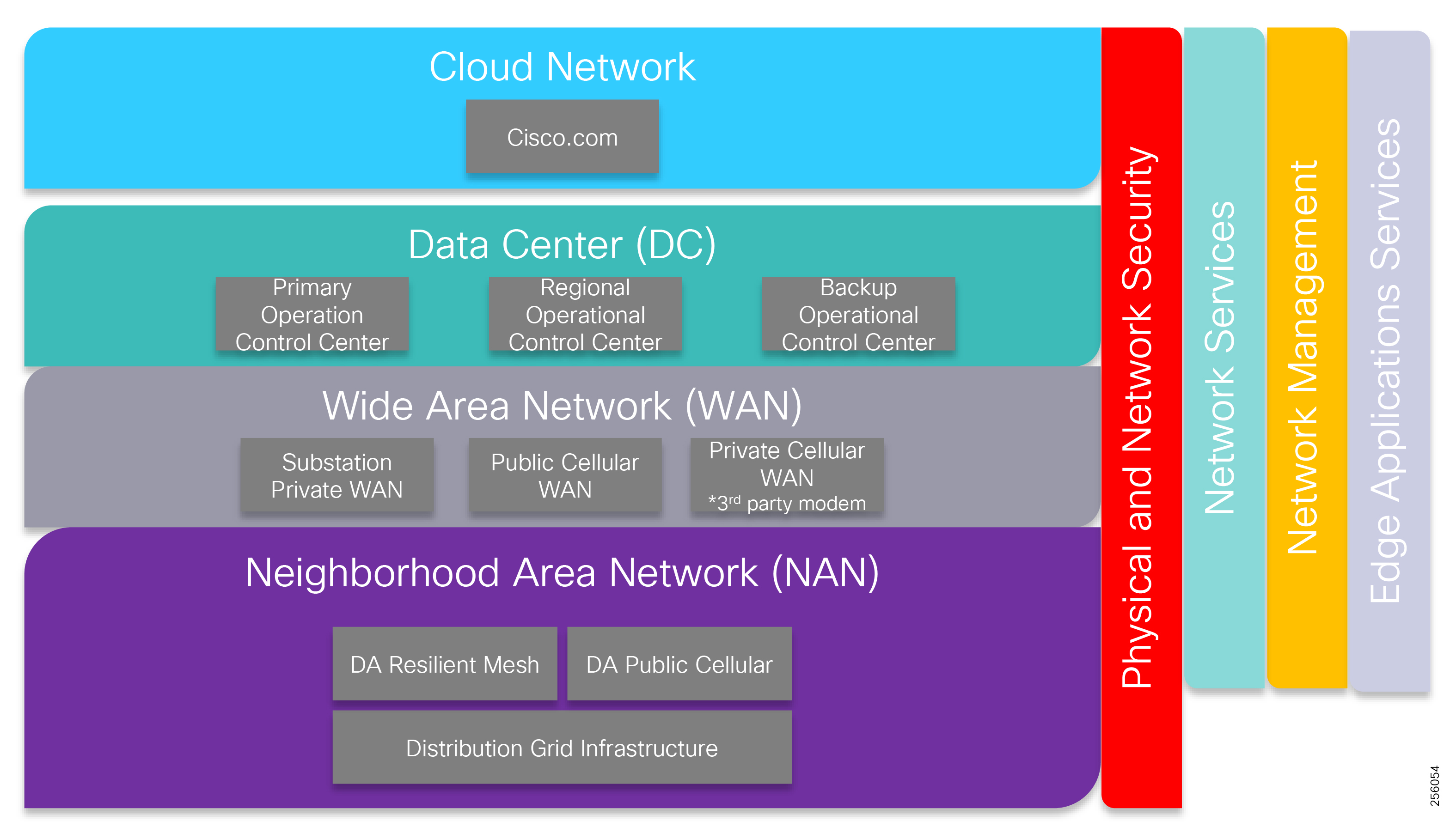

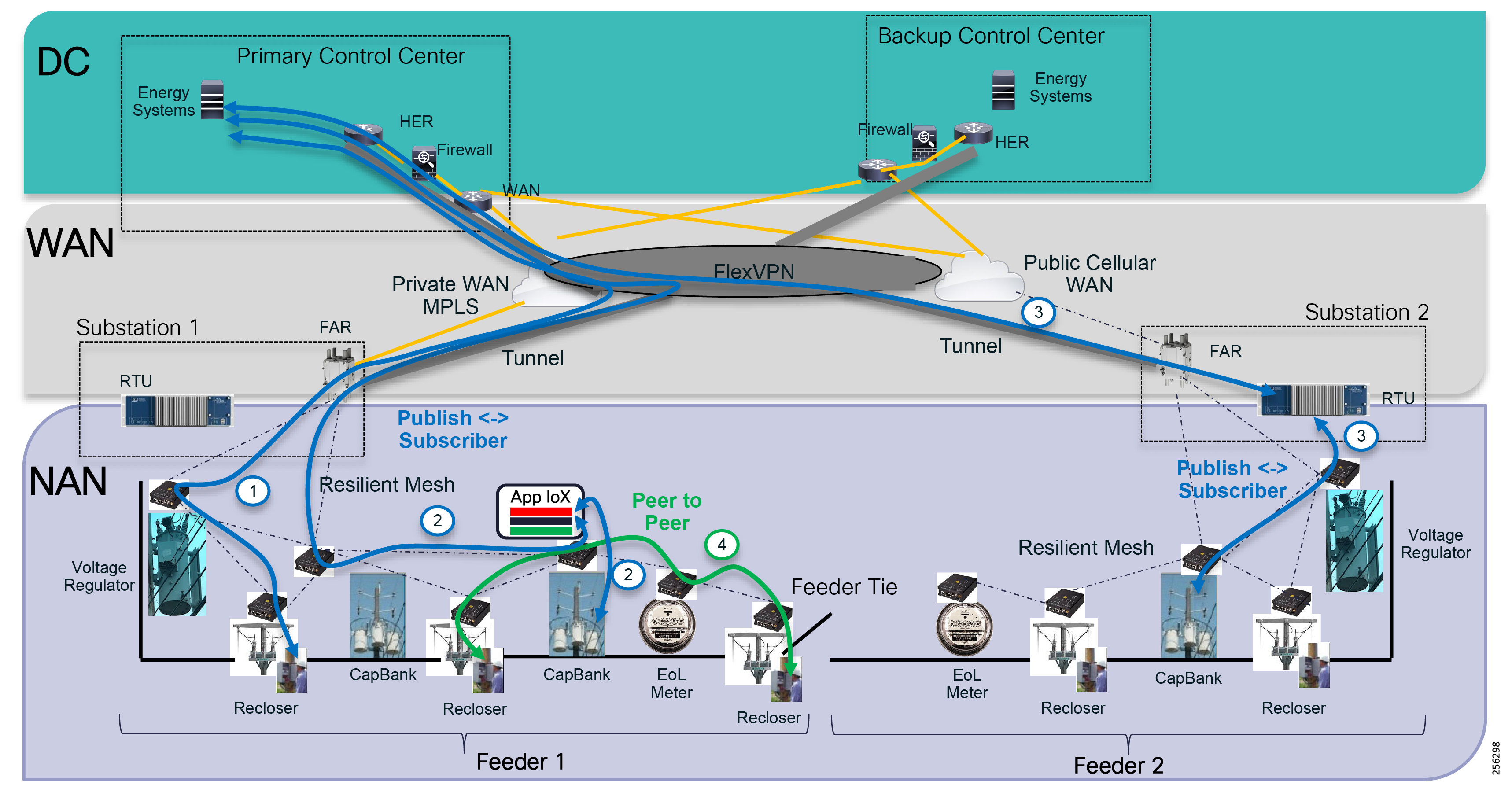

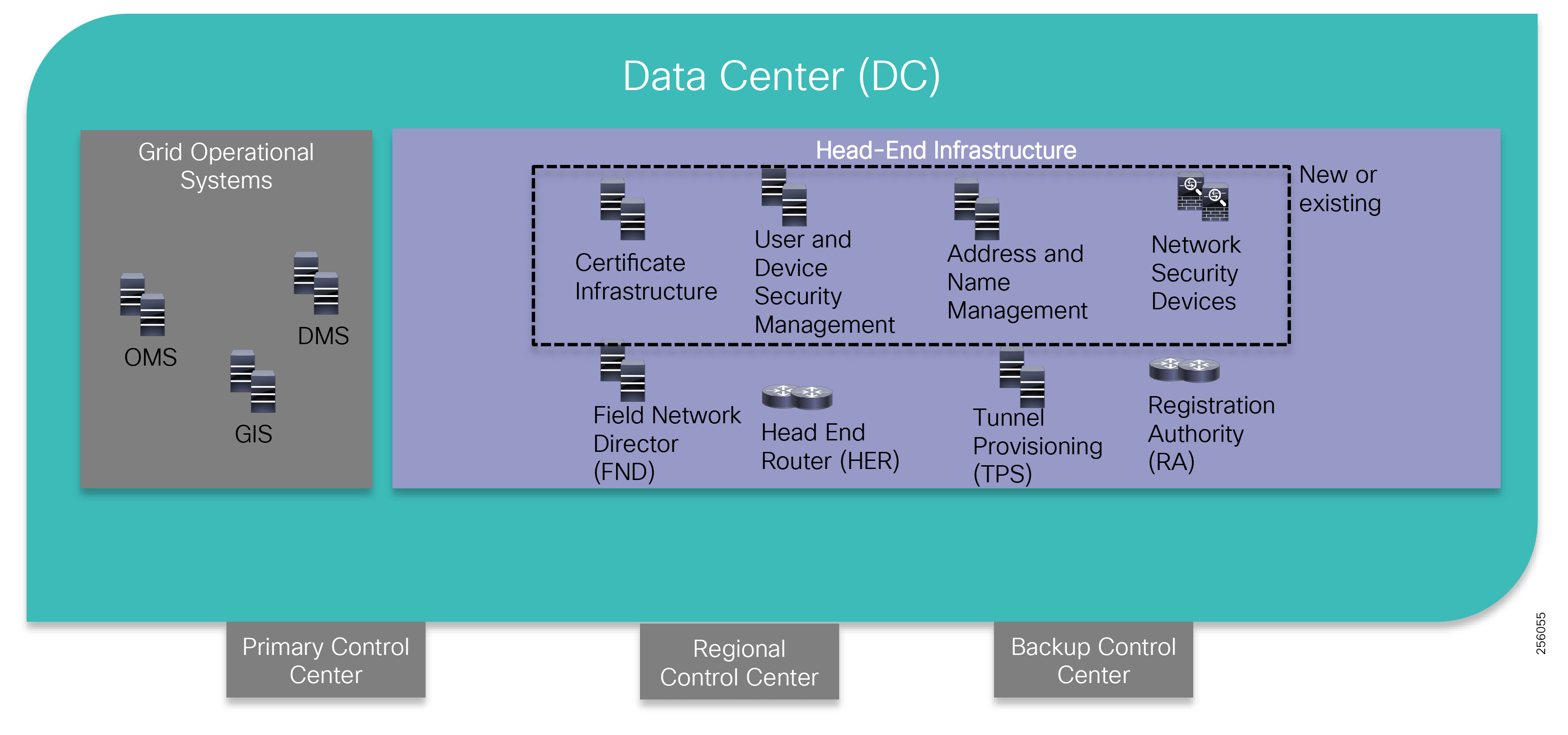

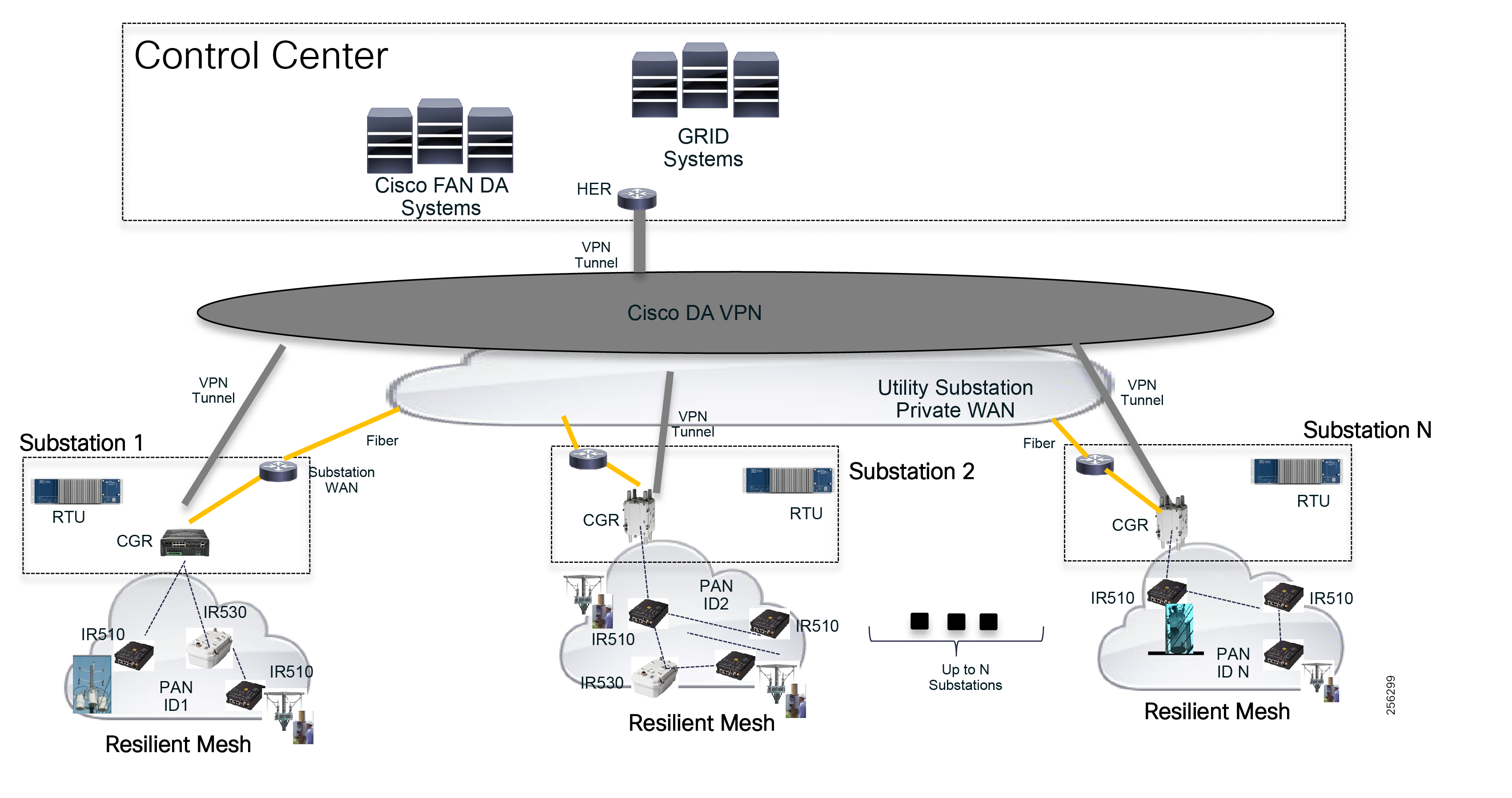

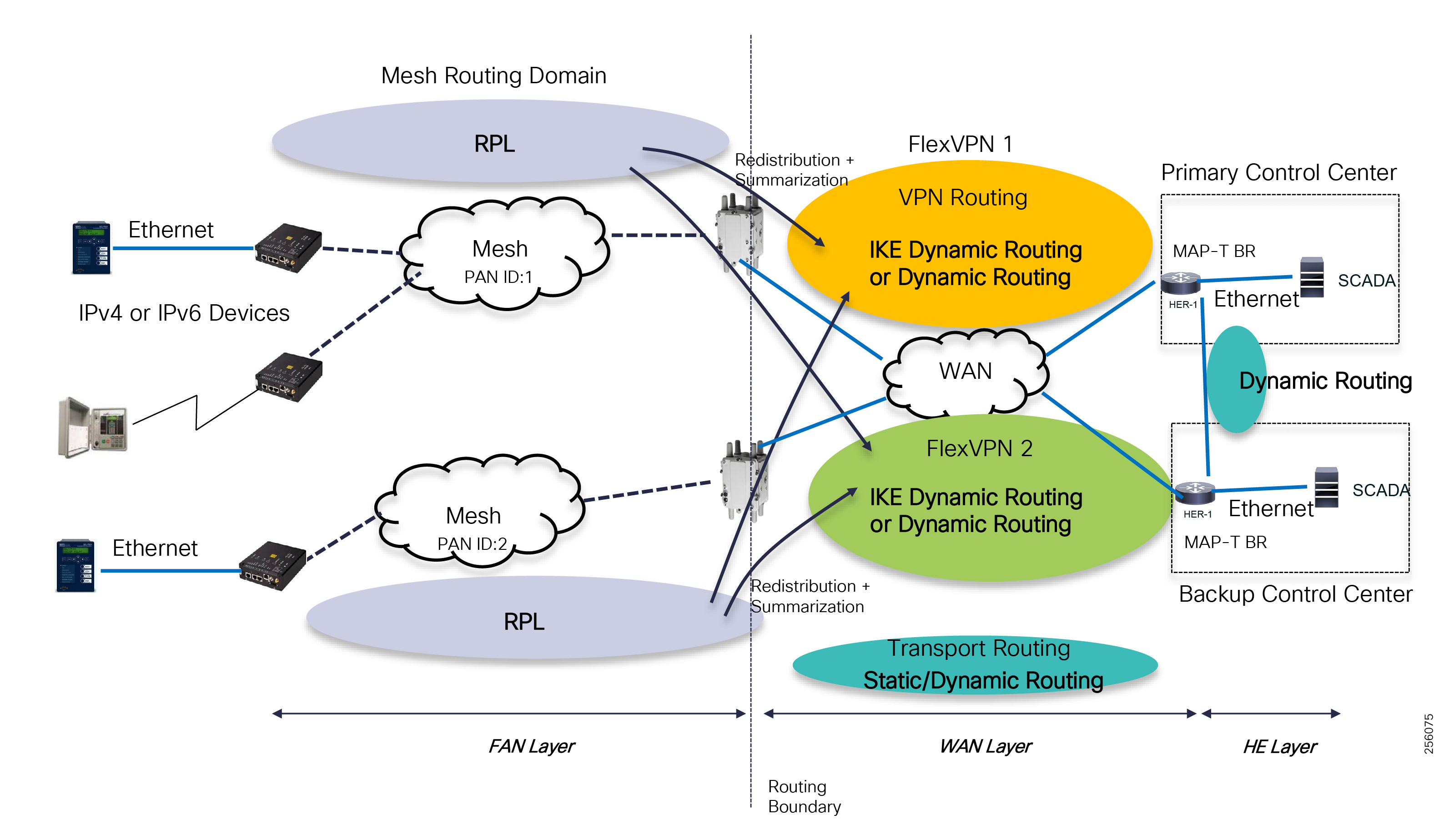

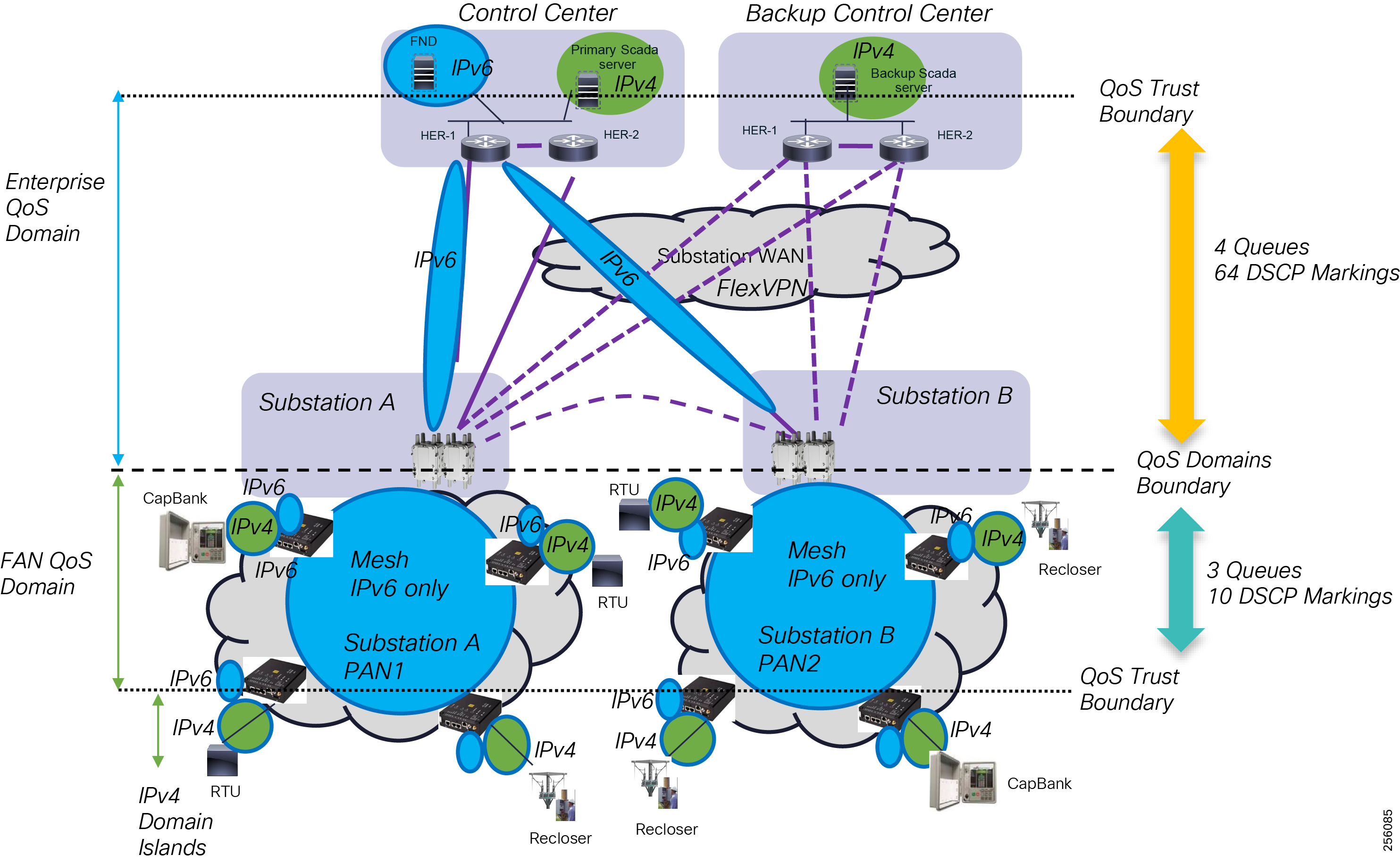

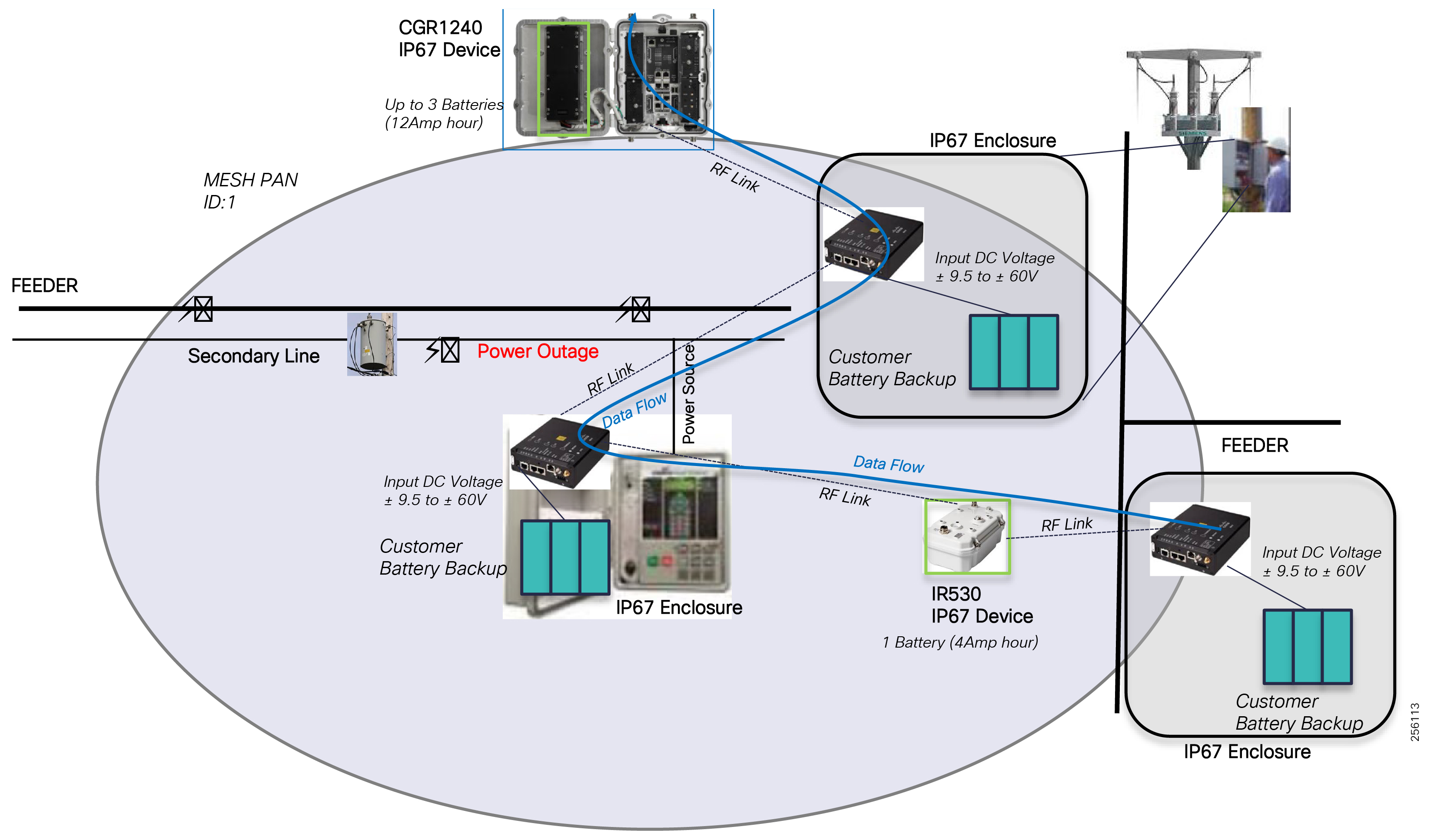

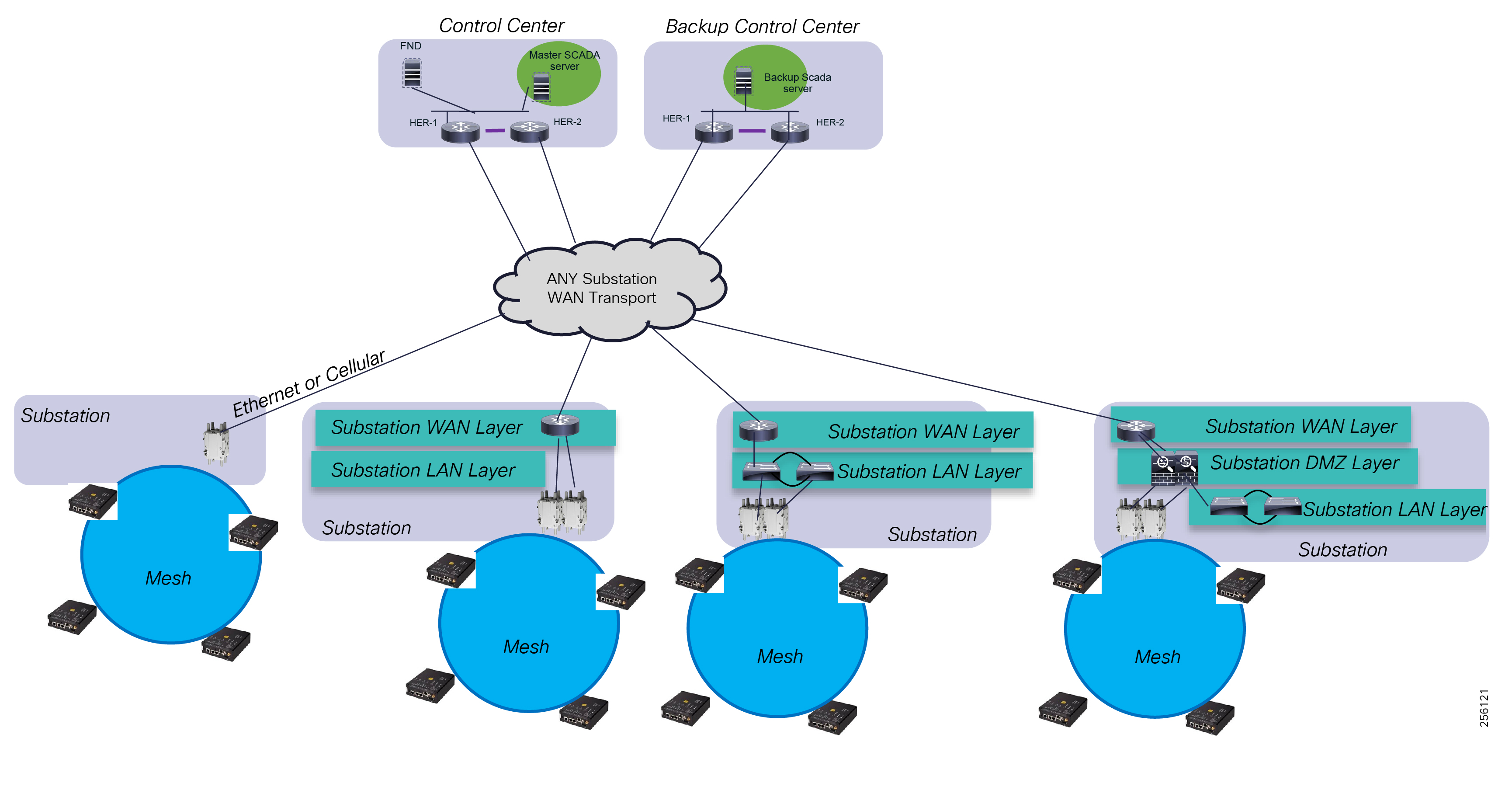

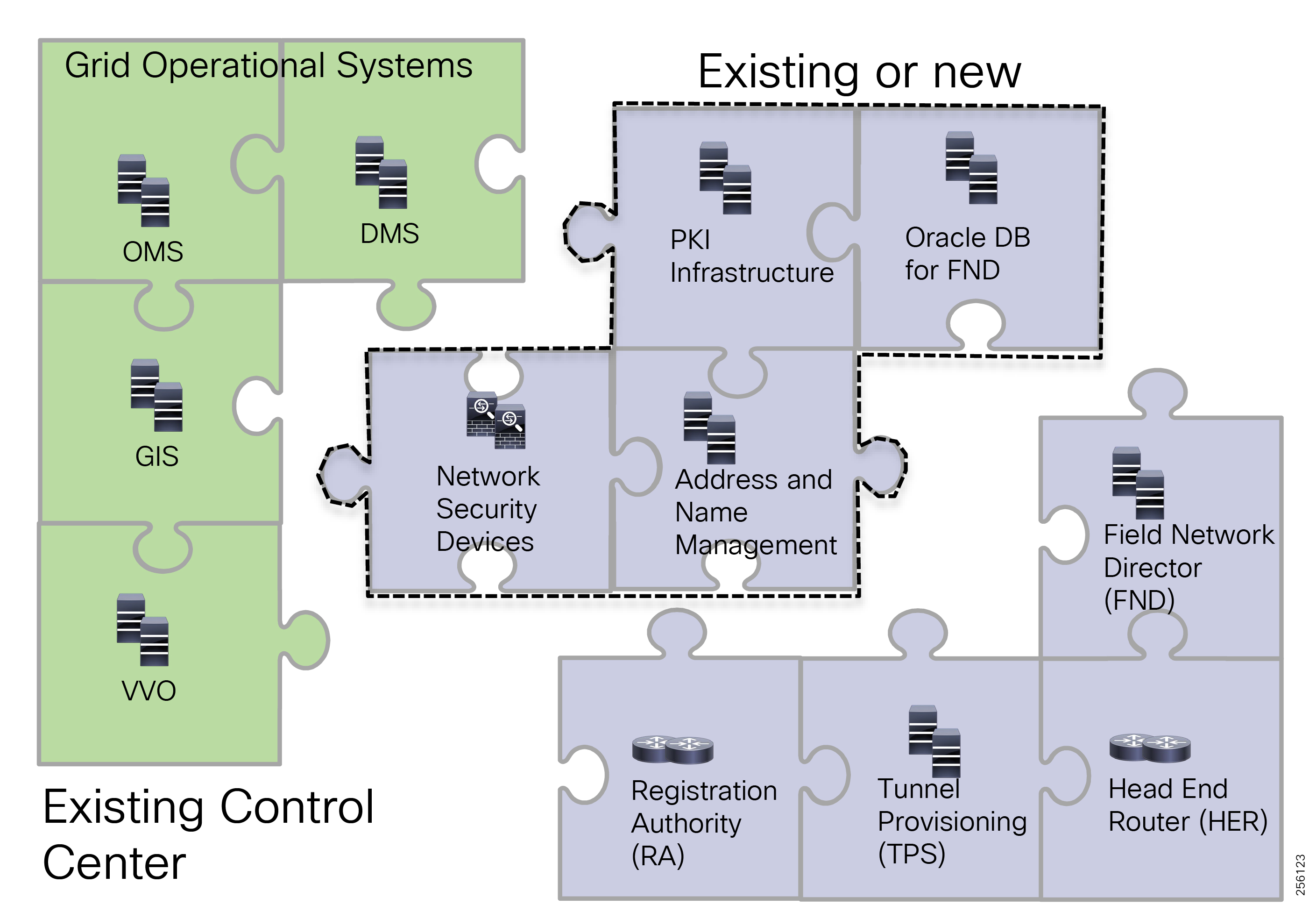

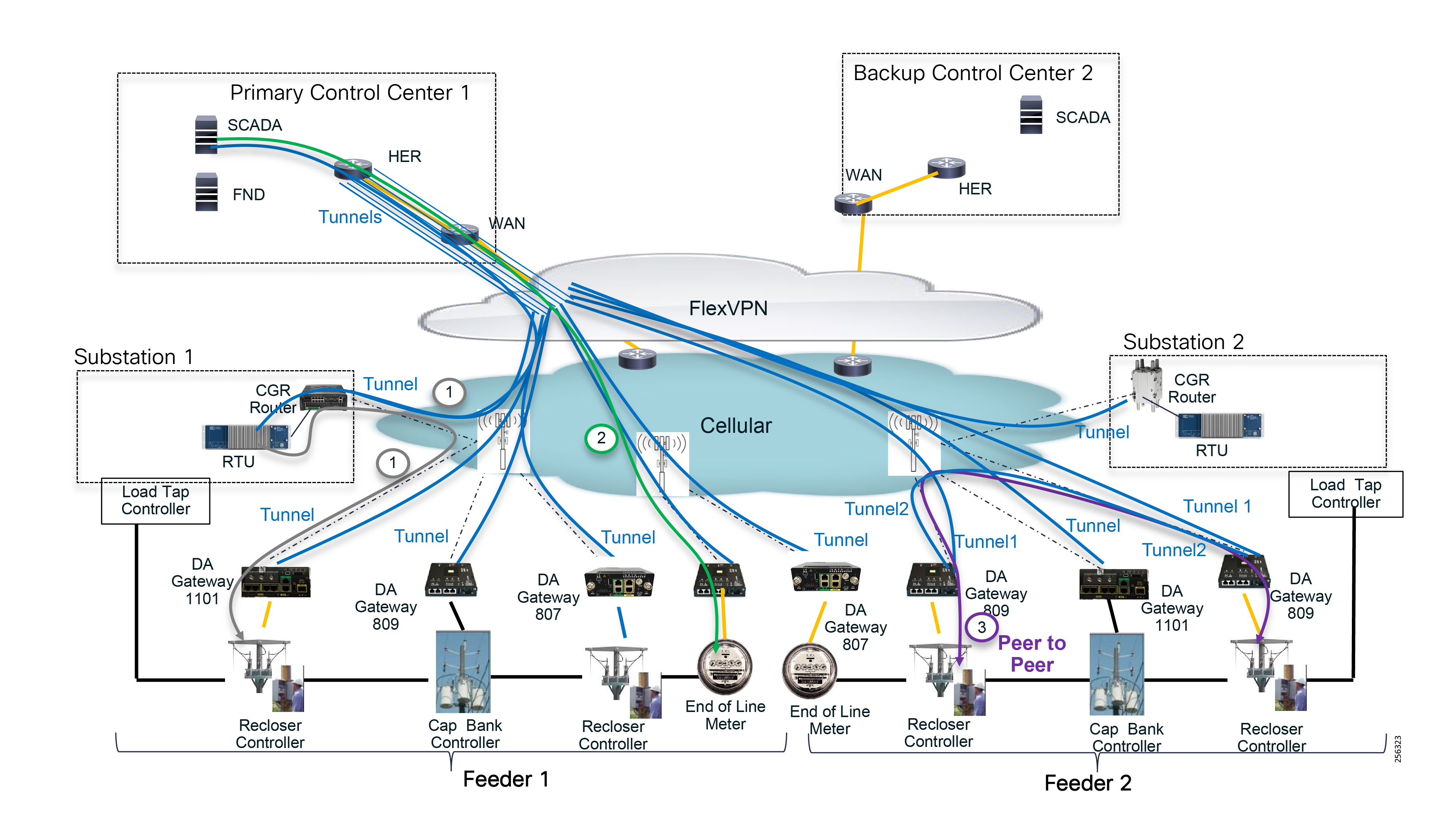

The architecture in Figure 1 leverages the latest technologies and recent enhancements to best address use cases and these topologies with a variety of cell-based gateways for the Secondary Substation as well as a combination of 900 Mhz mesh and cell gateways at the edge. The architecture addresses the requirements for these edge services and communications, including the edge as NAN, the backhaul as WAN, and the Operations and Control Centers commonly referred to as the Headend.

Figure 1 Reference Architecture for Distribution Automation

The Headend provides aggregation and security for and between the distribution automation applications typically at the Utility control center. This architecture leverages a secure WAN aggregation for scalability since feeder sections may scale to hundreds or more devices with the DA network scaling to thousands of feeder segments and Secondary Substation networks with over 100,000 nodes.

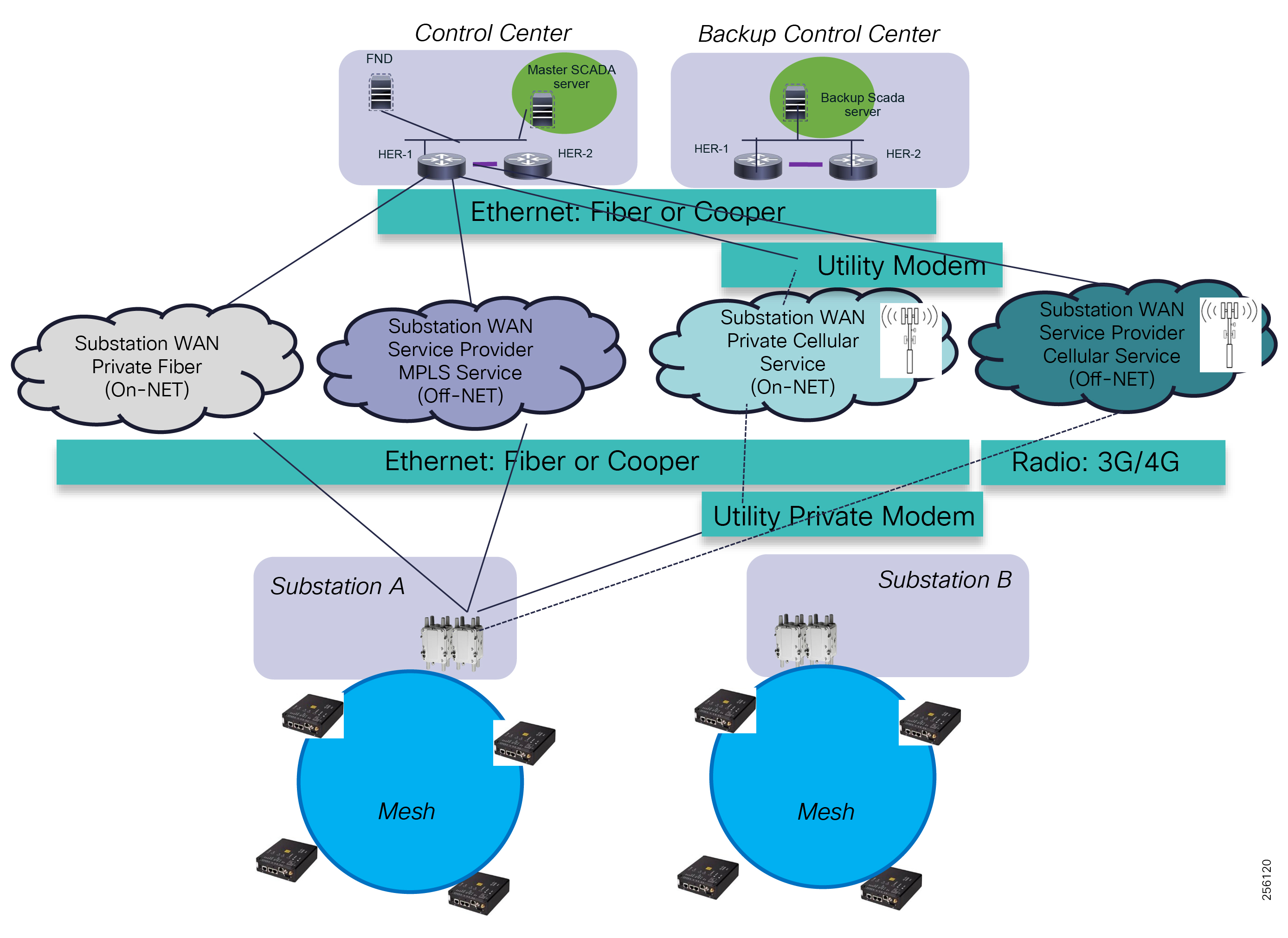

As part of this architecture, the WAN segment is referred to in two modes: On-Net and Off-Net:

■![]() On-Net is a high speed communications network owned and operated by the utility; examples include SDH/SONET, Carrier Ethernet, or MPLS as the most common.

On-Net is a high speed communications network owned and operated by the utility; examples include SDH/SONET, Carrier Ethernet, or MPLS as the most common.

■![]() On the other hand, the Off-Net network is a service provider-leveraged network that can be based on the same technologies but as a shared service that often includes pre-negotiated service level agreements.

On the other hand, the Off-Net network is a service provider-leveraged network that can be based on the same technologies but as a shared service that often includes pre-negotiated service level agreements.

The WAN segment for DA networks is often a cellular backhaul connection because building out a private network in numerous and remote locations, especially in the Secondary Substation model, is frequently cost prohibitive. The NAN Mesh offers opportunities to leverage the On-Net network as backhaul when the radio network gateway can be co-located at a utility-owned facility such as a substation or depot.

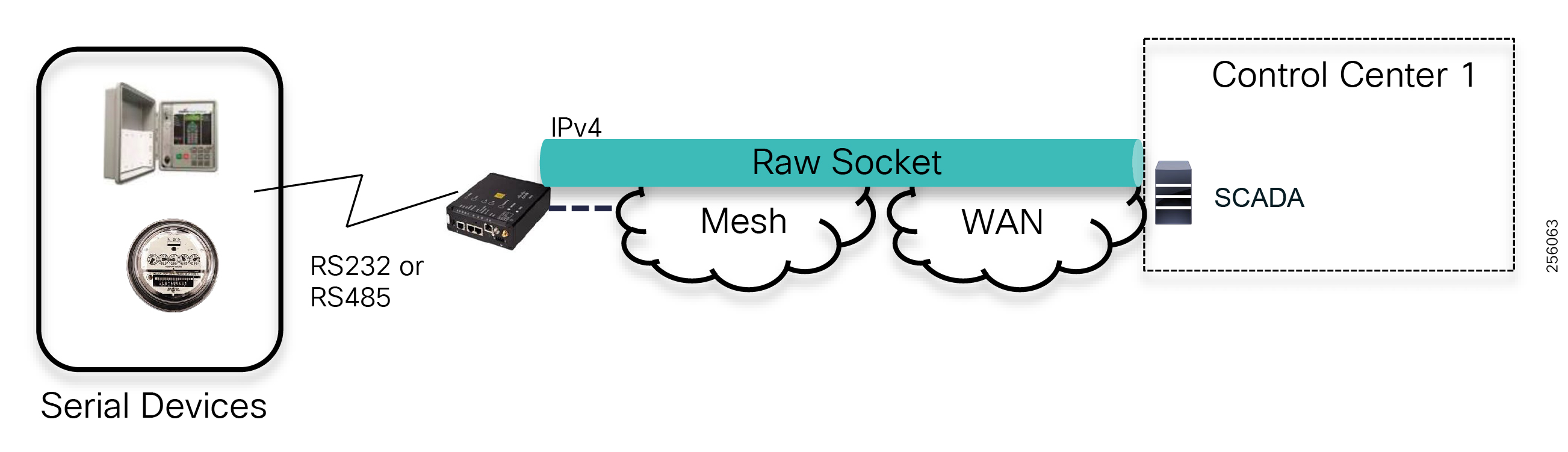

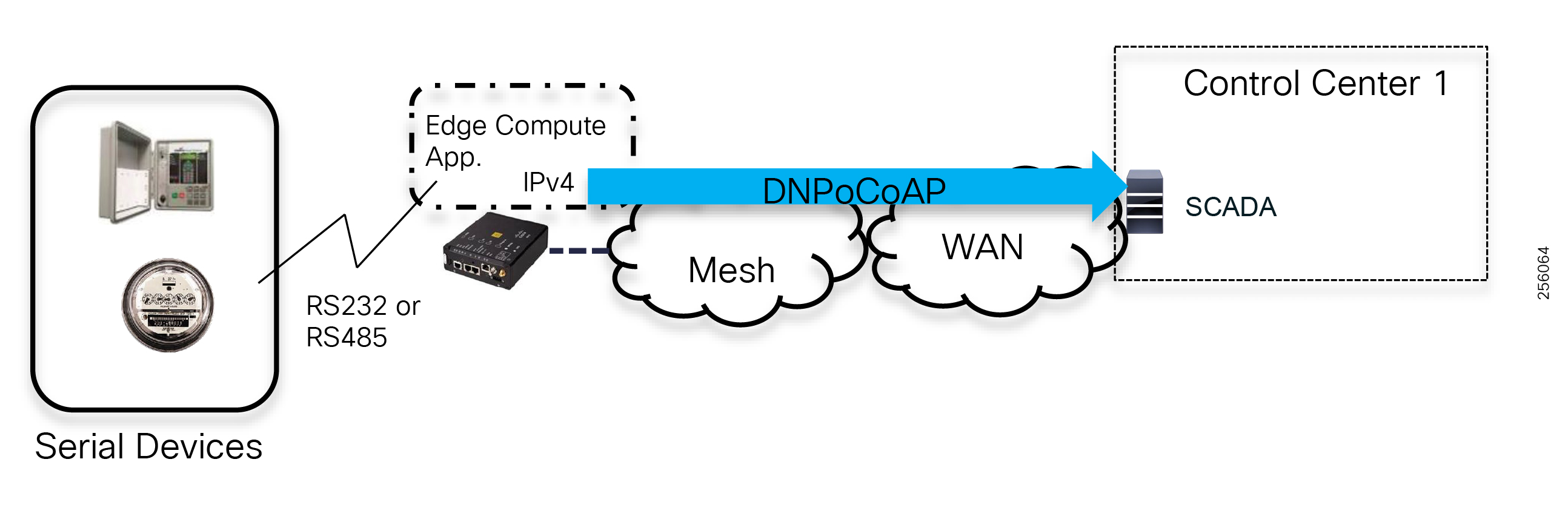

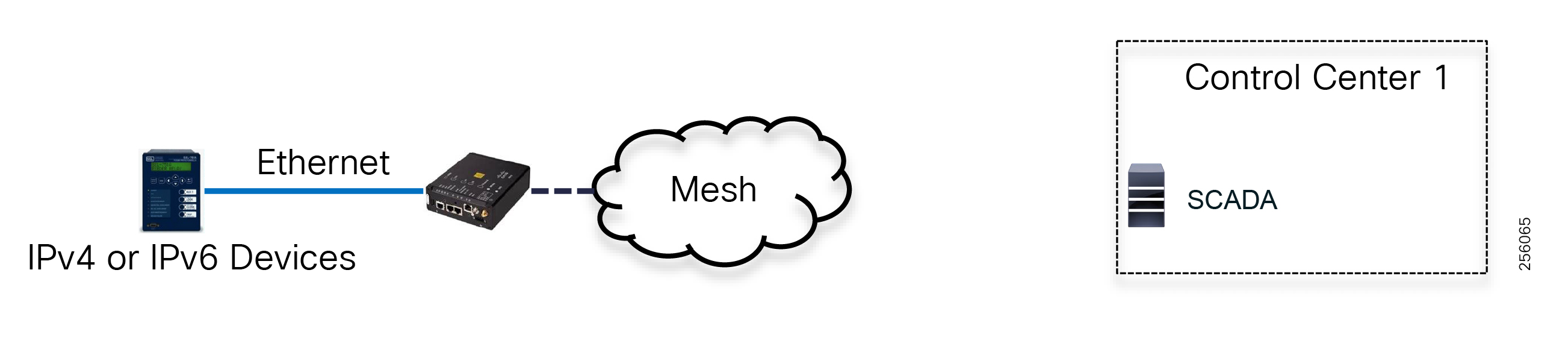

The edge or the NAN is built on a small form factor gateway or NAN router connected to the edge device such as a Capacitor Bank Controller (CBC) or voltage line monitor based on application or service. The connection to the edge device is often serial, but is rapidly moving to Ethernet. The NAN router can be configured to deliver edge services such as adaptation for serial connections via raw socket encapsulation or translation from serial protocols like IEC-101 to the packet-based IEC-104 protocol. The NAN router also provides security services such as 802.1x port-based authentication, encryption, and routing with possible alternate backhaul options, thus providing a secure connection for the edge device to the control center. The backhaul in the case of Secondary Substations is most often cellular with some satellite or DSL options.

Cisco Resilient Mesh is the latest version of the 900 Mhz Connected Grid Mesh radio with significant performance improvements now applicable for many Distribution Automation applications and use cases. However, it is recognized that Resilient Mesh may not be applicable for all use cases. The Distribution Feeder network will likely be a combination of mesh where the 900 Mhz radio network is feasible and hop count and latency meet application requirements with cellular to augment based on hop count, application performance, or latency requirements.

Distribution Automation Use Cases

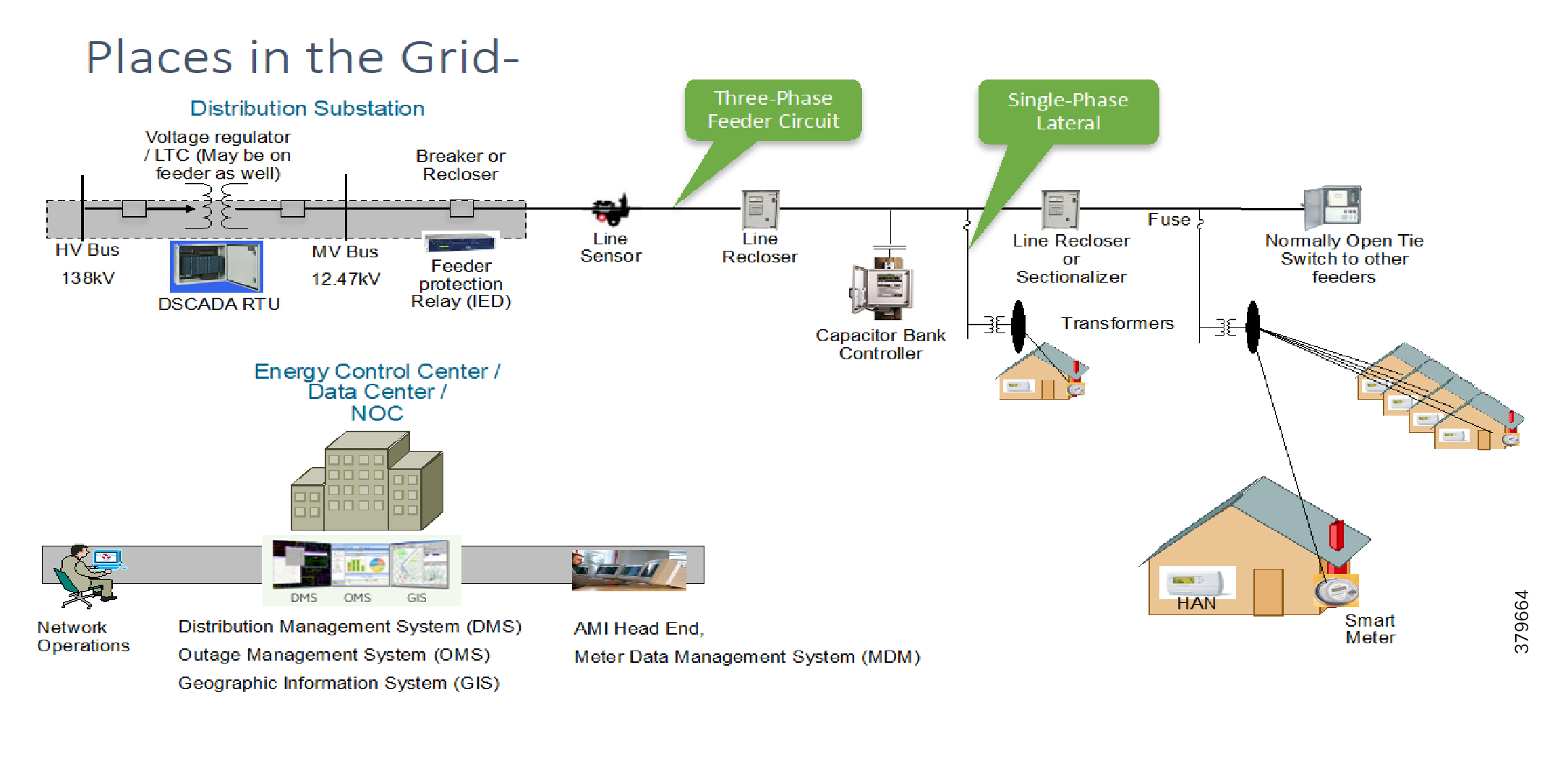

Distribution Automation (DA) refers to the monitoring and control of devices located on the distribution feeders, such as line reclosers, load break switches, sectionalizers, capacitor banks and line regulators, and devices located in the distribution substation. DA is an overlay network deployed in parallel to the distribution feeder. It enables two-way communication between controllers used in the distribution feeder and the intelligence application that resides in the Utility control center or Secondary Substation for improving grid reliability, availability, and control. Figure 2 depicts a radial distribution feeder:

In Figure 2, the distribution feeder can be observed coming out of the Secondary Substation; various distribution automation controllers (IEDs) in the feeder, such as the recloser controller, voltage regular controller, and capacitor bank controller, are positioned along the distribution feeder. Key functions and operations of Distribution Automation include protecting the distribution system, managing the fault, measuring the energy usage, managing the assets, and controlling and managing system performance. European feeders are largely three-phase and most European countries have a standard secondary voltage of 220, 230, or 240 V.

Use Cases

The following use cases of Distribution Automation will be discussed in this design guide:

■![]() Fault Location Isolation and Service Restoration (FLISR)

Fault Location Isolation and Service Restoration (FLISR)

The radial feeder distribution system design is considered for Volt/VAR regulation use cases and the parallel feeder distribution system is considered for FLISR use cases. Cisco DA Gateways are very well suited for other feeder deployments such as mesh and loop distributed feeder designs.

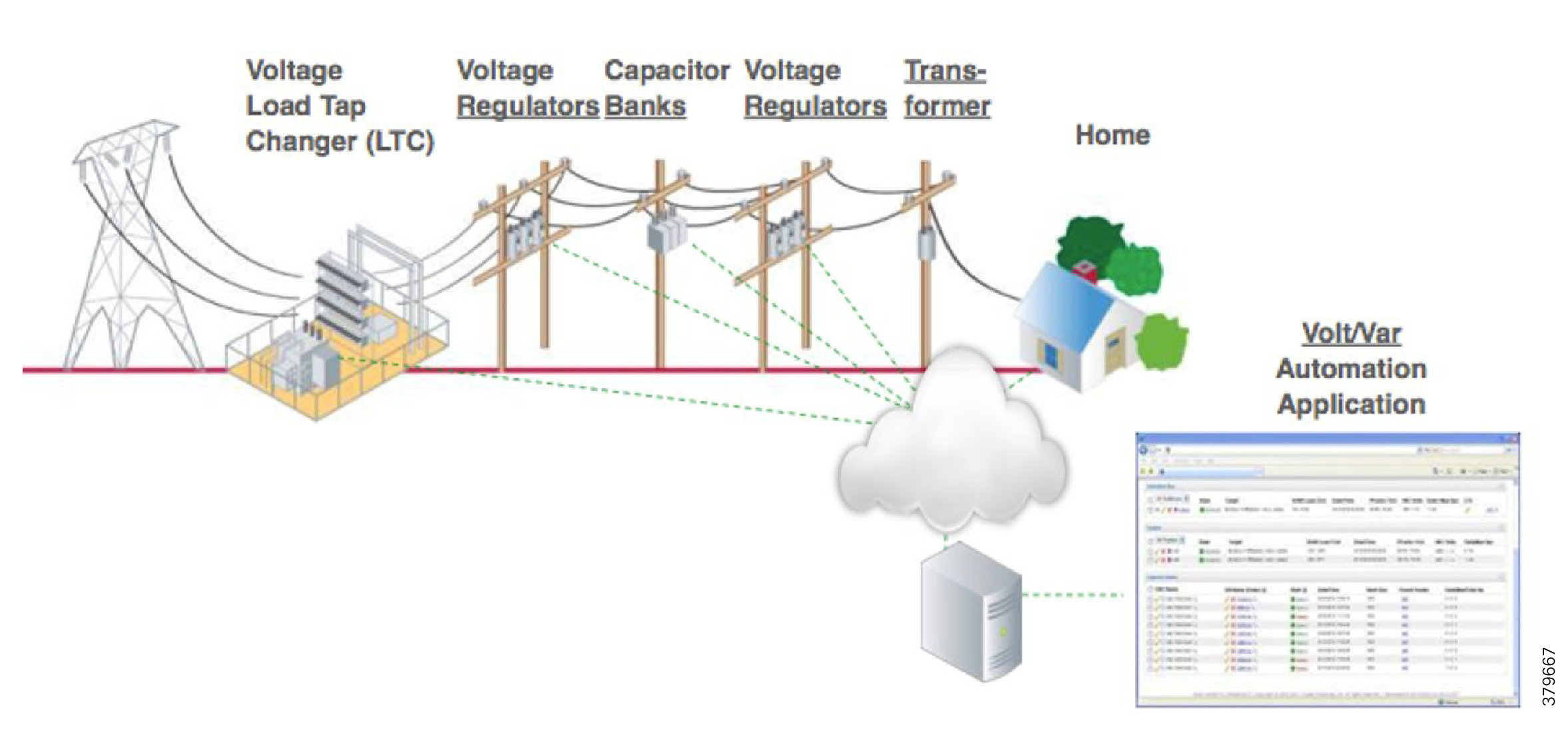

Volt/VAR Control Use Cases and Benefits

This use case address automating dynamic and efficient delivery of power. Utilities look at achieving large saving by enhancing the efficiency of their power distribution infrastructure-in other words, improving the effectiveness of the flow of electricity. In order to evaluate the process, it is important to review the differences between what is called real power and reactive power.

■![]() Real power is used to run all lights, devices and production lines. It is the power that "does the work."

Real power is used to run all lights, devices and production lines. It is the power that "does the work."

■![]() Reactive power does not contribute anything to doing work, but it does cause conductors to heat up and it takes up a certain amount of "space" in the wires.

Reactive power does not contribute anything to doing work, but it does cause conductors to heat up and it takes up a certain amount of "space" in the wires.

The more reactive power flowing on a line, the less "room" there is for real power, and the less efficient is the distribution system.

Today, in order to eliminate or at least minimize reactive power flows, utilities have deployed on their local distribution systems devices, such as capacitor banks or special transformers that are typically located at substations or on the feeder. These devices work to keep reactive power flows down, making the full capacity of the conductor available for the real power. This process is known as Volt/VAR regulation or control:

■![]() Power Factor Regulation/VAR Compensation—Improves efficiency of energy supply by ensuring voltage and current are in phase when supplied to the customer.

Power Factor Regulation/VAR Compensation—Improves efficiency of energy supply by ensuring voltage and current are in phase when supplied to the customer.

■![]() Conservation Voltage Regulation—At times of peak load, ensure the minimum required voltage level is supplied to the customer.

Conservation Voltage Regulation—At times of peak load, ensure the minimum required voltage level is supplied to the customer.

■![]() Volt/VAR Control—Power factor regulation + Conservation voltage regulation.

Volt/VAR Control—Power factor regulation + Conservation voltage regulation.

Volt/VAR Actors

Figure 3 depicts various actors used in the Volt/VAR use case. The actors used in the Volt/VAR use case are Load Tap Changers, Voltage Regulators, and Capacitor Bank Controllers (CBCs).

Voltage Regulator and Load Tap Controllers

Voltage regulation functions are performed using the Voltage Regulator/Load Tap Controller actors. Voltage can be raised or lowered based on load conditions. Voltage Regulators are types of transformers that make small adjustments to voltage levels in response to changes in load. They are installed in substations (where they are called load tap changers) and along distribution feeders to regulate downstream voltage. Voltage Regulators have multiple "raise" and "lower" positions and can automatically adjust according to feeder configurations, loads, and device settings.

Capacitor Bank Controllers

CBCs are used to supply reactive power. Utilities use capacitors to compensate for reactive power requirements caused by inductive loads from customer equipment, transformers, or overhead lines. Compensating for reactive power reduces the total amount of power that needs to be provided by power plants, resulting in a flatter voltage profile along the feeder and less energy wasted from electrical losses in the feeder. A distribution capacitor bank consists of a group of capacitors connected together. Capacitor banks are mounted on substation structures, distribution poles, or are "pad-mounted" in enclosures.

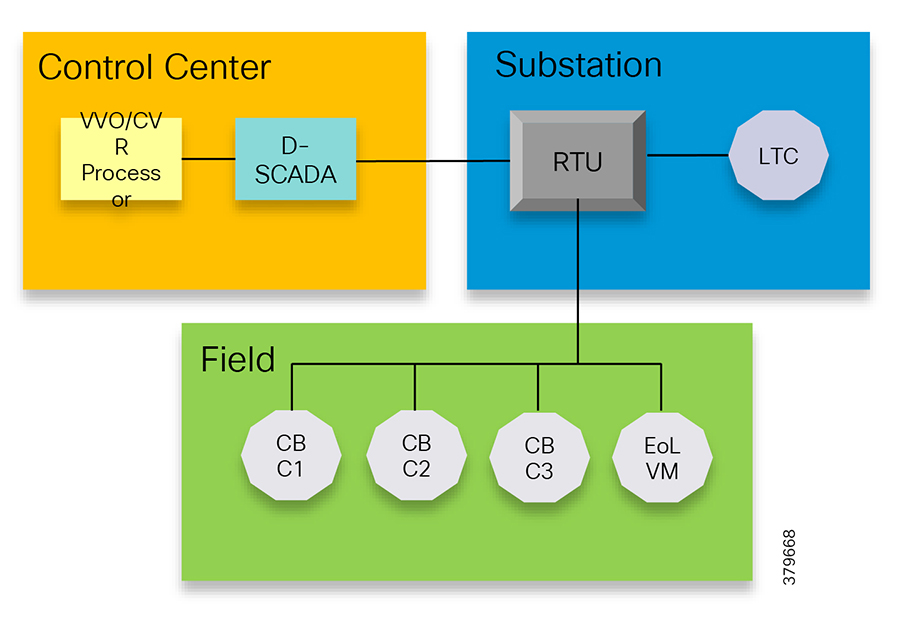

Volt/VAR Application Communication Flow

In Figure 4, Volt/VAR and Supervisory Control and Data Acquisition (SCADA) applications are hosted in the DSO control center and RTU and load tap controllers are located in the Secondary Substation. The remote terminal unit (RTU) acts as an outstation device that proxies the poll and/or control command to various field devices like the CBC and end-of-line voltage monitor. This guide covers the use case scenario where the Volt/VAR application flow between the Intelligent Electronic Device (IED) and SCADA happens via RTU and the distribution feeder type considered is radial. A direct application flow from field devices to the control center for the Volt/VAR use case will be covered in future guides.

Figure 4 Volt/VAR Block Diagram

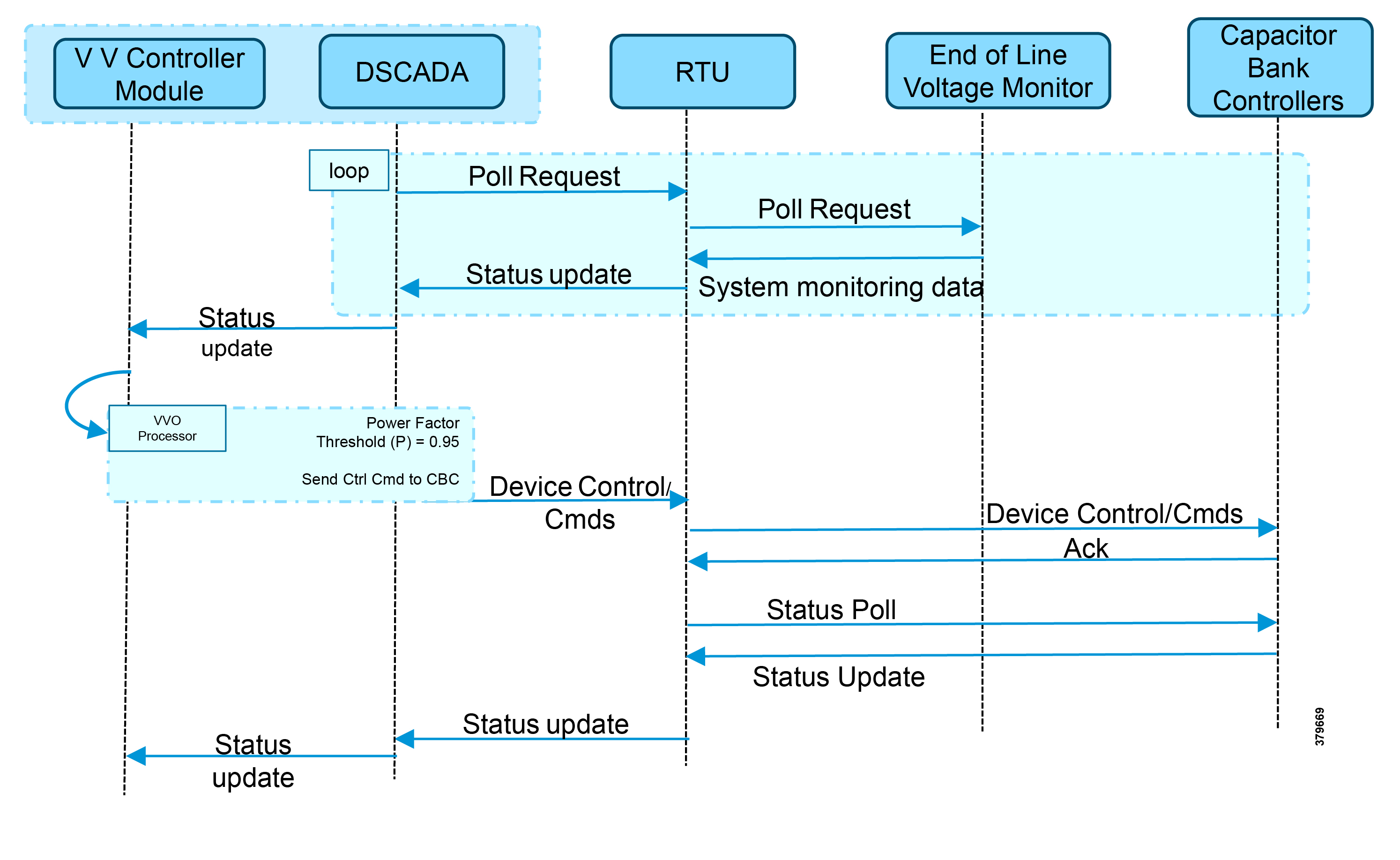

The detailed application flow between different actors for power factor regulation is depicted in Figure 5:

Figure 5 Power Factor Regulation Flows

1.![]() Event class data poll to the following devices from RTU:

Event class data poll to the following devices from RTU:

–![]() Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

–![]() All CBC(s), poll measured Value (Short Floating Point) (0) and double point command(0)

All CBC(s), poll measured Value (Short Floating Point) (0) and double point command(0)

–![]() End-of-line voltage monitor, poll measured Value (Short Floating Point) register (0)

End-of-line voltage monitor, poll measured Value (Short Floating Point) register (0)

2.![]() The Volt/VAR Optimization processor processes the data received from the devices and makes a control command decision based on the power factor calculation.

The Volt/VAR Optimization processor processes the data received from the devices and makes a control command decision based on the power factor calculation.

3.![]() The control command is sent to RTU via SCADA to CBCs to close the Capacitor Bank Controller N by writing in a Control Relay Output Block (CROB) command register in T104.

The control command is sent to RTU via SCADA to CBCs to close the Capacitor Bank Controller N by writing in a Control Relay Output Block (CROB) command register in T104.

4.![]() Event class data poll to the following devices from the RTU:

Event class data poll to the following devices from the RTU:

–![]() Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

–![]() All CBC(s), poll measured Value (Short Floating Point) (0) and double point command(0)

All CBC(s), poll measured Value (Short Floating Point) (0) and double point command(0)

–![]() End-of-line voltage monitor, poll measured Value (Short Floating Point) register(0)

End-of-line voltage monitor, poll measured Value (Short Floating Point) register(0)

5.![]() All the above steps are repeated to all the CBCs on the feeder line to maintain a Power Factor value close to 1.

All the above steps are repeated to all the CBCs on the feeder line to maintain a Power Factor value close to 1.

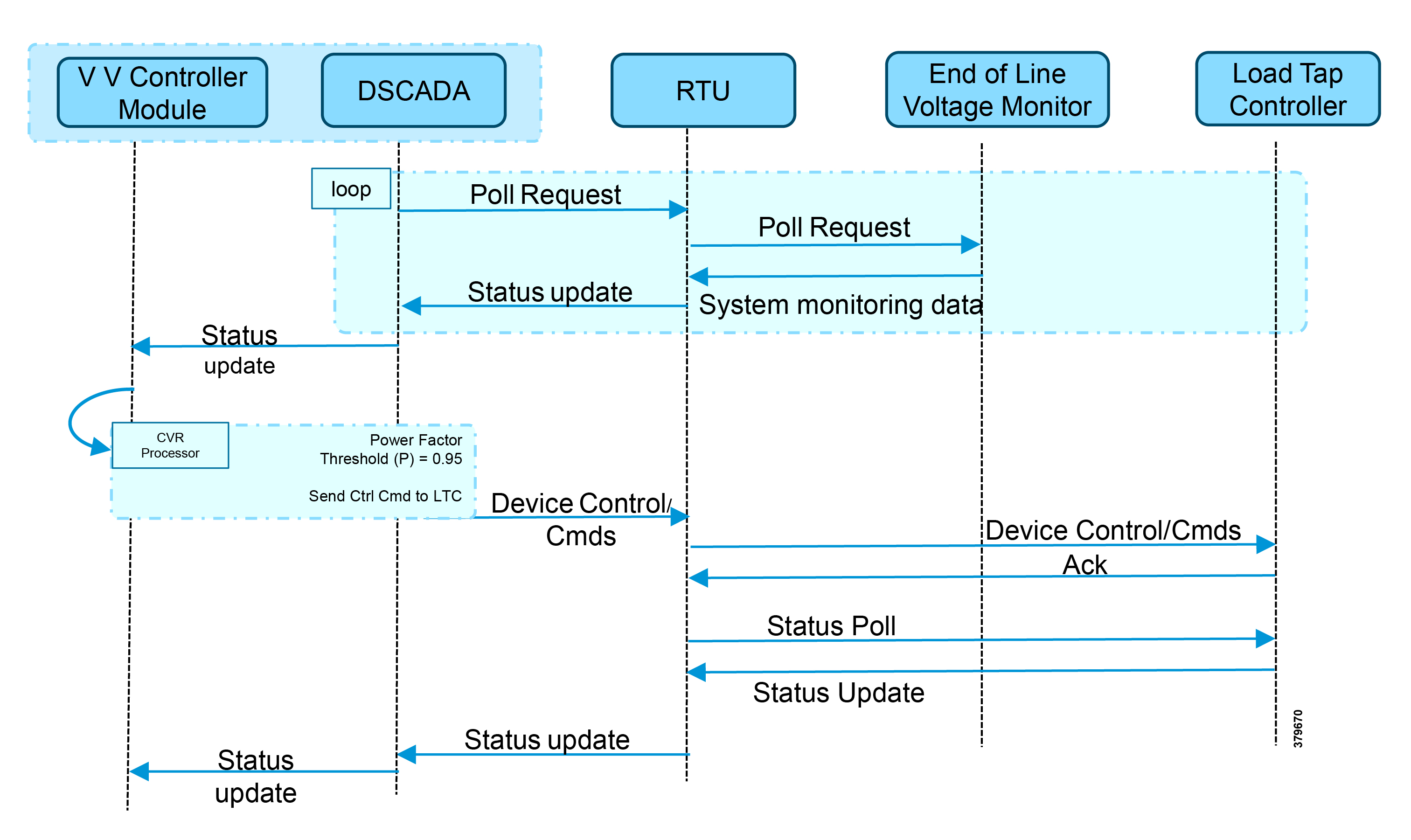

Figure 6 Conservation Voltage Regulation (CVR)

Figure 6 depicts the detail call flow involved in conservation voltage regulation.

1.![]() Event class data poll to the below devices from RTU:

Event class data poll to the below devices from RTU:

–![]() Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

–![]() All CBC(s), poll measured Value (Short Floating Point) (0) and double point command (0)

All CBC(s), poll measured Value (Short Floating Point) (0) and double point command (0)

–![]() End-of-Line voltage monitor, poll measured Value (Short Floating Point) register (0)

End-of-Line voltage monitor, poll measured Value (Short Floating Point) register (0)

2.![]() The Volt/VAR Optimization processor processes the data received from the devices and makes a control command decision based on the power factor calculation.

The Volt/VAR Optimization processor processes the data received from the devices and makes a control command decision based on the power factor calculation.

3.![]() Control command is sent to RTU via SCADA to the load tap controller to lower/raise LTC by writing in a Control Relay Output Block (CROB) command register in T104.

Control command is sent to RTU via SCADA to the load tap controller to lower/raise LTC by writing in a Control Relay Output Block (CROB) command register in T104.

4.![]() Event class data polls to the following devices from RTU:

Event class data polls to the following devices from RTU:

–![]() Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

–![]() All CBC(s), poll measured Value (Short Floating Point) (0) and double point command(0)

All CBC(s), poll measured Value (Short Floating Point) (0) and double point command(0)

–![]() End-of-Line voltage monitor, poll measured Value (Short Floating Point) register (0)

End-of-Line voltage monitor, poll measured Value (Short Floating Point) register (0)

5.![]() The above steps are repeated to maintain a Power Factor value close to 1 along the feeder line.

The above steps are repeated to maintain a Power Factor value close to 1 along the feeder line.

Fault, Location, Isolation, Service Restoration (FLISR)

FLISR Use Case and Benefits

FLISR is the process for dealing with fault conditions on the electrical grid. The following occurs as part of this process:

1.![]() Detects (and locates) faults

Detects (and locates) faults

2.![]() Isolates the faults to the smallest segment of the grid possible

Isolates the faults to the smallest segment of the grid possible

3.![]() Restores as much service as possible while the fault is isolated

Restores as much service as possible while the fault is isolated

FLISR includes automatic sectionalizing and restoration and automatic circuit reconfiguration. These applications accomplish DA operations by coordinating operation of field devices, software, and dedicated communication networks in order to automatically determine the location of a fault and rapidly reconfigure the flow of electricity so that some or all of the customers can avoid experiencing outages. Because FLISR operations rely on rerouting power, they typically require feeder configurations that contain multiple paths to single or multiple other substations. This creates redundancies in the power supply for customers located downstream or upstream of a downed power line, fault, or other grid disturbance.

The benefits of FLISR include:

■![]() Consumers experience minimal outage.

Consumers experience minimal outage.

■![]() Utilities improve the System Average Interruption Duration Index (SAIDI) and the System Average Interruption Frequency Index (SAIFI) numbers and avoid financial penalties being levied by the regulator.

Utilities improve the System Average Interruption Duration Index (SAIDI) and the System Average Interruption Frequency Index (SAIFI) numbers and avoid financial penalties being levied by the regulator.

FLISR application control can be implemented in the following modes:

■![]() Supervised Mode-In supervised mode of operation, no automatic control, system delivers information to operator. Operator initiates manual control actions. Restoration time will be longer in this approach. Please refer to the Distribution Automation - Secondary Substation 1.0 Implementation Guide, which addresses this use case, at the following URL:

Supervised Mode-In supervised mode of operation, no automatic control, system delivers information to operator. Operator initiates manual control actions. Restoration time will be longer in this approach. Please refer to the Distribution Automation - Secondary Substation 1.0 Implementation Guide, which addresses this use case, at the following URL:

–![]() https://salesconnect.cisco.com/#/search/Secondary%2520Substation%2520Implementation%2520Guide/conten t

https://salesconnect.cisco.com/#/search/Secondary%2520Substation%2520Implementation%2520Guide/conten t

■![]() Semi Automatic Mode-A mix of automatic and supervised control is followed. The DA system automatically isolates the fault and performs the restoration part of upstream restoration. The upstream section is between the substation and the faulted section. Manual restoration operation is performed on the downstream section, which is between the fault section and the end of feeder. This guide will address this mode of operation. In this mode, communication happens between IEDs in field to the Distribution Management System (DMS) application residing in control center.

Semi Automatic Mode-A mix of automatic and supervised control is followed. The DA system automatically isolates the fault and performs the restoration part of upstream restoration. The upstream section is between the substation and the faulted section. Manual restoration operation is performed on the downstream section, which is between the fault section and the end of feeder. This guide will address this mode of operation. In this mode, communication happens between IEDs in field to the Distribution Management System (DMS) application residing in control center.

■![]() Fully Automatic Mode-Isolation and restoration happens automatically without any dispatcher intervention. Communication happens directly between a group of associated IEDs. Restoration is very fast (<1 second), but this mode is a complex approach to deploy.

Fully Automatic Mode-Isolation and restoration happens automatically without any dispatcher intervention. Communication happens directly between a group of associated IEDs. Restoration is very fast (<1 second), but this mode is a complex approach to deploy.

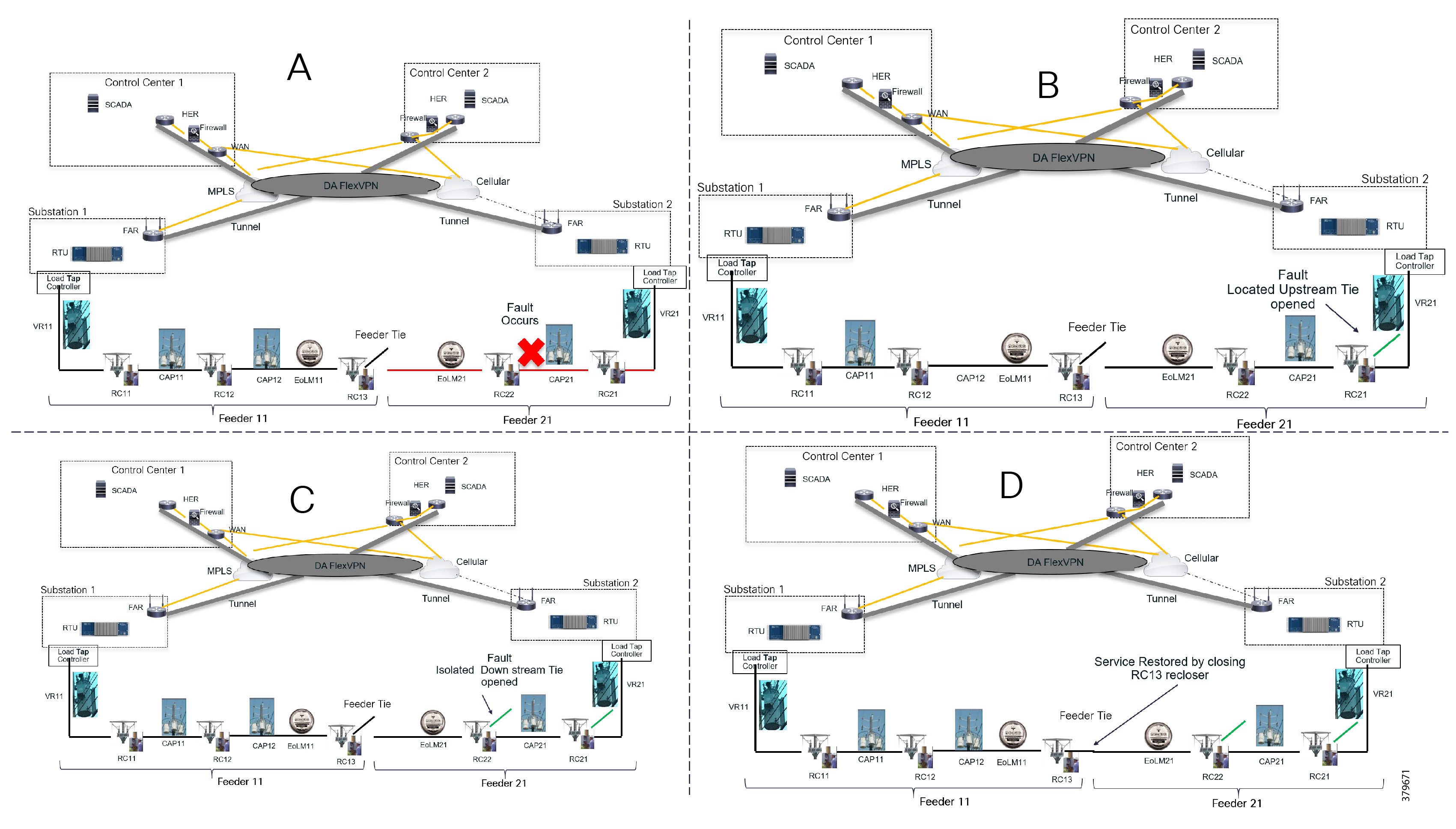

How FLISR Works

Figure 7 is divided into four parts (A,B,C, and D) to show how FLISR operations typically work.

■![]() In Example A of Figure 7, the FLISR system locates the fault, typically using line sensors that monitor the flow of electricity, measures the magnitudes of fault currents, and communicates conditions to other devices and grid operators.

In Example A of Figure 7, the FLISR system locates the fault, typically using line sensors that monitor the flow of electricity, measures the magnitudes of fault currents, and communicates conditions to other devices and grid operators.

■![]() Once located, FLISR opens switches on both sides of the fault: one immediately upstream and closer to the source of power supply (Example B of Figure 7), and one downstream and further away (Example C of Figure 7).

Once located, FLISR opens switches on both sides of the fault: one immediately upstream and closer to the source of power supply (Example B of Figure 7), and one downstream and further away (Example C of Figure 7).

■![]() The fault is now successfully isolated from the rest of the feeder. With the faulted portion of the feeder isolated, FLISR next closes the normally open tie switches to neighboring feeders. This re-energizes the unfaulted portion(s) of the feeder and restores services to all customers served by these unfaulted feeder sections from another substation/feeder (Example D of Figure 7).

The fault is now successfully isolated from the rest of the feeder. With the faulted portion of the feeder isolated, FLISR next closes the normally open tie switches to neighboring feeders. This re-energizes the unfaulted portion(s) of the feeder and restores services to all customers served by these unfaulted feeder sections from another substation/feeder (Example D of Figure 7).

FLISR Actors

■![]() Recloser-The circuit recloser is a self-contained device with a necessary monitoring circuit to detect and interrupt over-current conditions and automatically reclose the line.

Recloser-The circuit recloser is a self-contained device with a necessary monitoring circuit to detect and interrupt over-current conditions and automatically reclose the line.

■![]() Sectionalizing Switch or Remote Control Switch-Remote Controller Switches can be load break or fault interrupting devices.

Sectionalizing Switch or Remote Control Switch-Remote Controller Switches can be load break or fault interrupting devices.

■![]() Remote Fault Indicator-Used to detect faults.

Remote Fault Indicator-Used to detect faults.

■![]() Distribution Management System (DMS)-The DMS application residing in the DSO control center is an intelligent application, which is the brain of FLISR systems and which performs application circuit reconfiguration logic.

Distribution Management System (DMS)-The DMS application residing in the DSO control center is an intelligent application, which is the brain of FLISR systems and which performs application circuit reconfiguration logic.

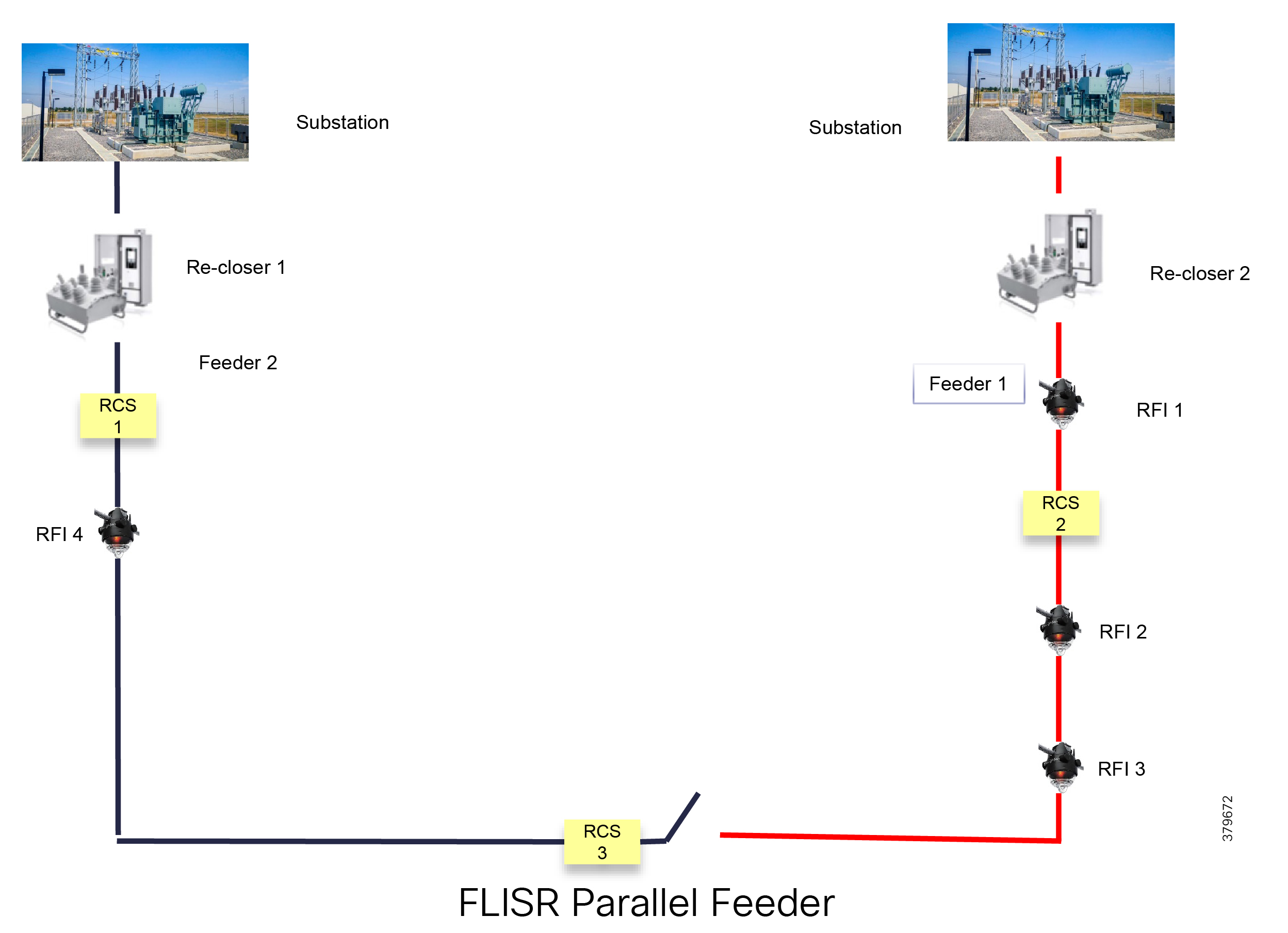

Figure 8 depicts a parallel feeder distribution system. Two distribution feeders are common out of two different Secondary Substations and each feeder has a recloser associated with it. Remote Fault Indicators and remote control switches are distributed across both feeders. RCS3 3 is, by default, an open switch.

Figure 8 FLISR Parallel Feeders

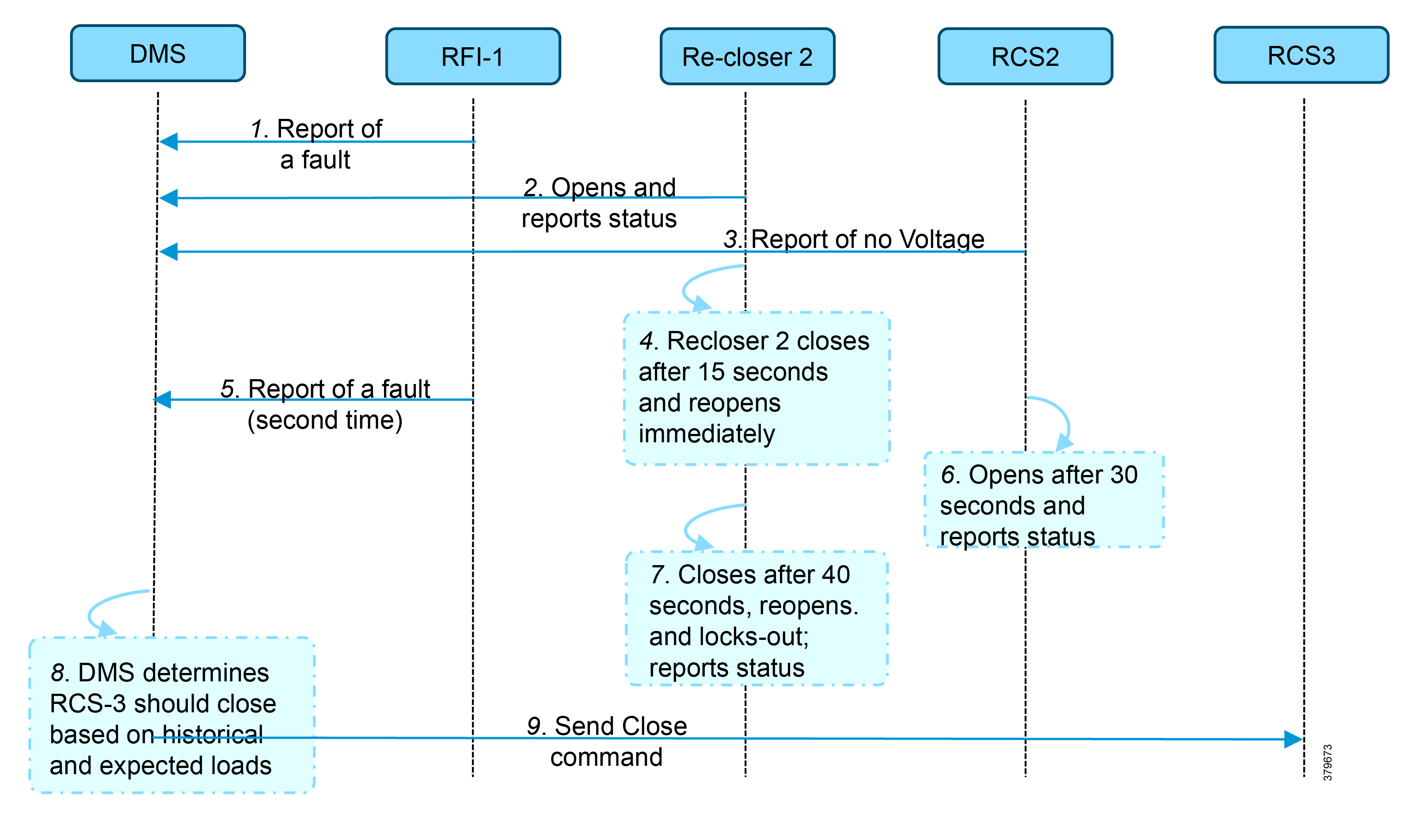

Figure 9 FLISR Application Communication Flow

In Figure 9, the application flow can be observed happening directly from feeder devices to the DMS application in the DSO control center. The flow is summarized below:

■![]() Remote Fault Indicator (RFI) 1 reports to the Distribution Management System (DMS) whenever it encounters a fault.

Remote Fault Indicator (RFI) 1 reports to the Distribution Management System (DMS) whenever it encounters a fault.

■![]() Recloser2 opens and send a report to DMS when it encounters a temporary fault.

Recloser2 opens and send a report to DMS when it encounters a temporary fault.

■![]() Recloser2 opens and send a report to DMS when it encounters a permanent fault.

Recloser2 opens and send a report to DMS when it encounters a permanent fault.

■![]() Remote Control Switch (RCS) 2 reports no voltage status to DMS.

Remote Control Switch (RCS) 2 reports no voltage status to DMS.

■![]() RCS 2 opens when it encounters faults for second time and send a report to DMS.

RCS 2 opens when it encounters faults for second time and send a report to DMS.

■![]() DMS issues a close command to the RCS 3.

DMS issues a close command to the RCS 3.

■![]() DMS initiates a periodic poll (every minute) for the all feeder devices.

DMS initiates a periodic poll (every minute) for the all feeder devices.

■![]() DMS initiates a solicit periodic poll (every 5 minutes once) for all feeder devices.

DMS initiates a solicit periodic poll (every 5 minutes once) for all feeder devices.

DA Solution FLISR Use Case using SEL devices over CR mesh

Cisco Resilient (CR) mesh solution provides a reliable communication infrastructure, with bandwidth capacity and low latency that meets the DA use case performance requirements.

Schweitzer Electric Engineering Laboratories (SEL) is one of the major utility grid equipment and DA solution vendor in North America. Cisco and Schweitzer Engineering Laboratories (SEL) have collaborated on joint validation of FLISR use case over Cisco CR mesh. This joint validation used Fault Location Isolation and Service Restoration (FLISR) products from SEL operating over Cisco Resilient Mesh. Operational measurements such as trip time, data alignment, service restoration and operational consistency were recorded and the application was found to work very well over CR mesh.

This section provides guidance to utilities that they could privately own and operate a FAN radio network in the ISM 902-928 MHz band, such as Cisco Resilient Mesh, as a multi-service solution working well with Distribution Automation applications. Cisco has committed to validate the major DA use cases like FLISR within their indoor labs and outdoor pilot locations.

Schweitzer Electric Laboratories (SEL) has a comprehensive solution for the DA FLISR application that can be deployed in centralized architecture. The solution uses a controller device to provide advanced restoration capabilities that can be located in the control center. Combined with Cisco Resilient Mesh communication infrastructure the FLISR application can operate in fully automatic mode.

The SEL equipment listed below is used for Validation:

■![]() SEL Real-Time Automation Controller (RTAC)

SEL Real-Time Automation Controller (RTAC)

■![]() SEL Advanced Recloser Control (SEL-651R)

SEL Advanced Recloser Control (SEL-651R)

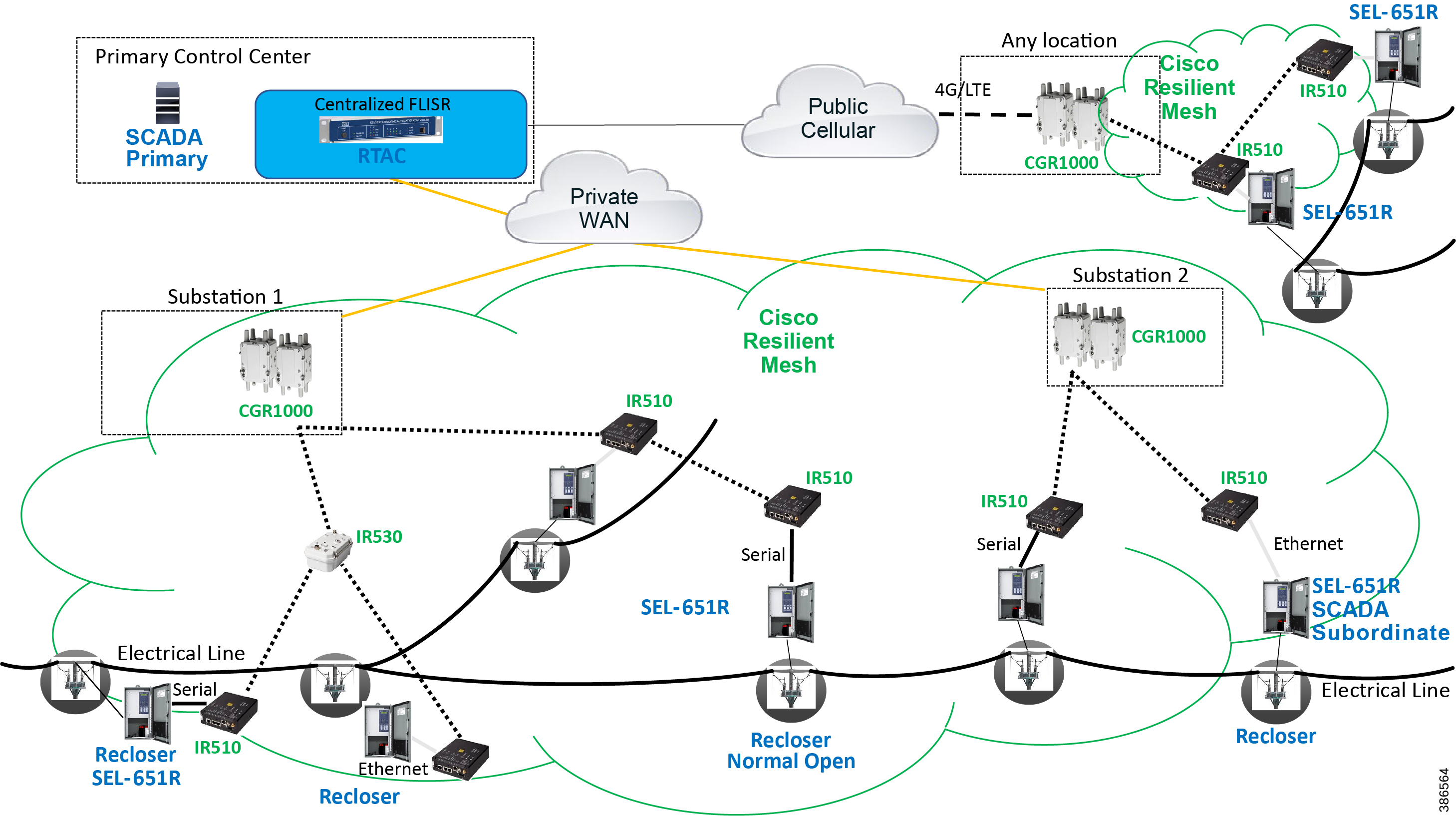

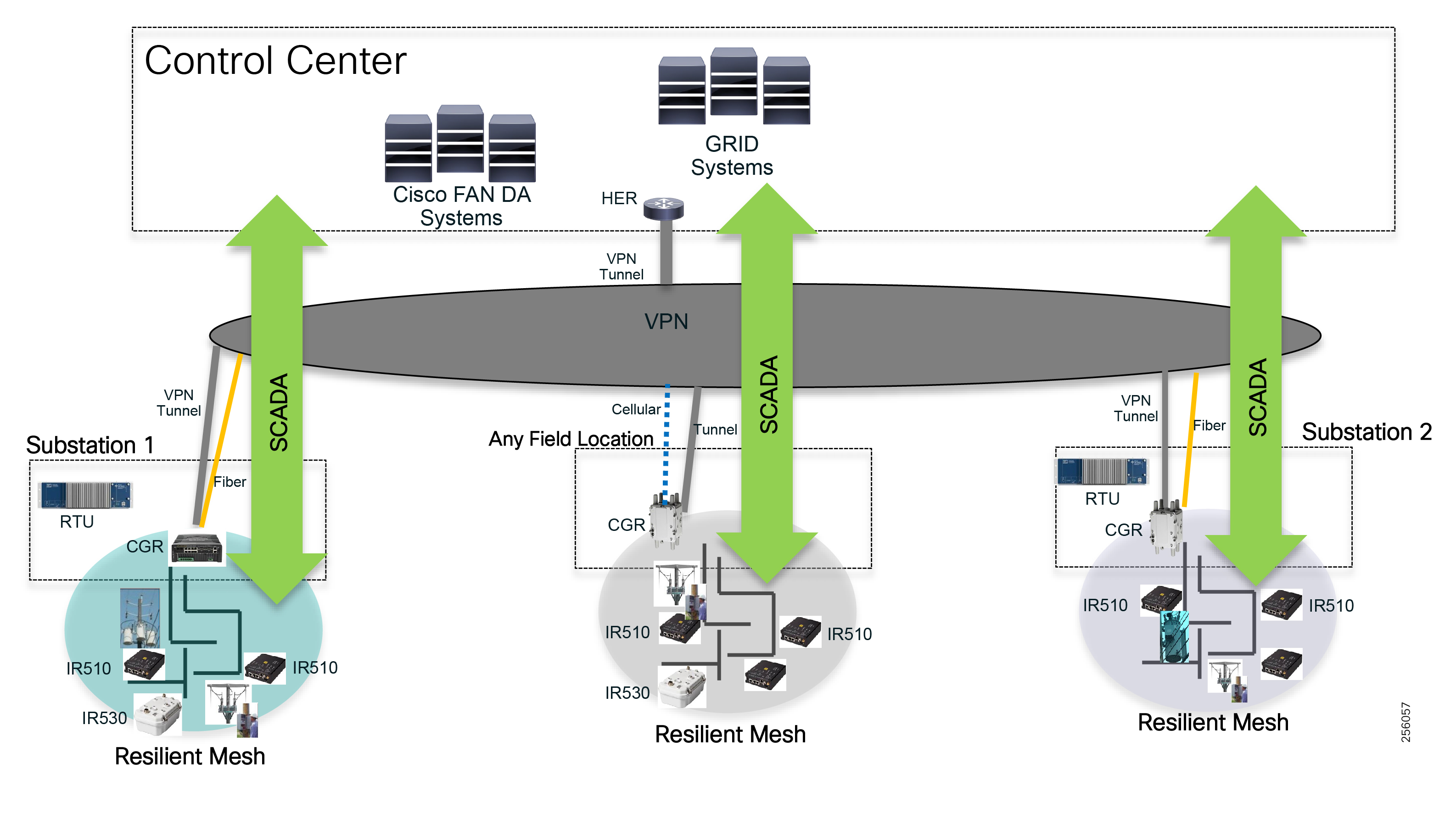

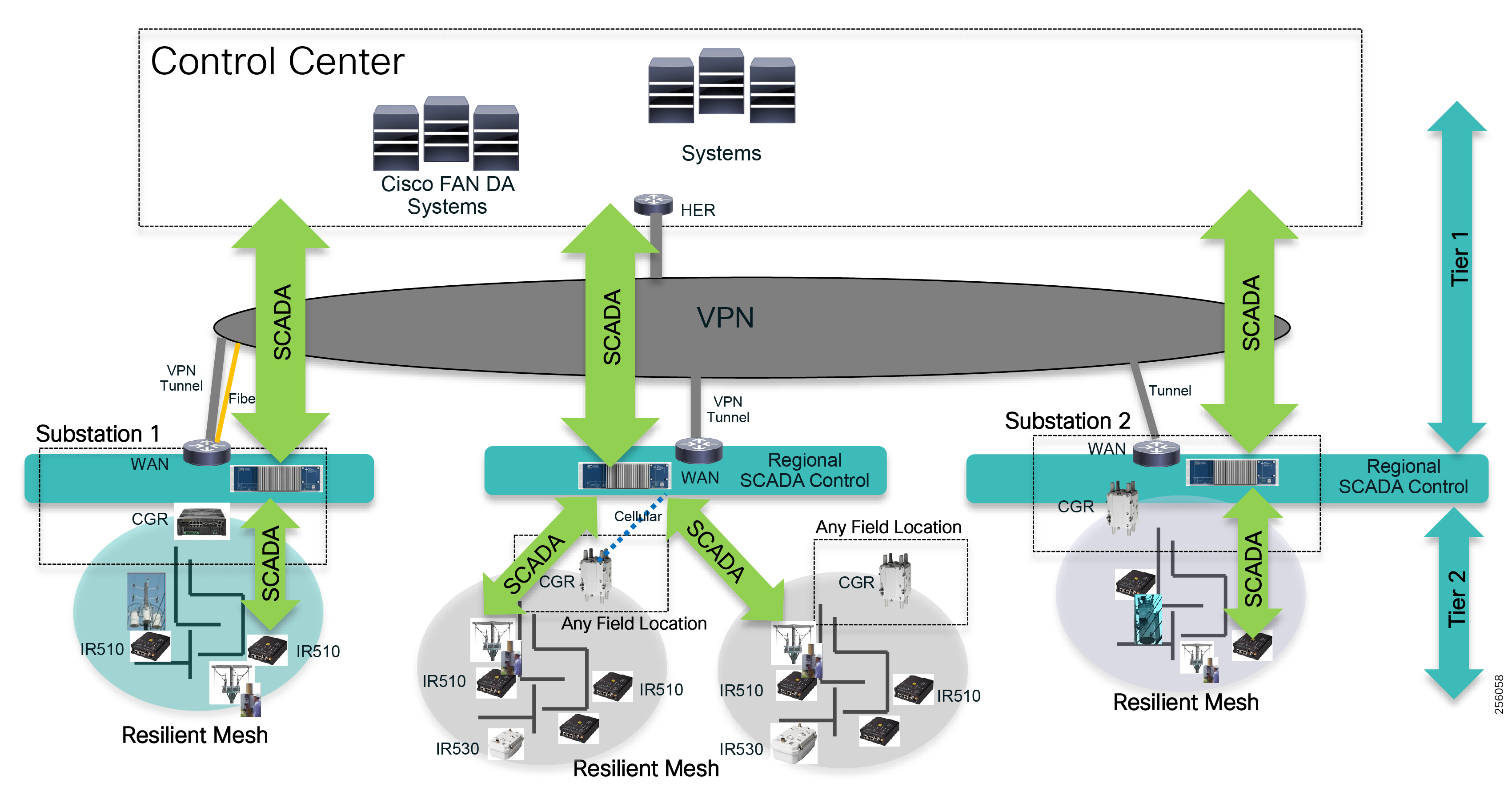

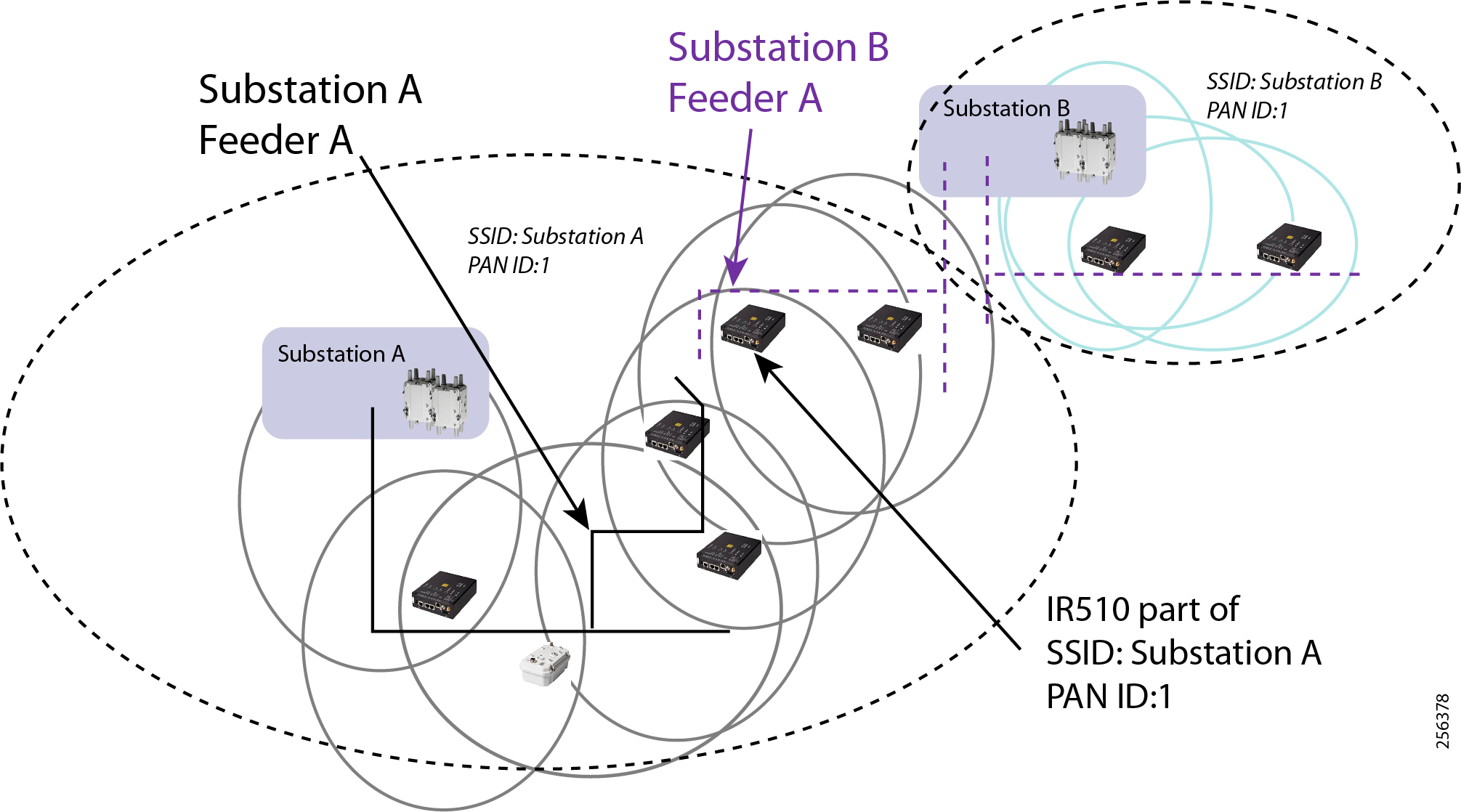

Cisco Resilient Mesh and SEL FLISR Architecture

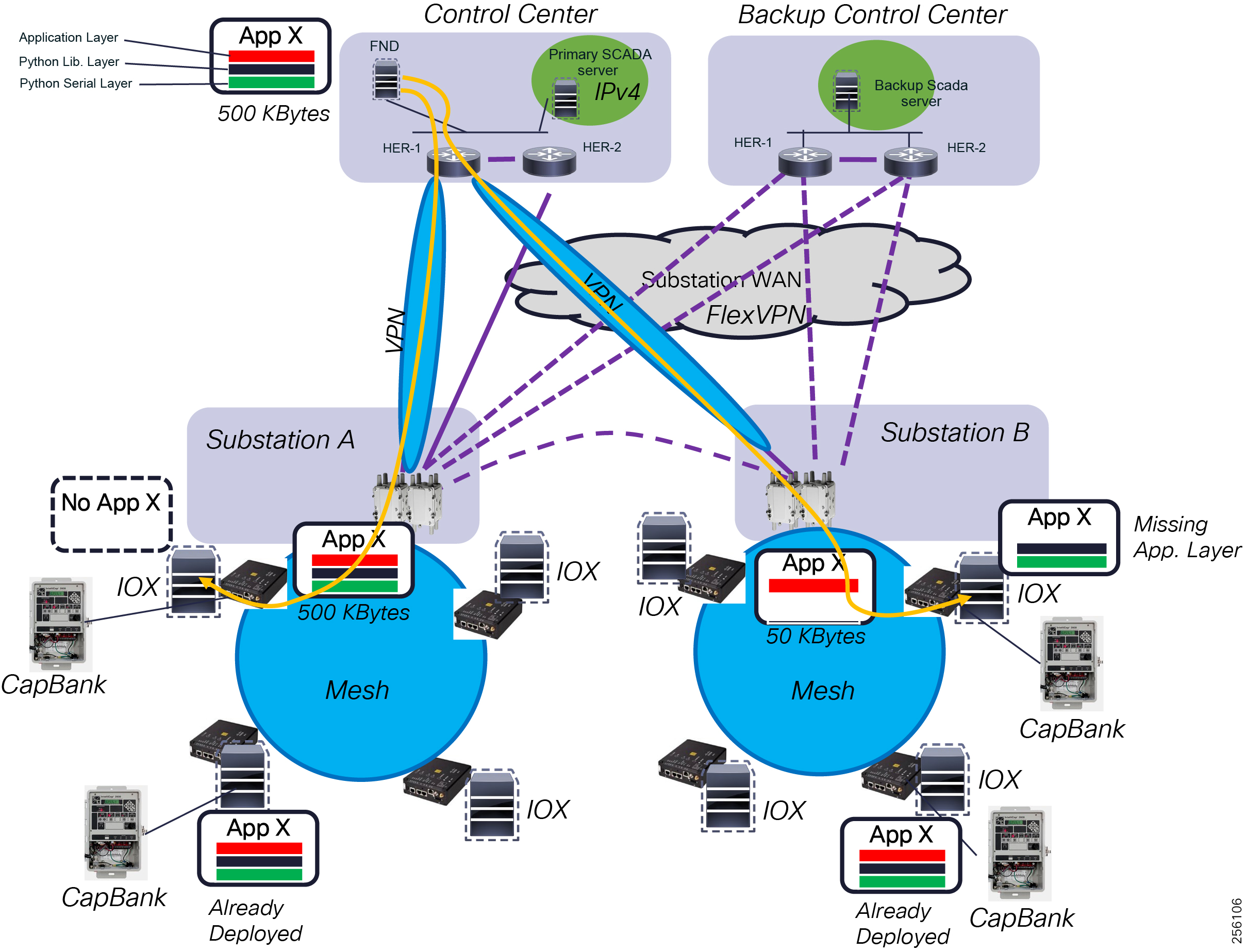

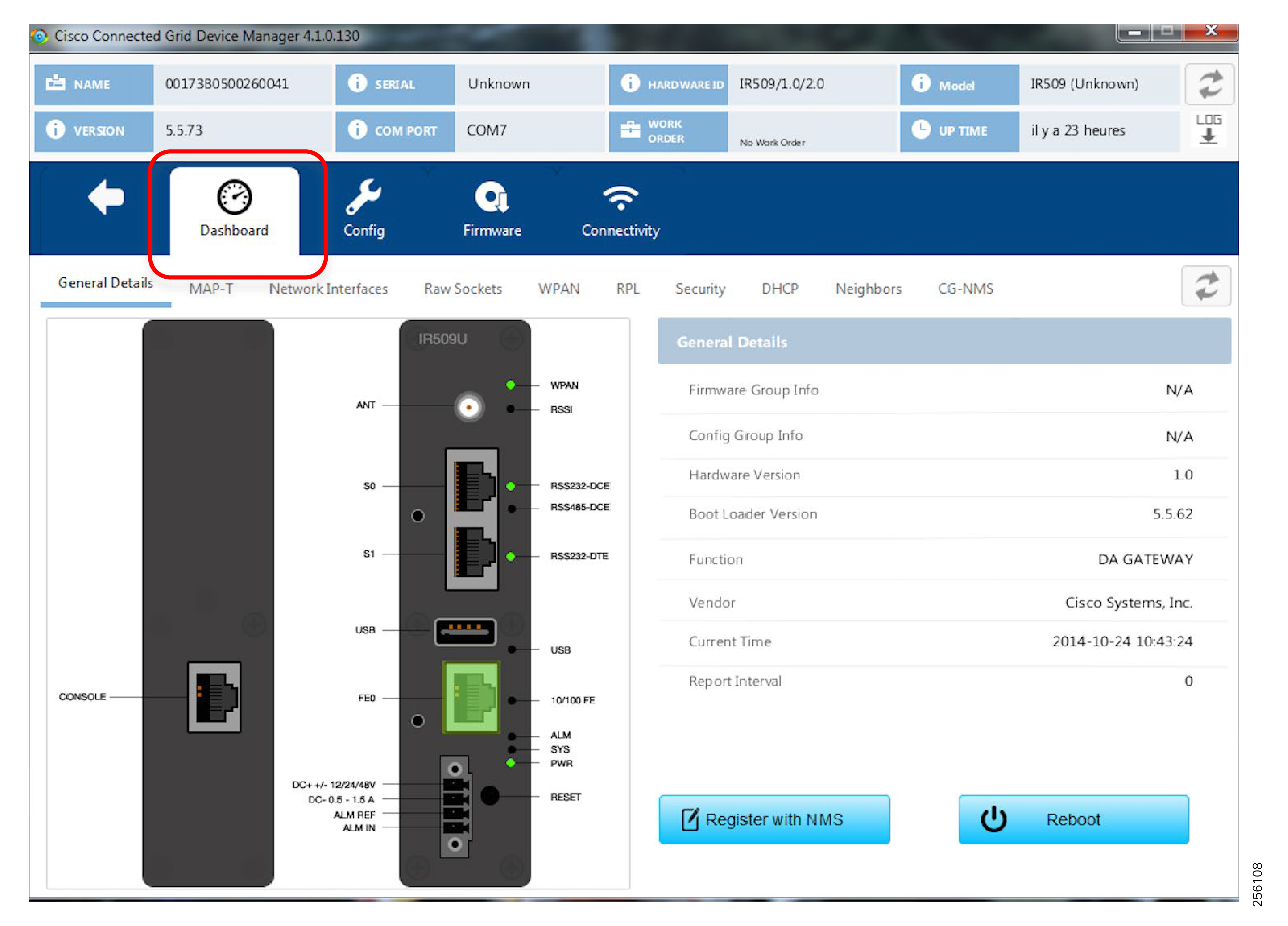

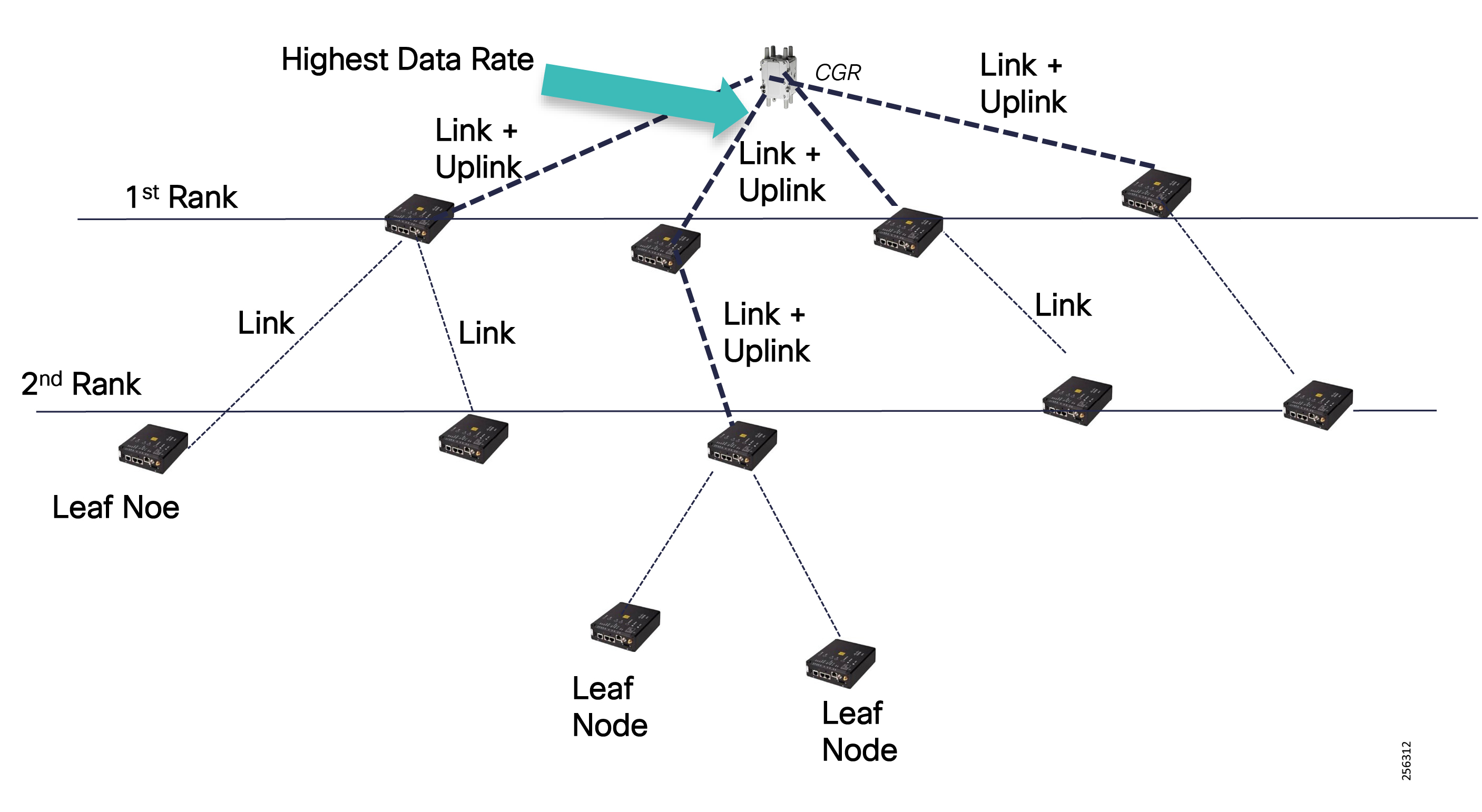

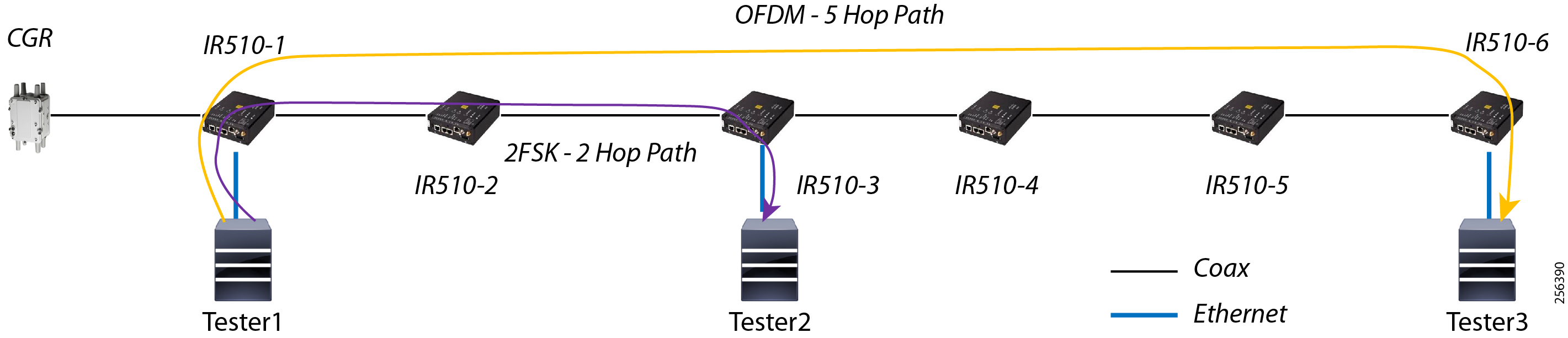

Cisco resilient mesh and SEL FLISR architecture are shown in Figure 10. SEL FLISR controller device deployment follows a centralized FLISR architecture. The SEL FLISR controller devices are the outstation devices. These outstation devices connect to Cisco IR510 DA gateways, enabling the communication path. Cisco CR mesh comprising IR510, IR530 can be aggregated at a CGR1000 series of routers, usually located in the substation/along the feeder. The CGR1000 series of routers can talk to the control center over Private WAN (or) Public Cellular and fiber networks. Cisco Resilient mesh provides communication infrastructure to the SEL FLISR Architecture.

Figure 10 Cisco Resilient Mesh and SEL FLISR Architecture

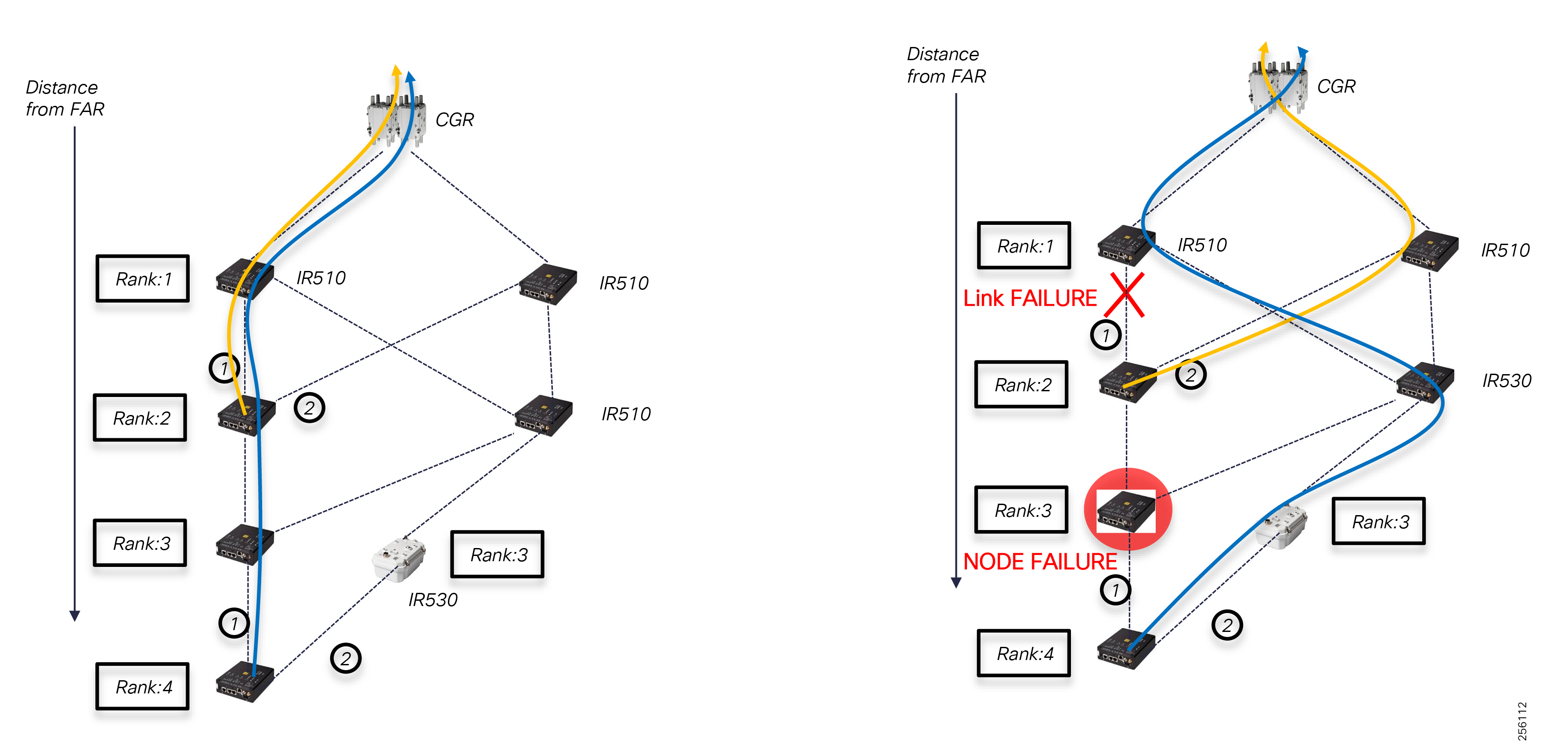

The SEL reclosers connect to the Cisco Resilient Mesh Industrial Routers (IR510) using Ethernet.

The Cisco IR510 DA gateways establish a reliable mesh wireless network based on signal propagation radio design. For instances where the radio signal cannot cover a certain area or signal levels are weak, Cisco Range Extenders (IR530) can be deployed to increase signal coverage for that area. CR mesh based on ISM 902-928MHz and IEEE802.15.4g/e standard using OFDM modulation with a physical data rate up to 1.2 Mbps can support the performance requirements of the FLISR application.

The SEL and Cisco DA solutions help utilities lower the SAIDI (System Average Interruption Duration Index) and SAFI (System Average Interruption Frequency Index) performance metrics which reflects the reliability of their Power Distribution network.

DNP3/IP messages between the SEL-651R recloser and the SEL controller are reliable routed through the mesh network via the most optimal path to the field area border router, such as Cisco CGR 1000 router, known as the mesh exit point, and then via either a fiber network or Cellular network to the Control Center location.

The SEL FLISR solution can also be deployed in a Centralized architecture where one or more RTAC devices are installed in the Control Center and each controller services an area that is not necessarily bonded to a substation service area. This approach has a lower deployment cost.

The Distribution Automation system consists of a DA controller (DAC) that communicates with multiple recloser controls, switch controls, feeder relays, and a wide range of other intelligent electronic devices (IEDs). The DAC is implemented on the SEL Real-Time Automation Controller (RTAC) family of controllers. The DAC can be applied to a wide variety of distribution network arrangements. The DACS addresses two major control objectives for the power distribution system: automatic reconfiguration (AR) and dynamic feeder optimization (DFO). This design will focus on the automatic reconfiguration since it has more stringent communications requirements.

The DAC functions to detect permanent fault and open-phase conditions on the distribution network. The DAC will act to isolate the affected zone of the feeder and restore power to the unaffected zones of the feeder from the normal source and from alternative sources, if available. The DAC also functions to detect overload conditions on the distribution network. The DAC will act to mitigate overloads by transferring load to adjacent feeders or by load shedding if alternative capacity is unavailable. In addition, the DAC functions to detect station events and loss-of-source events. The DAC will act to isolate the affected station and restore power to the unaffected feeders from alternative sources, if available.

DA FLISR Use case with SEL

Solution Overview

Cisco Resilient Mesh networks can support the SEL FLISR application over a variety of topologies and places in the network. Contents of the subsequent sections is summarized below:

These topologies were considered during the joint validation activity:

Each topology is described with the following:

■![]() FLISR Topology-based one-line diagram (with segment division)

FLISR Topology-based one-line diagram (with segment division)

■![]() FLISR Topology-based SEL device to Cisco device mapping diagram

FLISR Topology-based SEL device to Cisco device mapping diagram

■![]() FLISR Topology-based SEL device to Cisco device mapping table.

FLISR Topology-based SEL device to Cisco device mapping table.

FLISR Fault scenarios are described below:

For the SEL FLISR use case in Rural/Urban topology, the following faults in the power line are considered:

Each of these FLISR Fault scenarios are considered in three states:

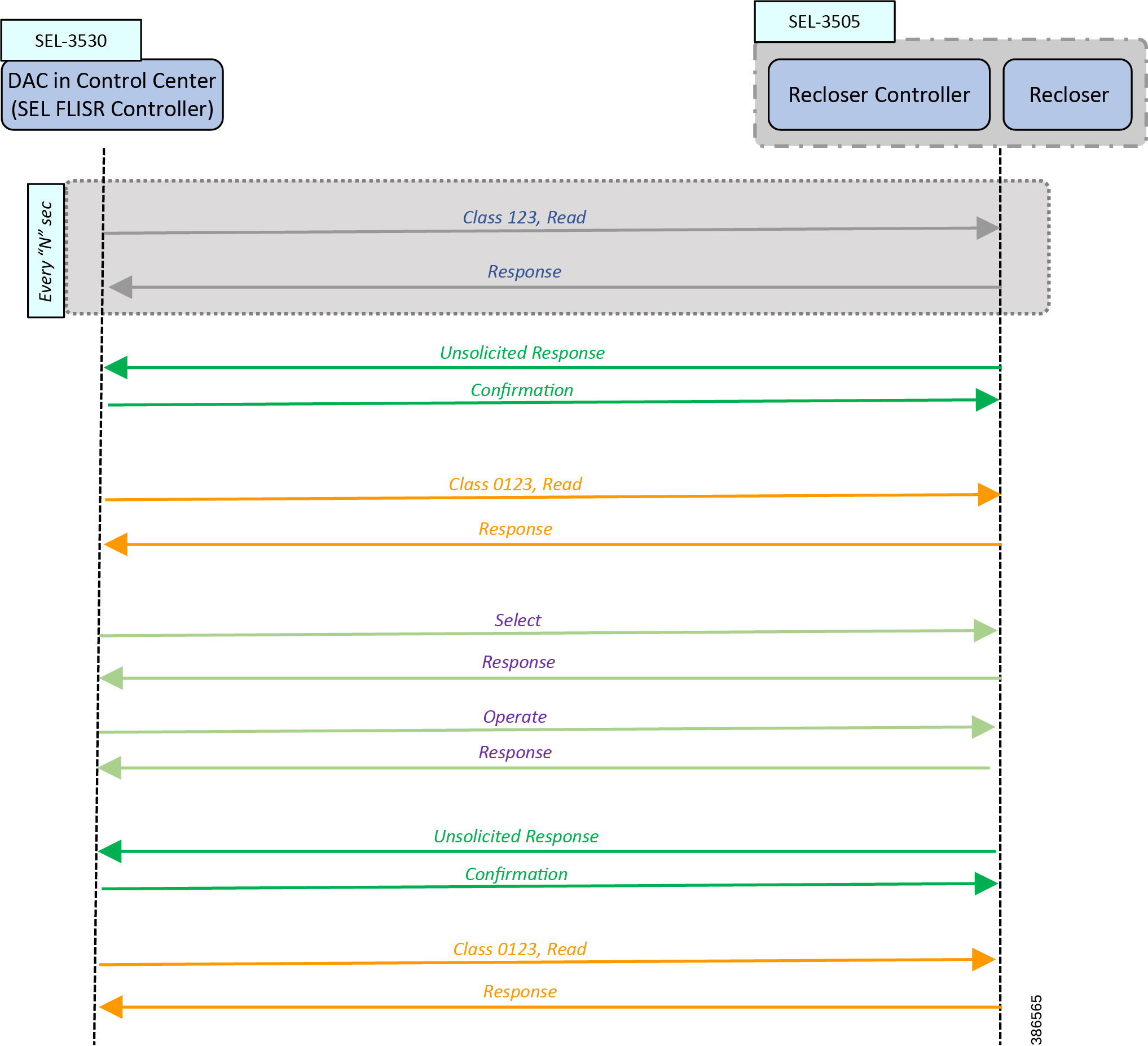

SEL FLISR Use case Validation Call Flow Sequence

The call flow sequence of the SEL FLISR use case validation uses the DNP3/IP application protocol. UDP is the recommended protocol at the transport layer to transport the DNP3/IP messages. The two topologies for SEL FLISR use case validation are:

Figure 11 FLISR - Call Flow Event Sequence - Application DNP3 between DAC and IED

The Call flow sequence in Figure 11 is categorized into these major blocks:

■![]() Unsolicited Response/Confirmation

Unsolicited Response/Confirmation

Of the Commands listed above, Class123 is a periodic command; others are event-driven commands. For example, Unsolicited Response is an event-driven command.

Class0123 is used as periodic command, but with a longer duration of polling interval. The interval is once every 30 minutes in case of FLISR over radio.

In Figure 11, the Class123 event is configured to operate periodically every “N” seconds.

In the following FLISR Fault scenarios, these faults are introduced in specific segments of the Urban/Rural topology:

When these faults are introduced into the SEL FLISR (Urban/Rural) topology into a particular segment, the SEL device in the affected segment raises “Unsolicited Response” messages to the SEL FLISR Controller (DAC), located in the control center.

DAC then sends an acknowledgment “Confirmation” message to the corresponding SEL device (Recloser Controller).

The “Unsolicited Response” conveys a change of state in the network. The SEL DA FLISR Controller performs “Class0123 Read” of all the related set of SEL devices to get the holistic view of the SEL FLISR Topology status.

In response to the Class0123 read request from the DAC, the SEL devices then respond with “Class 0123 Response” to the SEL FLISR Controller. The set of related SEL devices includes devices in the affected feeder section(s) and any adjacent sections connected with a normally open point.

The SEL FLISR Controller performs a FLISR computation, and decides which SEL recloser devices it must open, and which SEL recloser devices it must close. To accomplish this, the SEL FLISR Controller uses a “Select” message, followed by “Operate” message. Alternatively, the DA FLISR controller could directly perform “Operate” commands instead of Select before Operate. For each of the “Select” or “Operate” messages from the SEL FLISR Controller, the Recloser responds back with “Response” message.

Recommendation from SEL is to use direct Operate, as Select before Operate is a remnant of the past when auxiliary relays were used. This serves an additional purpose of reducing the load on the mesh by a fraction. Bandwidth conserved is the bandwidth earned for other application traffic.

When the status of the SEL device changes, the SEL recloser controller updates the SEL FLISR DA Controller device using “Unsolicited response” message, which is acknowledged back by DAC using “Confirmation” message.

When the SEL FLISR DA Controller requires a holistic view of the current status of the topology, it sends “Class0123 Read” to all the related SEL recloser controller devices. The current status of the related device is updated back in the corresponding “Class0123 Response” message sent by SEL recloser controller device to the DAC. The set of related SEL devices includes devices in the affected feeder section(s) and any adjacent sections connected with a normally open point.

This completes the SEL FLISR Event call flow sequence. This sequence could happen over SEL FLISR Urban/Rural topology, which are discussed in upcoming sections.

Note: Throughout this section, the terms DAC and SEL FLISR DA Controller are used interchangeably.

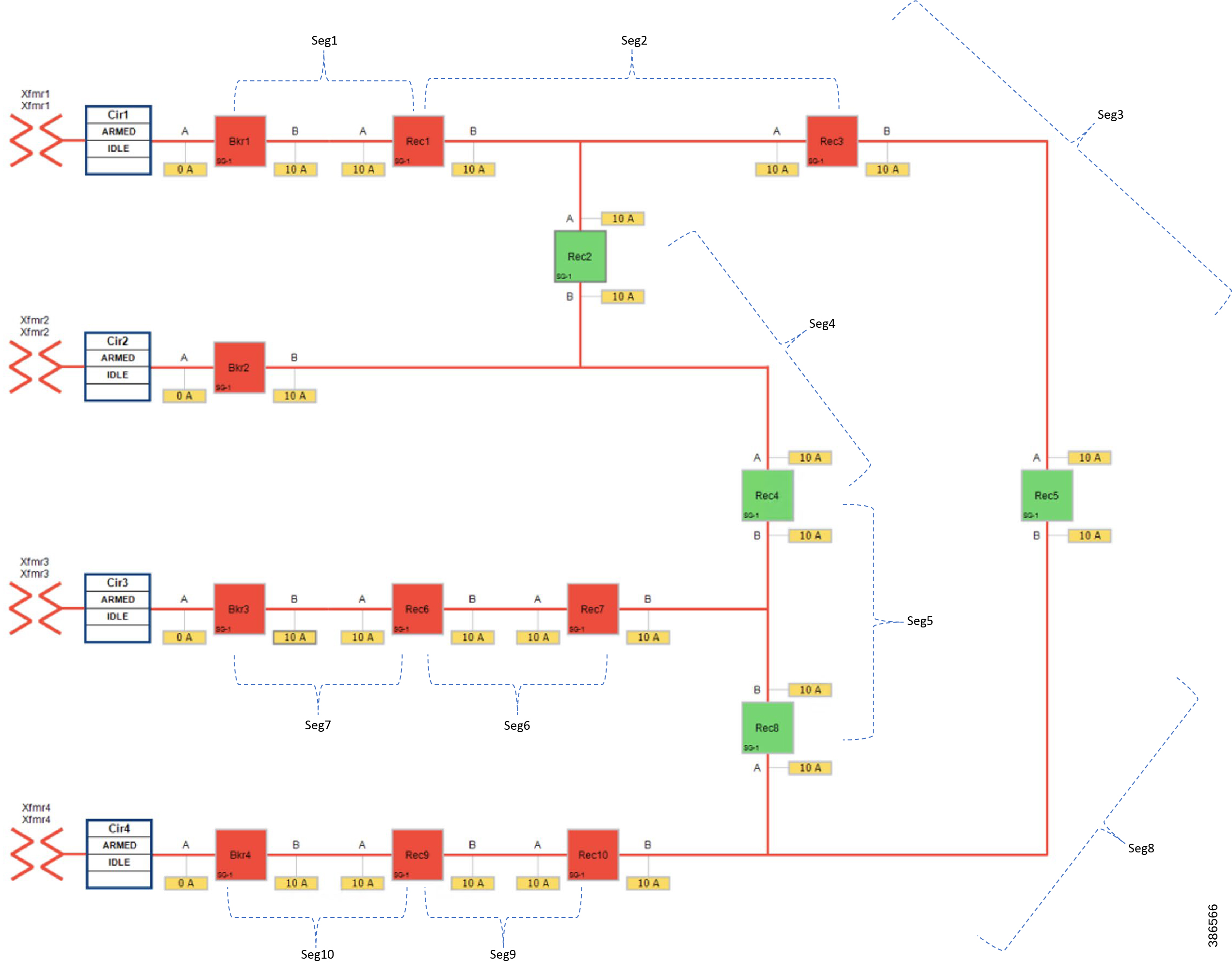

Cisco SEL FLISR Use case – Urban Topology

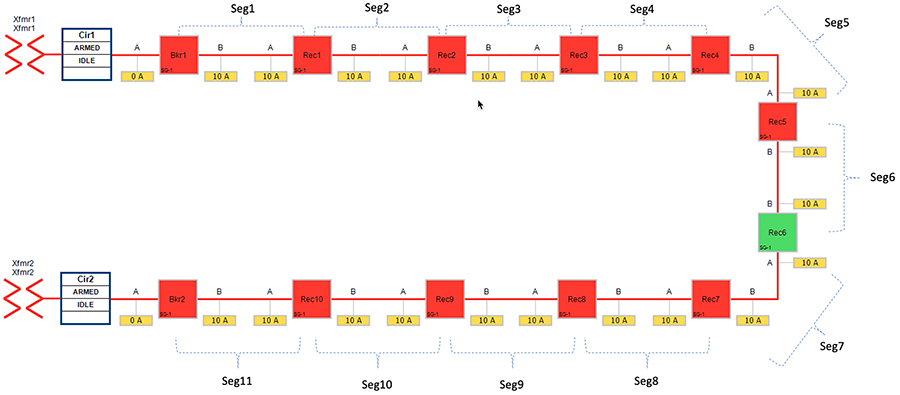

FLISR Topology, Urban Area - One-line diagram:

Figure 12 FLISR Topology, Urban Area – One-line diagram

The one-line diagram in Figure 12 describes the FLISR topology in urban area. The topology has been divided into ten segments (Seg1, Seg2,..., Seg10). Red boxes represent Energized (Closed) reclosers, while green boxes representing unenergized (Normally open) reclosers.

■![]() Seg 1, Seg 2 and Seg 3 were energized from Transformer1

Seg 1, Seg 2 and Seg 3 were energized from Transformer1

■![]() Seg 4 was energized from Transformer2

Seg 4 was energized from Transformer2

■![]() Seg 5, Seg 6, Seg 7 were energized from Transformer3

Seg 5, Seg 6, Seg 7 were energized from Transformer3

Urban FLISR Topology - SEL device to Cisco device mapping

Figure 13 FLISR Topology, Urban Area – SEL reclosers to Cisco device mapping

This topology captures the 1-to-1 mapping of SEL recloser devices to Cisco IR510 devices. The controller device is located in the Primary control center. CR Mesh is aggregated at the Field Area Network aggregator using CGR1000 series router which can be located in the substation. The communication between substation and control center can be over public/private WAN. The SEL device is positioned behind IR510 and connected using Ethernet.

■![]() The device representing DA CONTROLLER (SEL3530-2) is located in Primary control center.

The device representing DA CONTROLLER (SEL3530-2) is located in Primary control center.

■![]() The device representing Recloser 9 (SEL3505-9) is associated with IR510-13.

The device representing Recloser 9 (SEL3505-9) is associated with IR510-13.

■![]() The device representing Recloser 6 (SEL3505-6) is associated with IR510-12.

The device representing Recloser 6 (SEL3505-6) is associated with IR510-12.

■![]() The device representing Recloser 1 (SEL3505-1) is associated with IR510-1.

The device representing Recloser 1 (SEL3505-1) is associated with IR510-1.

■![]() The device representing Recloser 10 (SEL505-10) is associated with IR510-23

The device representing Recloser 10 (SEL505-10) is associated with IR510-23

■![]() The device representing Recloser 7 (SEL505-7) is associated with IR510-22

The device representing Recloser 7 (SEL505-7) is associated with IR510-22

■![]() The device representing Recloser 2 (SEL505-2) is associated with IR510-21

The device representing Recloser 2 (SEL505-2) is associated with IR510-21

■![]() The device representing Recloser 8 (SEL505-8) is associated with IR510-33

The device representing Recloser 8 (SEL505-8) is associated with IR510-33

■![]() The device representing Recloser 4 (SEL505-4) is associated with IR510-32

The device representing Recloser 4 (SEL505-4) is associated with IR510-32

■![]() The device representing Recloser 5 (SEL505-5) is associated with IR510-42

The device representing Recloser 5 (SEL505-5) is associated with IR510-42

■![]() The device representing Recloser 3 (SEL505-3) is associated with IR510-4

The device representing Recloser 3 (SEL505-3) is associated with IR510-4

The following table captures the Individual mapping of the SEL device with the Cisco Mesh and the mesh depth. The mapped pair of SEL/Cisco device is located on the mesh. The FLISR Controller (SEL3530-2) is located in the control center. The rest of the SEL devices (SEL 3505) are located along the substation and feeder.

Table 1 FLISR Urban Topology Components

The next section discusses a few different types of FLISR Faults (Fault with LockOut, Open Phase, Loss of Source), each of them in three different states (Normal, Fault, Restored).

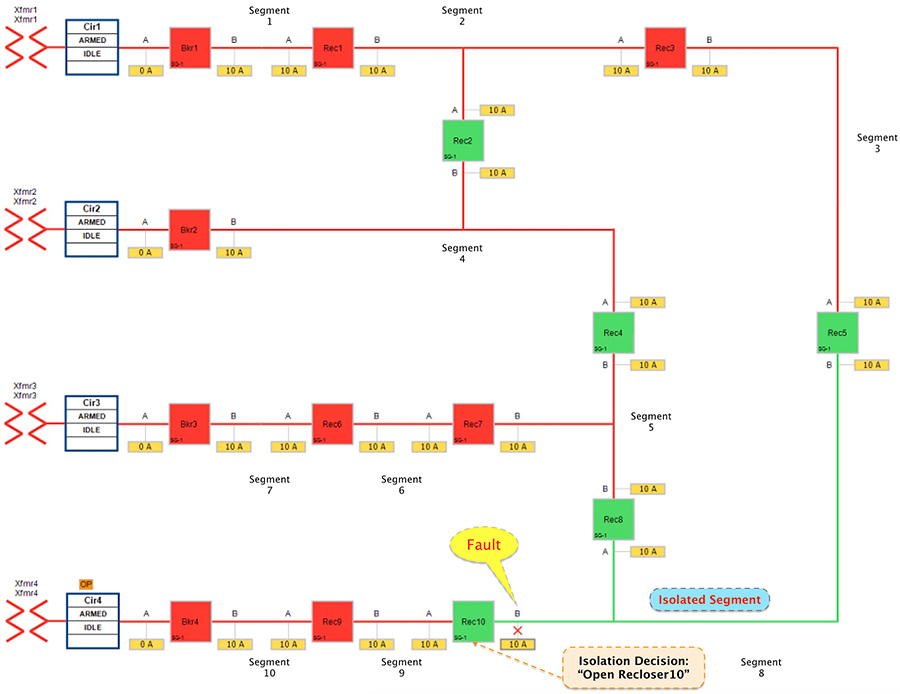

FLISR Fault scenario - Fault with Lock Out

In the case of a permanent fault, the recloser goes into lockout state until the fault is resolved by utility technician. For example, when a tree branch falls on the distribution line and conductors break each recloser that noticed the fault will trip and send an unsolicited DNP3/IP message upstream to the DA FLISR Controller. This covers the Fault Location identification phase of FLISR.

When the fault location is identified, the DA controller gets the overall current status of the topology by sending a Class0123 poll to all the related reclosers. This group includes devices in the affected feeder section(s) and any adjacent sections connected with a normally open point. Then the DAC performs the FLISR restoration computation, and proceeds to the FLISR restoration phase.

In the FLISR restoration phase, the DA Controller sends DNP3/IP commands to the respective reclosers that needs to change state in order to restore the services. Once the DA FLISR controller finishes the reconfiguration, it performs one round of Class0123 polling to ensure the stability. This covers the Fault Isolation and Service Restoration phase of the FLISR.

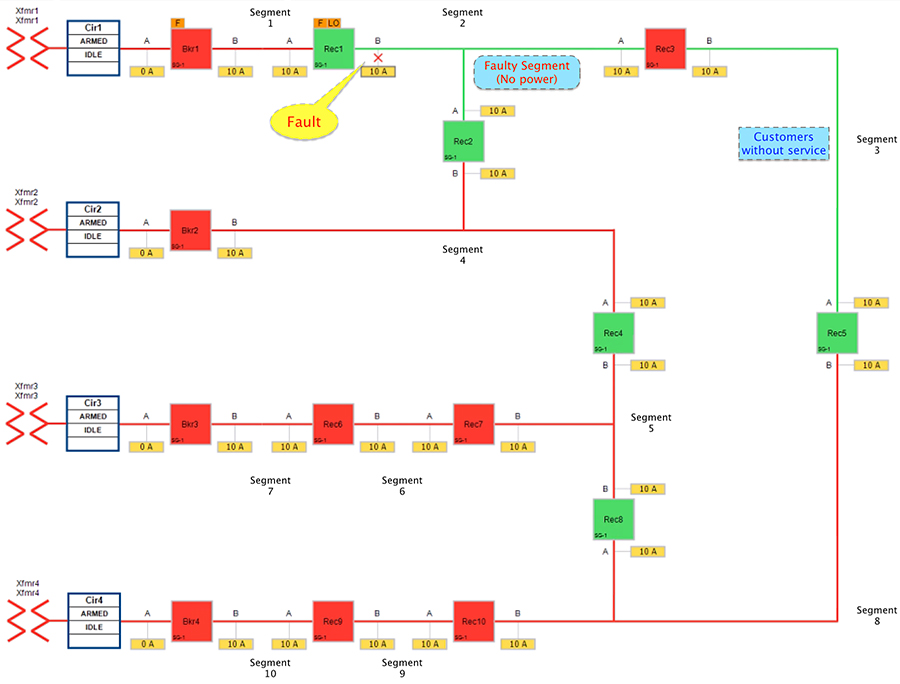

The Urban One-line topology in normal operational state is shown below.

Figure 14 Urban Topology - Fault with Lockout - Normal State

When a fault is introduced in Segment 2 between Recloser1 and Recloser2, at point B, recloser1 on the segment changes its state from Normally Closed state (NC) to Open state. This results in loss of power to the segments shown in the figure below.

■![]() Segment 2 (where the fault occurred)

Segment 2 (where the fault occurred)

■![]() Segment 3 (where the fault did not occur)

Segment 3 (where the fault did not occur)

Figure 15 Urban Topology - Fault with Lockout - Fault State

In the faulted state, customers in the region in segment 2 experience a loss of power; customers in the non-faulted region in segment 3 are also experiencing the loss of power.

The moment the fault occurred, recloser1 that detects the fault (in segment2) changes its state from Close to Open and enters Lockout state. The DA FLISR controller then sends Class0123 polling after one recloser goes into Lockout state, indicating there is a permanent fault.

Points to note in the topology:

■![]() Recloser1: Should change the state from Normally Closed state to Open state and locked out.

Recloser1: Should change the state from Normally Closed state to Open state and locked out.

■![]() Recloser3: might continue to be in Closed state, but no power on the feeder line.

Recloser3: might continue to be in Closed state, but no power on the feeder line.

■![]() Consumers of power located in Segment2 and Segment3 would experience loss of service.

Consumers of power located in Segment2 and Segment3 would experience loss of service.

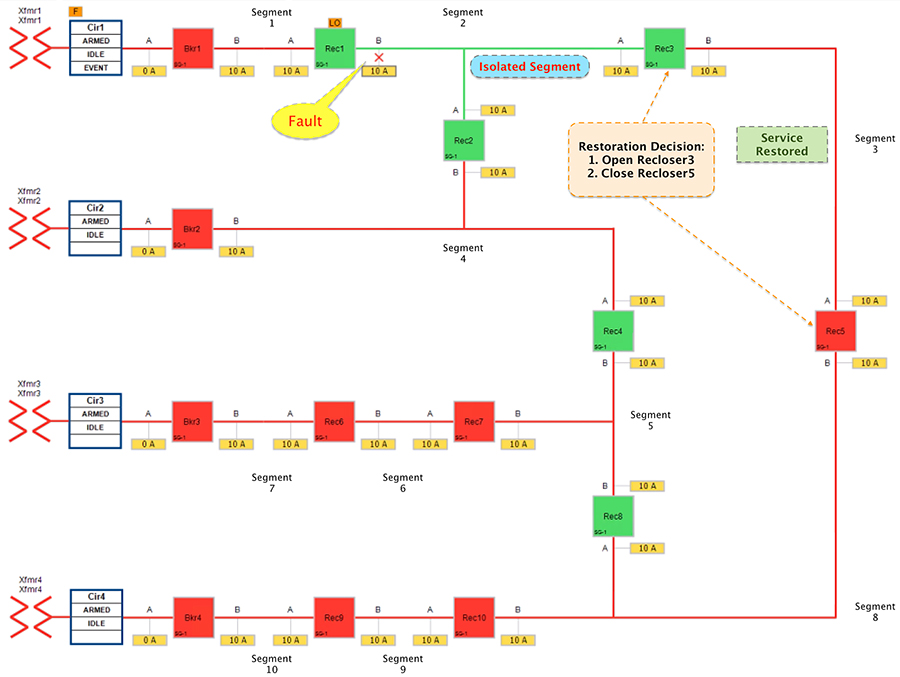

The FLISR SEL DA controller response sequence is outlined below:

1.![]() To Change the state of the Recloser 3 located between segment 2 and 3 from Normally closed to Open

To Change the state of the Recloser 3 located between segment 2 and 3 from Normally closed to Open

2.![]() To change the state of the Recloser 5(located between segment 3 & 8) from Normally Open state to Closed state, provided transformer 4 doesn’t get overloaded to serve segment 3.

To change the state of the Recloser 5(located between segment 3 & 8) from Normally Open state to Closed state, provided transformer 4 doesn’t get overloaded to serve segment 3.

This results in energizing segment 3 with power source from transformer4 feeder.

Figure 16 Urban Topology - Fault with Lockout - Restored State

The results to customers is as follows.

■![]() Customers in non-faulty region in segment 3 would have power service restored with the help of FLISR.

Customers in non-faulty region in segment 3 would have power service restored with the help of FLISR.

■![]() Due to Fault isolation, power outage is restricted to Customers in the faulty (and isolated) region in segment 2.

Due to Fault isolation, power outage is restricted to Customers in the faulty (and isolated) region in segment 2.

■![]() Fault Location Identification - identified in segment2.

Fault Location Identification - identified in segment2.

■![]() Service Restoration – Restoring the power to non-faulty segment (segment 3).

Service Restoration – Restoring the power to non-faulty segment (segment 3).

■![]() Isolation - Fault has been restricted to affected customers in segment 2 alone.

Isolation - Fault has been restricted to affected customers in segment 2 alone.

FLISR Fault scenario – Open Phase

An Open Phase fault applies to three-phase circuits, where one of the line voltage is lost. One cause could be due to a bad or loose street pole line jumper that interrupts the line. The loss of voltage will trigger a DNP3/IP unsolicited message to the DA Controller. The DA controller then initiates the necessary action, similar to FLISR Fault scenario - Fault with Lockout.

Figure 17 FLISR Urban Topology – Open Phase - Normal State

Figure 17 portrays the normal state of the FLISR Urban topology. Take note of segment 8. Reclosers corresponding to this segment are Recloser 5, Recloser 8 and Recloser 10. Of these three reclosers, Reclosers 5 & 8 are Normally Open. Recloser 10 is in Closed state, thus energizing segment 8 with the power source from Transformer 4.

The Open Phase Fault occurs in point B of recloser 10, as highlighted in below figure XX, with a red “X”.

Figure 18 FLISR Urban Topology – Open Phase - Fault State

Figure 18 portrays the fault state of the FLISR Urban topology. When fault occurred in segment 8 customers experience loss of power. The participating reclosers report the change of state using DNP3/IP Unsolicited Response message to the FLISR DA controller located in the control center.

The DA controller sends DNP3/IP Class0123 polling from all the related reclosers in the FLISR Urban topology to get a holistic view of the topology status. The set of related SEL devices includes devices in the affected feeder section(s) and any adjacent sections connected with a normally open point.

Note: In comparison to the FLISR Fault in the Fault with Lockout scenario, while in fault state only customers in the faulty segment 8 experience loss of power. All the other segments have power.

Segment 8 is chosen to illustrate the following point. In cases where no other segment is affected, other than the faulty segment, the FLISR restoration action can be to do nothing.

Figure 19 FLISR Urban Topology – Open Phase - Restored State

■![]() The DA controller makes the decision to change the state of the Recloser10 from normally closed state to Open.

The DA controller makes the decision to change the state of the Recloser10 from normally closed state to Open.

■![]() Due to Fault isolation, power outage is restricted to customers in the faulty region in segment 8.

Due to Fault isolation, power outage is restricted to customers in the faulty region in segment 8.

■![]() No Customers in any other segment are affected.

No Customers in any other segment are affected.

■![]() Fault Location Identification - identified in segment8.

Fault Location Identification - identified in segment8.

■![]() Service Restoration – No FLISR restoration needed in this case. It is only the isolation of faulty segment.

Service Restoration – No FLISR restoration needed in this case. It is only the isolation of faulty segment.

■![]() Isolation - Fault has been restricted to affected customers in segment 8 alone.

Isolation - Fault has been restricted to affected customers in segment 8 alone.

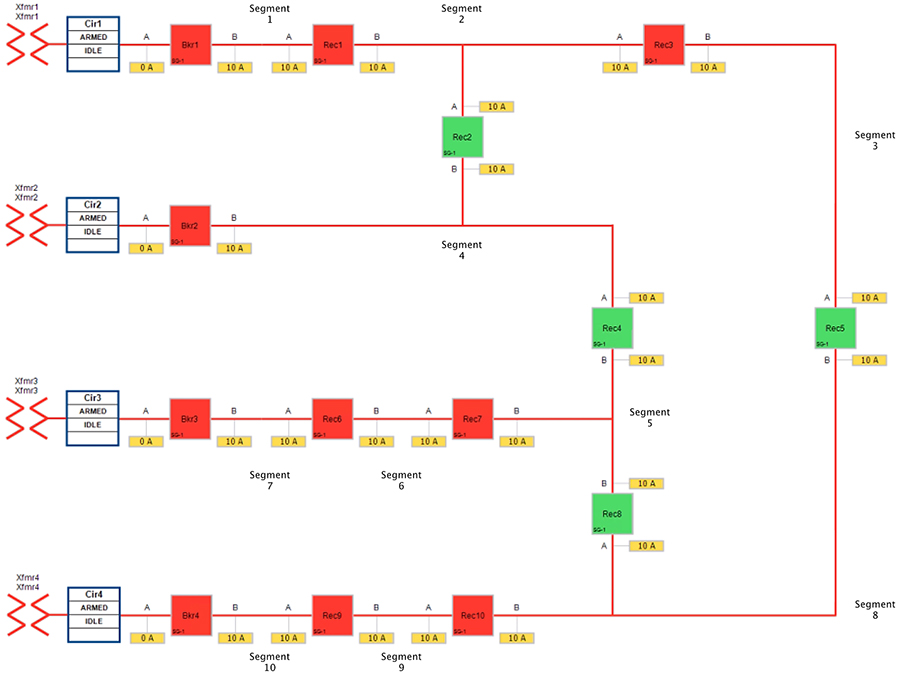

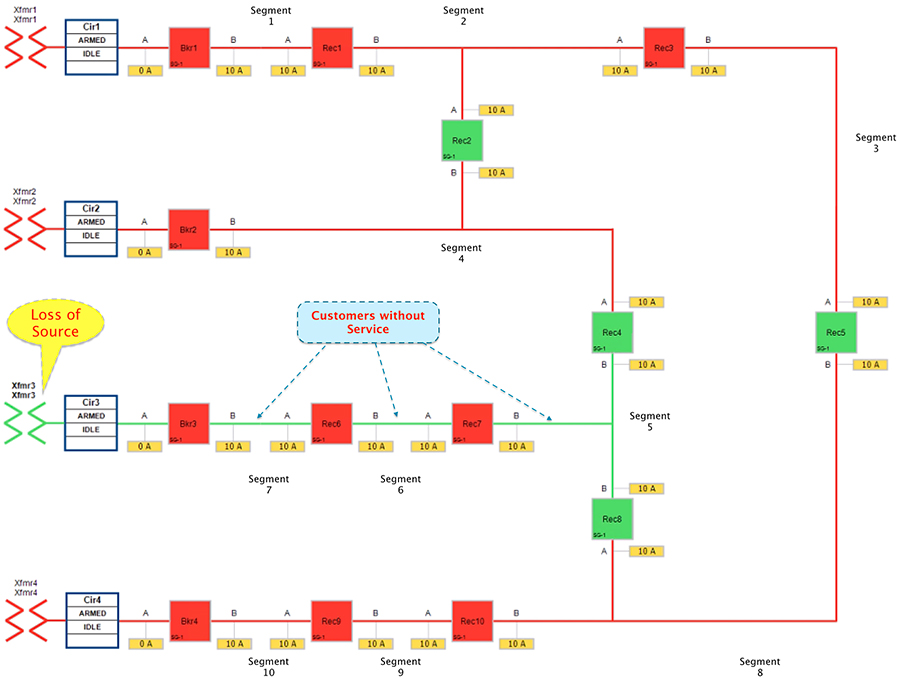

FLISR Fault scenario – Loss of Source

Loss of source applies to fault that occurs within the substation yard. For example, a bus fault at the substation could cause an outage for the entire feeder originating from that substation. In this scenario, the relay within the substation will notify the SEL DA controller of the loss of power which will then initiate the restoration process.

Figure 20 Urban Topology – Loss of Source - Normal State

Figure 20 portrays the normal state of the FLISR Urban topology. Notice the feeder line from transformer 3 which includes segment 7, segment 6 and segment 5.

■![]() Reclosers 4 & 8 are Normally Open. Circuit Breaker3, Reclosers 6 & 7 are Closed.

Reclosers 4 & 8 are Normally Open. Circuit Breaker3, Reclosers 6 & 7 are Closed.

■![]() All the three segments (5,6 and 7) derive a power source from transformer3.

All the three segments (5,6 and 7) derive a power source from transformer3.

The third feeder transformer was taken out of service to simulate a loss of power. The substation feeder breaker (Brk3) tripped and all the downstream customers lost power.

Figure 21 Urban Topology – Loss of Source - Fault State

■![]() Reclosers 4 & 8 are still in Normally Open state.

Reclosers 4 & 8 are still in Normally Open state.

■![]() Circuit Breaker3, Reclosers 6 & 7 are in Closed state.

Circuit Breaker3, Reclosers 6 & 7 are in Closed state.

■![]() All the three segments (5,6 and 7) experiences loss of power, as transformer3 is out of service.

All the three segments (5,6 and 7) experiences loss of power, as transformer3 is out of service.

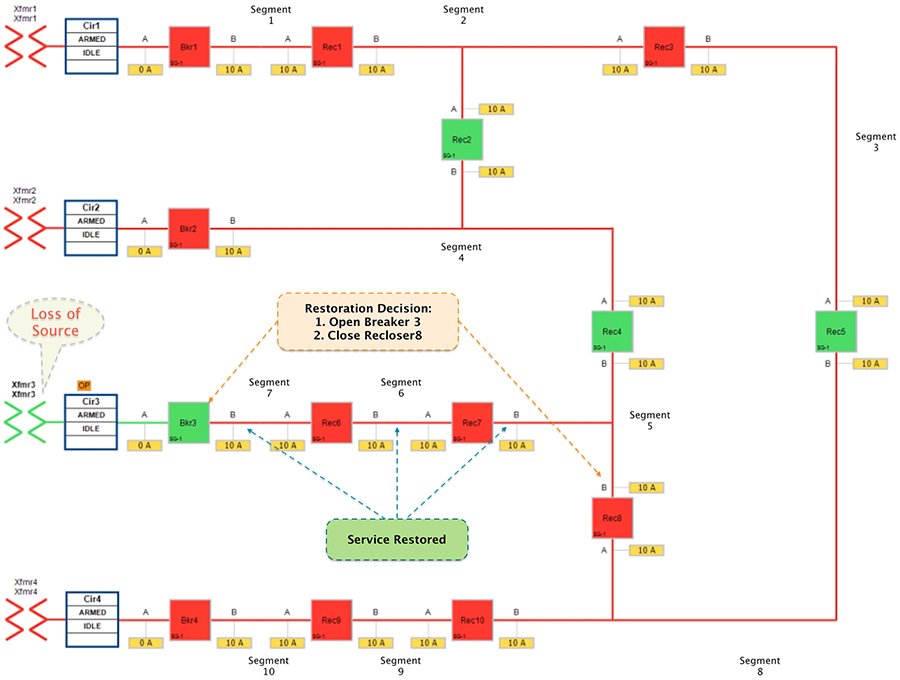

The circuit breaker and recloser devices sends DNP3/IP unsolicited message (about the loss of power) upstream to the SEL DA controller device located in the control center. The SEL DA controller performs DNP3/IP Class0123 polling from all the related reclosers in the FLISR Urban topology to get a holistic view of the current state of the topology.

The SEL FLISR DA Controller finds the most optimal way to restore the services and, in this case, it chooses to:

■![]() Change the Circuit breaker 3 from Closed state to Open state.

Change the Circuit breaker 3 from Closed state to Open state.

■![]() Change the Recloser8 state from Normal Open to Closed state.

Change the Recloser8 state from Normal Open to Closed state.

This would result in energizing segments (5,6 and 7) with power source from transformer4 feeder.

Figure 22 Urban Topology – Loss of Source - Restored State

■![]() Customers in all the affected segments (5,6 and 7) would have power service restored with the help of FLISR.

Customers in all the affected segments (5,6 and 7) would have power service restored with the help of FLISR.

■![]() Due to Fault isolation, transformer3 is taken out of the picture. Affected segments are now served by power from transformer4.

Due to Fault isolation, transformer3 is taken out of the picture. Affected segments are now served by power from transformer4.

■![]() Fault Location - identified fault in transformer3.

Fault Location - identified fault in transformer3.

■![]() Isolation - Fault has been restricted to transformer3 and it has been taken out of service.

Isolation - Fault has been restricted to transformer3 and it has been taken out of service.

■![]() Service Restoration – Restored the power to affected segments using alternate power source (transformer4).

Service Restoration – Restored the power to affected segments using alternate power source (transformer4).

Cisco SEL FLISR Use case – Rural Topology

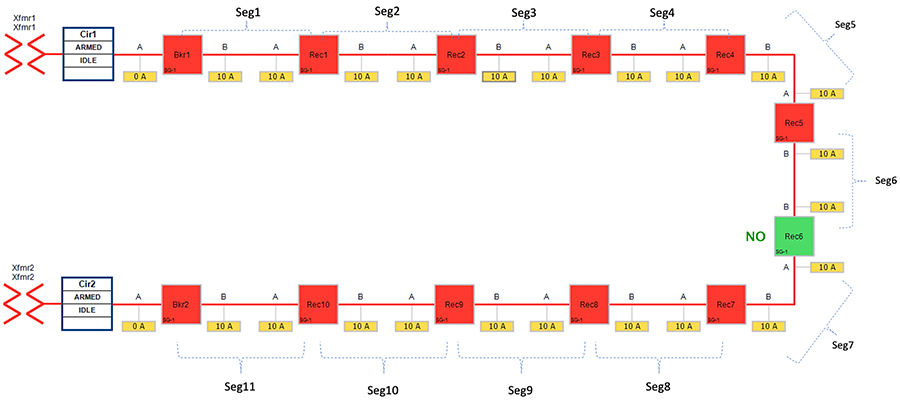

FLISR Topology – Rural Area - One-line diagram:

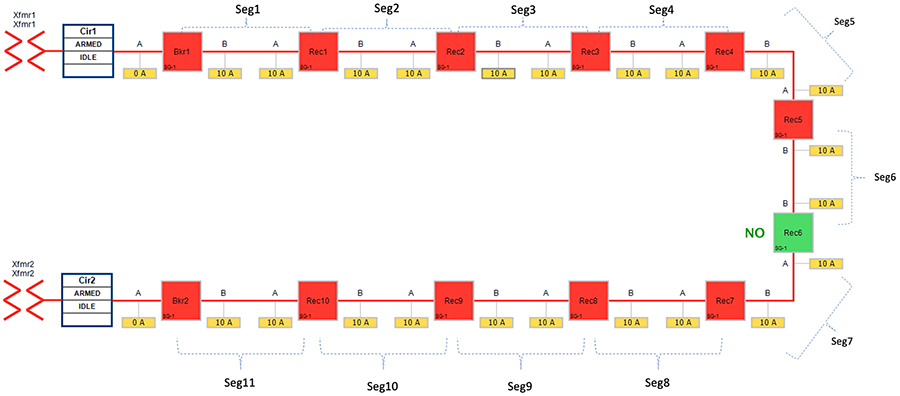

Figure 23 FLISR Topology (Rural Area) – One-line diagram

Figure 23 captures the one-line diagram of the FLISR topology in a Rural area. The topology has been divided into eleven segments (Seg1, Seg2,... Seg11). Red boxes represent Energized (Closed) power line, and green boxes represent unenergized (open) power line. Segments are energized as follows:

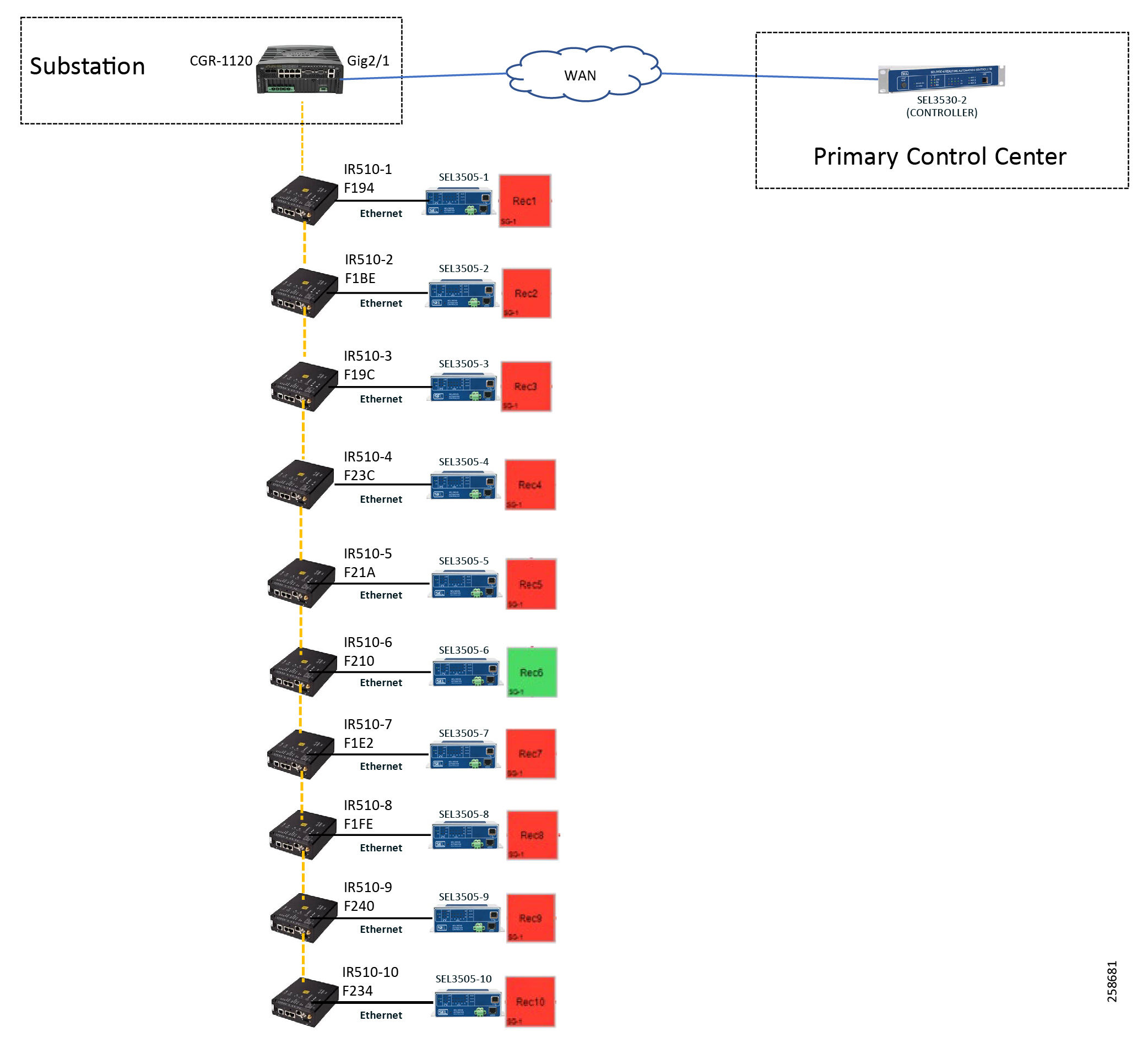

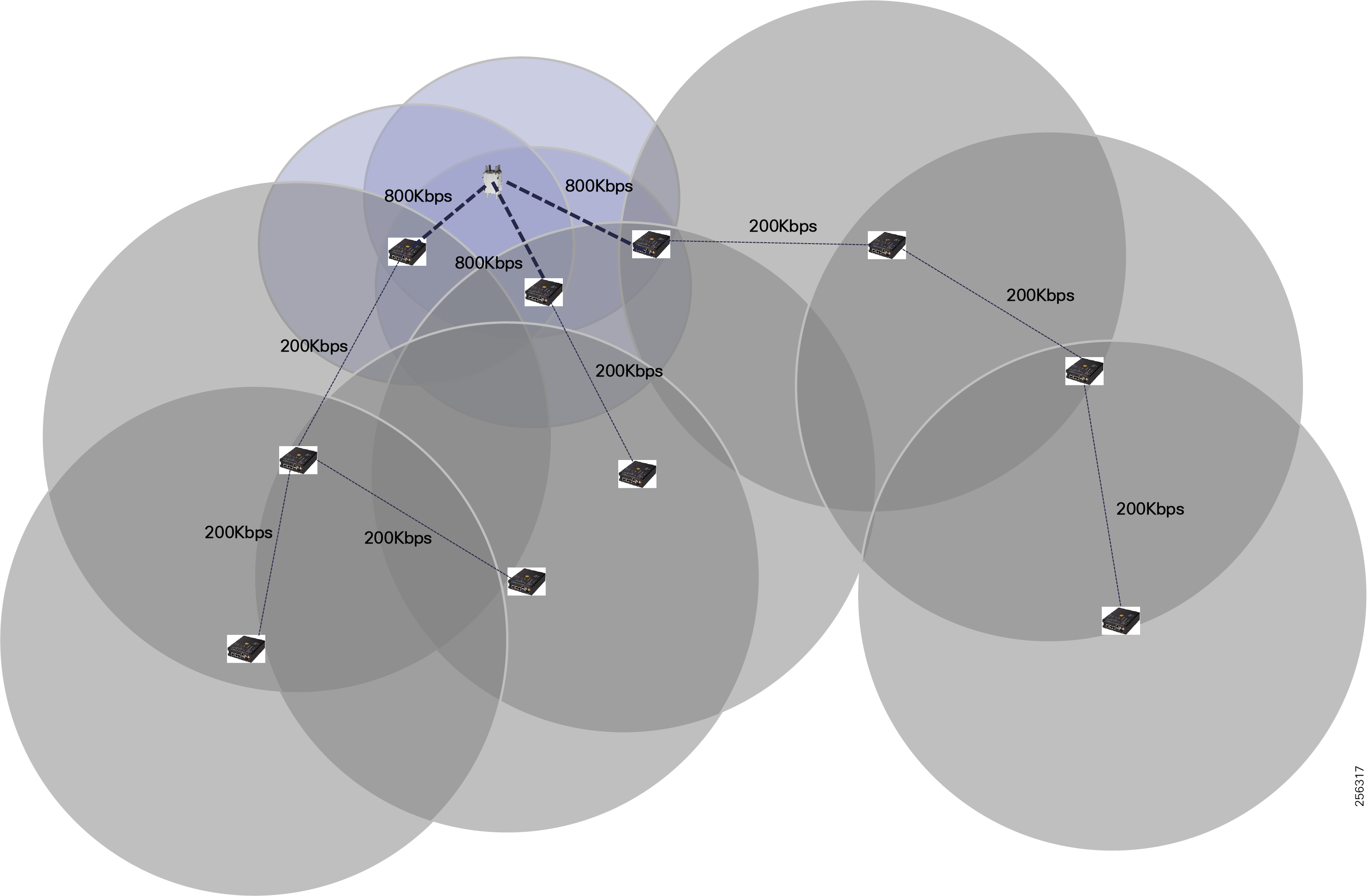

Rural FLISR Topology - SEL device to Cisco device mapping:

Figure 24 FLISR Topology (Rural Area) – SEL reclosers to Cisco device mapping diagram

Figure 24 captures the 1-to-1 mapping of SEL recloser devices to Cisco IR510 devices. The DA controller device is located in the Primary control center. CR Mesh is aggregated at the Field Area Network aggregator using CGR1000 series router which can be located in the substation. The communication between substation and control center can be over public/private WAN. The SEL device is positioned behind IR510 and connected using Ethernet.

The following table captures the Individual mapping of the SEL device with Cisco Mesh device and the mesh depth. The mapped pair of SEL/Cisco devices is located on the mesh. FLISR Controller (SEL3530-2) is located in control center. Other SEL devices, SEL 3505s, are located along the substation and feeder.

Table 2 FLISR Rural Topology Components

A few different types of FLISR Faults such as Fault with LockOut, Open Phase, Loss of Source, each of them in three different states (Normal, Fault, Restored) are discussed.

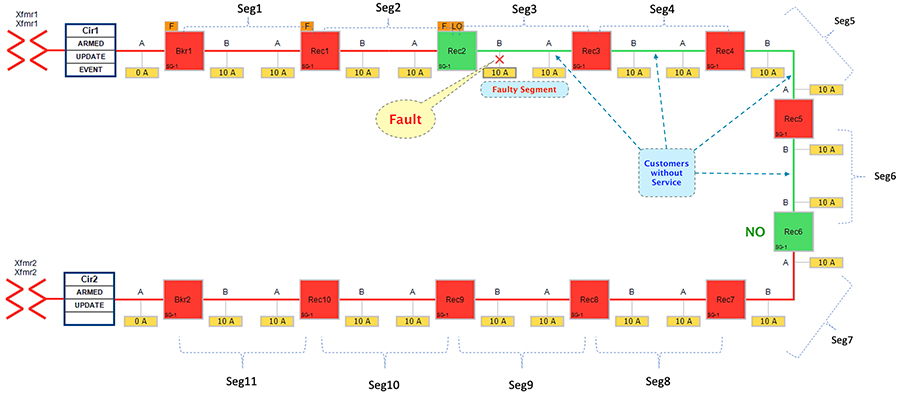

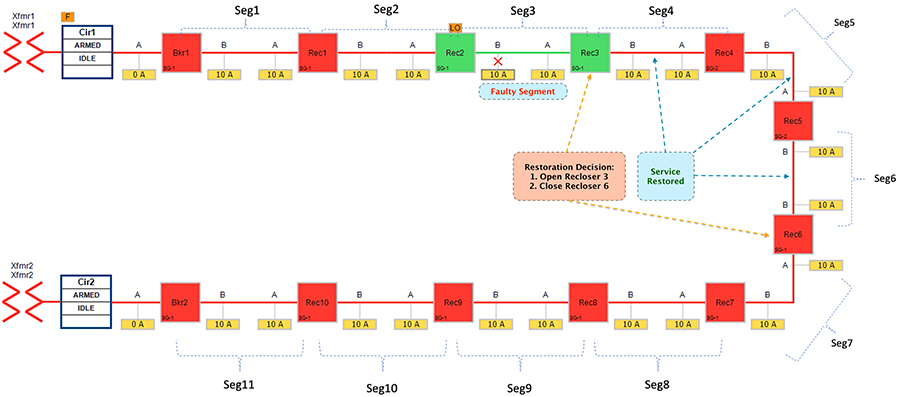

SEL FLISR Rural Topology - FLISR Fault scenario - Fault with LockOut

This failure scenario in Rural topology is very much similar to “SEL FLISR Urban Topology - FLISR Fault scenario - Fault with LockOut”. From the SEL FLISR Use case point of view, there is not much difference. Affected segments sends DNP3/IP unsolicited messages to SEL FLISR DA Controller located in the control center, which in turns performs Class0123 polling to know the holistic view of the FLISR topology. Later, SEL DA controller performs the required restoration operations. However, the underlying topology of the Cisco RF mesh is different. Rural topology uses hierarchical multi-hop topology.

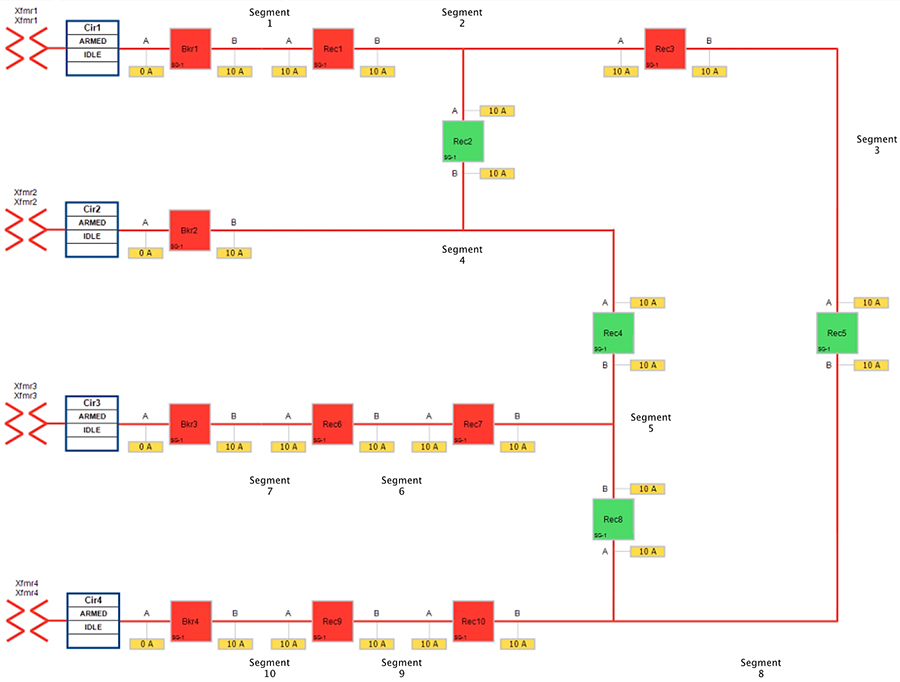

The Rural One-line topology in normal operational state looks like Figure 25 below.

Figure 25 Rural Topology - Fault with Lockout - Normal State

In above Figure 25:

■![]() Recloser 6 is in Normally Open (NO) state. Every other device is in closed state (the line is energized).

Recloser 6 is in Normally Open (NO) state. Every other device is in closed state (the line is energized).

■![]() Segments 1-6 are powered by feeder from transformer1

Segments 1-6 are powered by feeder from transformer1

■![]() Segments 7-11 are powered by feeder from transformer2

Segments 7-11 are powered by feeder from transformer2

When a fault gets introduced in Segment 3 (between Recloser2 and Recloser3), recloser 2 participating in that segment changes its state from Normally Closed state (NC) to Open state. This would result in loss of power to below segments (as shown in the figure below):

■![]() Segment 3 (between Recloser 2 and 3) - where the fault did occur

Segment 3 (between Recloser 2 and 3) - where the fault did occur

■![]() Segments 4-6 (between Recloser 3 and 6) - where the fault did not occur.

Segments 4-6 (between Recloser 3 and 6) - where the fault did not occur.

Figure 26 Rural Topology - Fault with Lockout - Fault State

In this state, customers in the faulty region in segment 3 is experiencing loss of power. Along with them, customers in non-faulty regions belonging to segments 4-6 are also experiencing the loss of power.

The moment fault occurred, recloser2 participating in the segment (3) changes its state from Closed to Open. Meanwhile, following reclosers would send DNP3/IP unsolicited messages upstream to the SEL FLISR DA controller:

■![]() Recloser2 – conveys the fault.

Recloser2 – conveys the fault.

■![]() Reclosers (3-6) – conveys the loss of power.

Reclosers (3-6) – conveys the loss of power.

The SEL FLISR DA controller then performs Class0123 polling on every related recloser to get holistic view of the topology before making any restoration decision.

Figure 27 Rural Topology - Fault with Lockout - Restored State

The FLISR SEL DA controller decides:

1.![]() To change the state of Recloser 3 (located between segment 3 & 4) from normally closed state to Open state.

To change the state of Recloser 3 (located between segment 3 & 4) from normally closed state to Open state.

2.![]() To change the state of Recloser 6 (located between segment 6 & 7) from Normally Open state to Closed state. This would result in energizing segments (4-6) with power source from transformer2 feeder.

To change the state of Recloser 6 (located between segment 6 & 7) from Normally Open state to Closed state. This would result in energizing segments (4-6) with power source from transformer2 feeder.

■![]() Customers in non-faulty region in segments 4-6 would have power service restored with the help of FLISR.

Customers in non-faulty region in segments 4-6 would have power service restored with the help of FLISR.

■![]() Due to Fault isolation, power outage is restricted to Customers in the faulty region in segment 3.

Due to Fault isolation, power outage is restricted to Customers in the faulty region in segment 3.

■![]() Fault Location Identification - identified in segment3.

Fault Location Identification - identified in segment3.

■![]() Service Restoration – Restoring the power to non-faulty segment (segment 4-6).

Service Restoration – Restoring the power to non-faulty segment (segment 4-6).

■![]() Isolation - Fault has been restricted to affected customers in segment 3 alone.

Isolation - Fault has been restricted to affected customers in segment 3 alone.

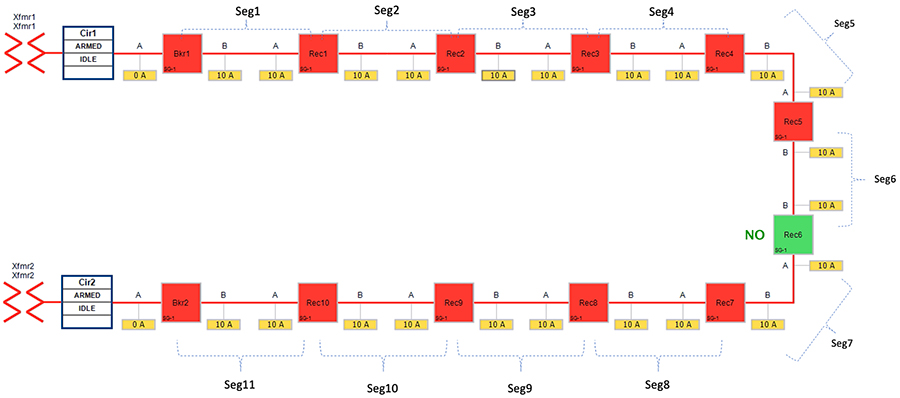

SEL FLISR Rural Topology - FLISR Fault scenario – Open Phase

Open Phase fault applies to three phase circuits, where one of the line voltage is lost. One cause could be due to a bad or loose street pole line jumper that interrupts the line. The loss of voltage will again trigger a DNP3/IP unsolicited message to the DA Controller. DA controller then initiates the necessary action, similar to FLISR Fault scenario - Fault with Lockout.

Figure 28 FLISR Rural Topology – Open Phase - Normal State

Figure 28 portrays the normal state of the FLISR Rural topology. The emphasis is placed on segment 8 and segment 7. Reclosers corresponding to these segments are Recloser 6,7 and 8.

In above Figure 28:

■![]() Recloser 6 is in Normally Open state. Every other device is in closed state (means, line is energized).

Recloser 6 is in Normally Open state. Every other device is in closed state (means, line is energized).

■![]() Segments 1-6 are powered by feeder from transformer1

Segments 1-6 are powered by feeder from transformer1

■![]() Segments 7-11 are powered by feeder from transformer2

Segments 7-11 are powered by feeder from transformer2

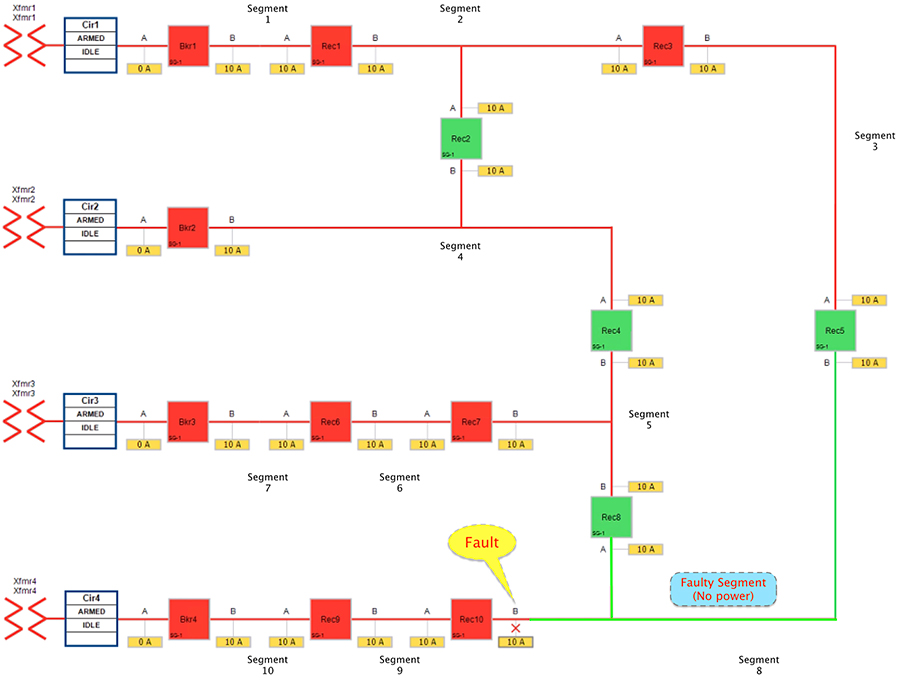

The Open Phase Fault occurs in point A of recloser 7, as highlighted in below figure XX, with a red “X”.

Figure 29 FLISR Rural Topology – Open Phase - Fault State

Figure 29 portrays the fault state of the FLISR Rural topology, when fault occurred on segment 8.

This would result in loss of power to below segments:

■![]() Segment 8 (between Recloser 8 and 7) - where the fault did occur

Segment 8 (between Recloser 8 and 7) - where the fault did occur

■![]() Segment 7 (between Recloser 7 and 6) - where the fault did not occur.

Segment 7 (between Recloser 7 and 6) - where the fault did not occur.

In this state, customers in the faulty segment (8) is experiencing loss of power. Along with them, customers in non-faulty segment (7) is also experiencing the loss of power. Before the fault occurred, both of these segments (7 & 8) used to receive power from transformer2.

The moment fault occurred on segment 8, the participating reclosers would be sending DNP3/IP unsolicited messages upstream to the SEL FLISR DA Controller located in the control center. The SEL FLISR DA controller then performs Class0123 polling on every related recloser in the FLISR Rural topology, to get a holistic view of the topology before making any restoration decision.

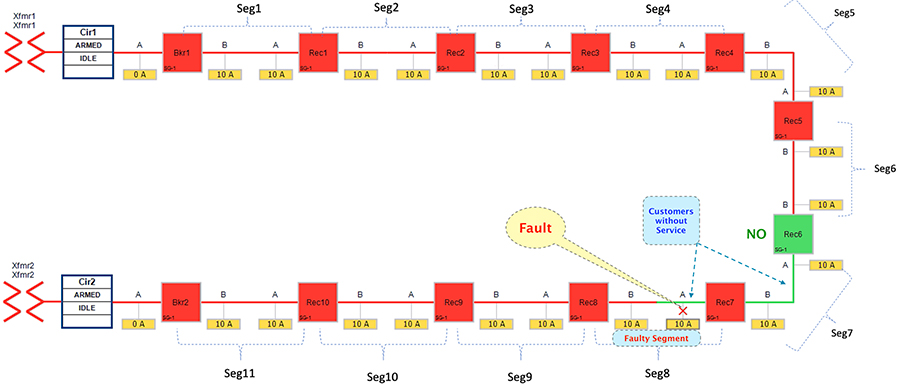

Figure 30 FLISR Rural Topology – Open Phase - Restored State

The FLISR SEL DA controller decides:

1.![]() To change the state of the Recloser 8 (located between segment 9 & 8) from Closed state to Open state

To change the state of the Recloser 8 (located between segment 9 & 8) from Closed state to Open state

2.![]() To change the state of the Recloser 7 (located between segment 8 & 7) from Closed state to Open state

To change the state of the Recloser 7 (located between segment 8 & 7) from Closed state to Open state

3.![]() To change the state of Recloser 6 (located between segment 6 & 7) from Normally Open state to Closed state. This would result in energizing segment (7) with power source from transformer1 feeder.

To change the state of Recloser 6 (located between segment 6 & 7) from Normally Open state to Closed state. This would result in energizing segment (7) with power source from transformer1 feeder.

■![]() Customers in non-faulty region in segments 7 would have power service restored with the help of FLISR.

Customers in non-faulty region in segments 7 would have power service restored with the help of FLISR.

■![]() Due to Fault isolation, power outage is restricted to Customers in the faulty region (segment 8).

Due to Fault isolation, power outage is restricted to Customers in the faulty region (segment 8).

■![]() Fault Location Identification - identified in segment8.

Fault Location Identification - identified in segment8.

■![]() Service Restoration – Restoring the power to non-faulty segment (segment 7).

Service Restoration – Restoring the power to non-faulty segment (segment 7).

■![]() Isolation - Fault has been restricted to affected customers in segment 8 alone.

Isolation - Fault has been restricted to affected customers in segment 8 alone.

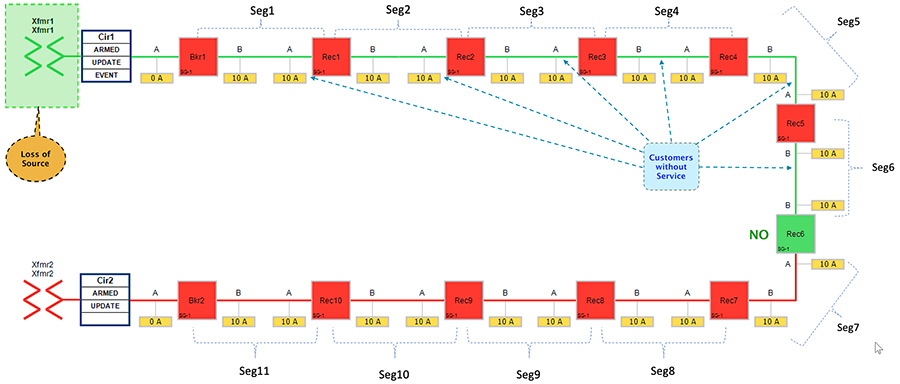

SEL FLISR Rural Topology - FLISR Fault scenario – Loss of Source:

Loss of source applies to fault that occur within the substation yard. For example, a transformer going bad (or) bus fault in the substation, that causes an outage for the entire feeder originating from that substation. In this scenario, the relay within the substation will notify the SEL DA controller of the loss of power which will then initiate the restoration process.

Figure 31 Rural Topology – Loss of Source - Normal State

Figure 31 portrays the normal state of the FLISR Rural topology. The emphasis is placed on entire feeder line from transformer 1 (which include segments 1-6) In normal operational state,

■![]() Recloser 6 is in Normally Open state. All other devices Circuit Breakers & Reclosers are in Closed state.

Recloser 6 is in Normally Open state. All other devices Circuit Breakers & Reclosers are in Closed state.

■![]() Segments 1-6 deriving power from transformer1

Segments 1-6 deriving power from transformer1

■![]() Segments 7-11 deriving power from transformer2

Segments 7-11 deriving power from transformer2

The first feeder transformer was taken out of service to simulate a loss of source. Once the transformer1 is taken out of service, all the downstream customers in segments (1-6) would experience loss of power.

Figure 32 Rural Topology – Loss of Source - Fault State

■![]() Segments 1-6 would experience loss of power, as transformer1 is out of service.

Segments 1-6 would experience loss of power, as transformer1 is out of service.

■![]() Reclosers 6 is still in Normally Open state.

Reclosers 6 is still in Normally Open state.

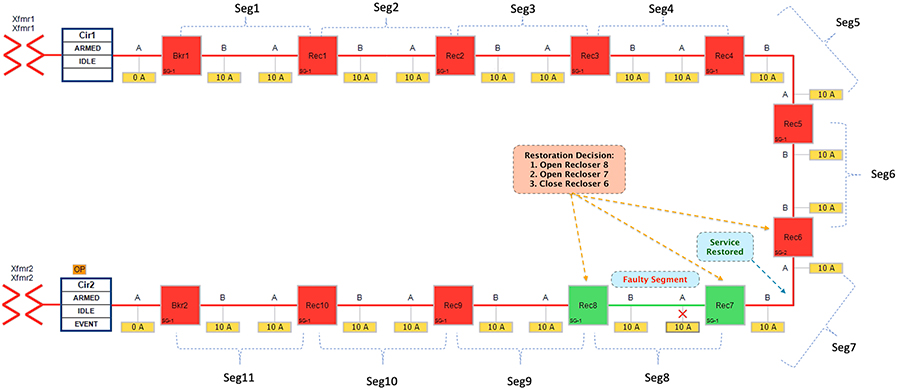

The circuit breaker and recloser devices sends DNP3/IP unsolicited message upstream to the SEL FLISR DA controller device located in the control center, about the loss of power in the feeder line. The SEL DA controller performs DNP3/IP Class0123 polling from all the related reclosers in the FLISR Rural topology to get a holistic view of the current state of the topology.

The SEL FLISR DA Controller finds the most optimal way to restore the services and, in this case, it chooses to:

■![]() Change the Circuit breaker 1 from Closed state to Open state.

Change the Circuit breaker 1 from Closed state to Open state.

■![]() Change the Recloser6 state from Normal Open state to Close state, provided the additional load will not cause an overload on transformer2.

Change the Recloser6 state from Normal Open state to Close state, provided the additional load will not cause an overload on transformer2.

■![]() This would result in energizing segments (1-6) with power source from transformer2 feeder.

This would result in energizing segments (1-6) with power source from transformer2 feeder.

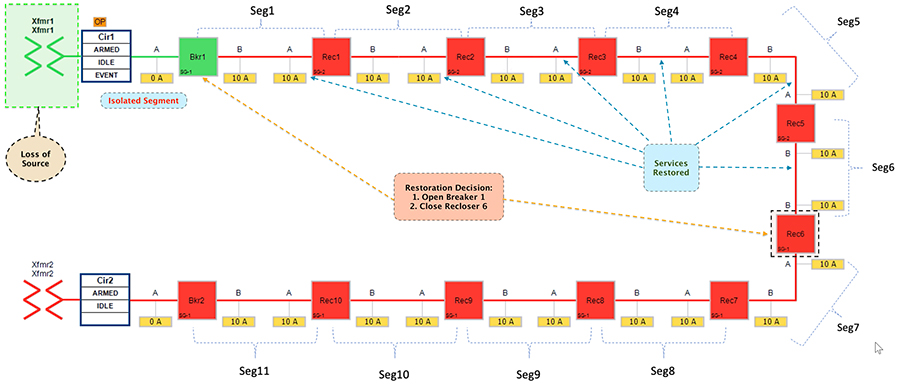

Figure 33 Rural Topology – Loss of Source - Restored State

■![]() Customers in all the affected segments (1-6) would have power service restored with the help of FLISR.

Customers in all the affected segments (1-6) would have power service restored with the help of FLISR.

■![]() Due to Fault isolation, transformer1 is isolated by opening Circuit Breaker1. Affected segments are now served by transformer2.

Due to Fault isolation, transformer1 is isolated by opening Circuit Breaker1. Affected segments are now served by transformer2.

■![]() Fault Location Identification - identified in transformer1.

Fault Location Identification - identified in transformer1.

■![]() Service Restoration – Restored the power to affected segments using alternate power source (transformer2).

Service Restoration – Restored the power to affected segments using alternate power source (transformer2).

■![]() Isolation - Fault has been restricted between transformer1 and breaker1.

Isolation - Fault has been restricted between transformer1 and breaker1.

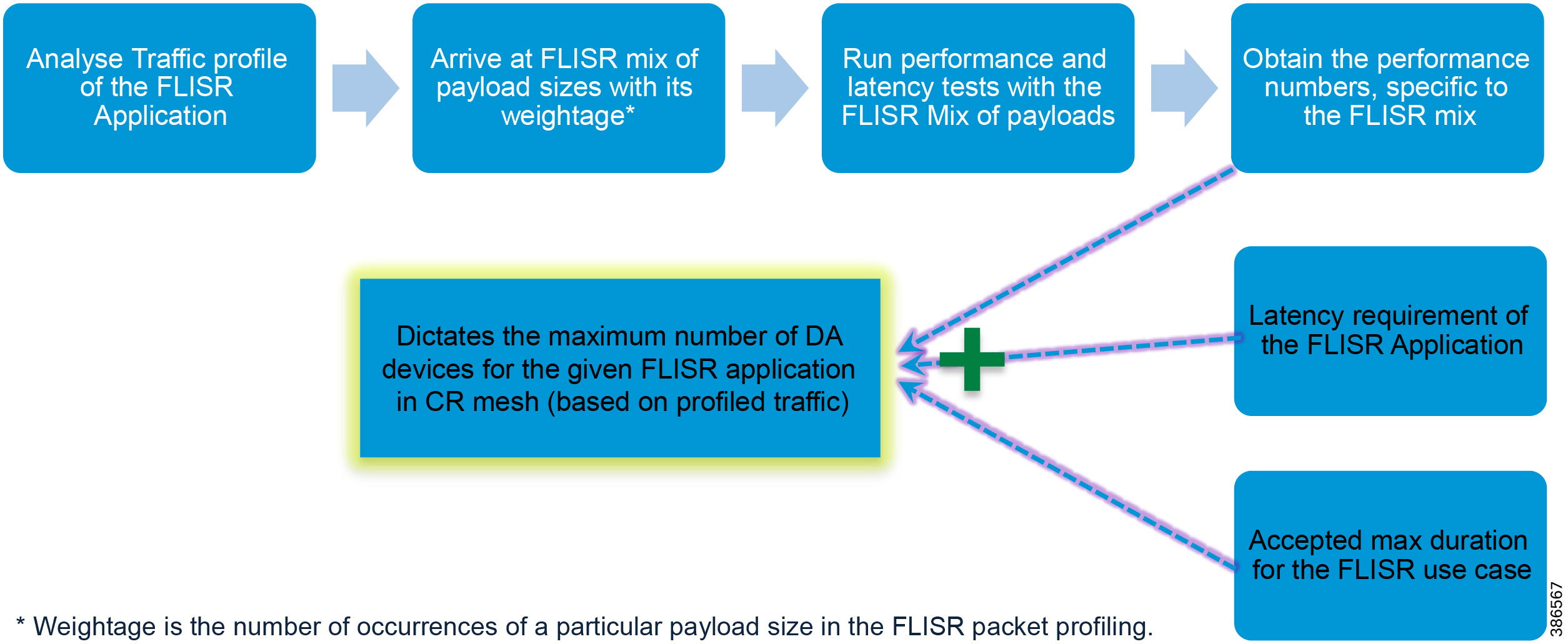

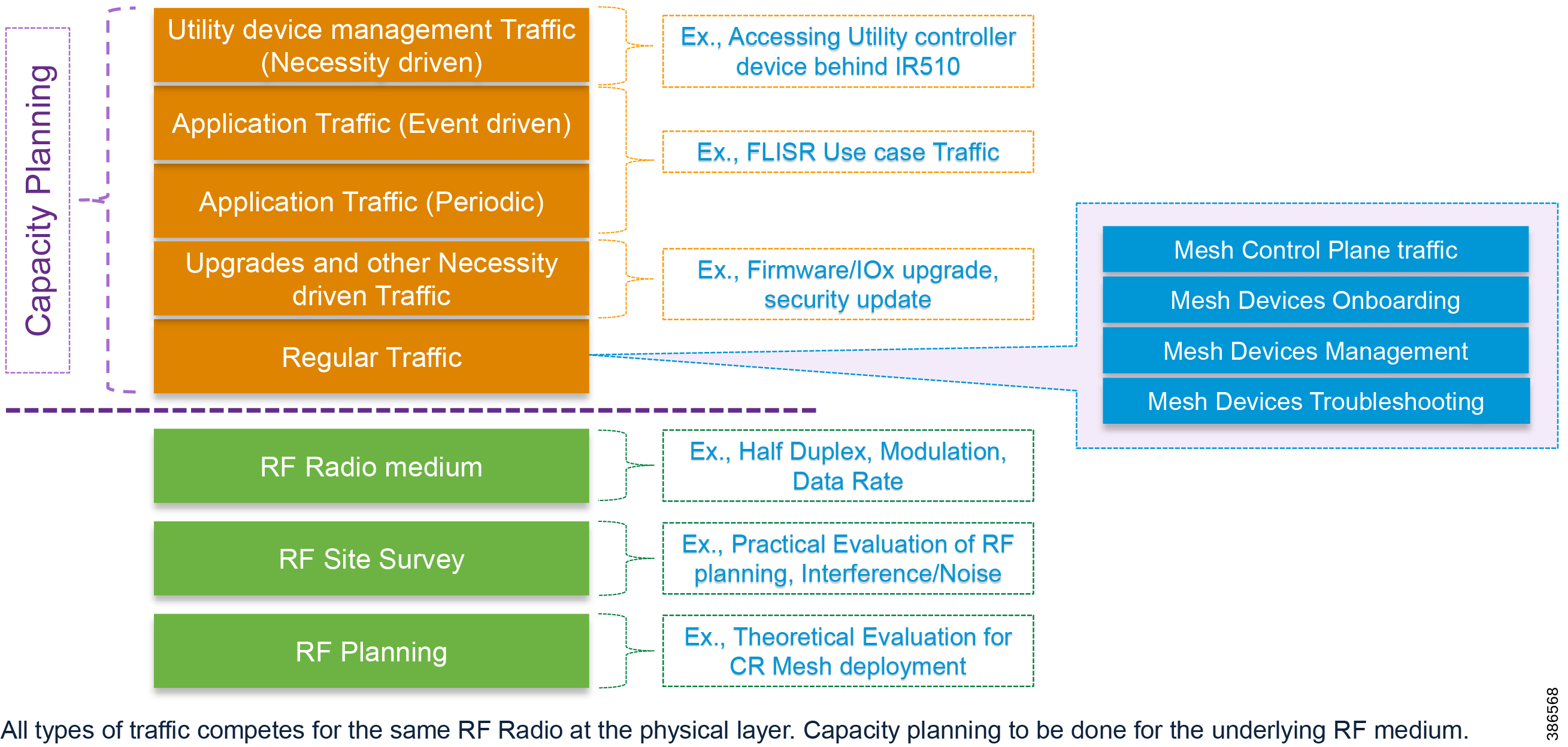

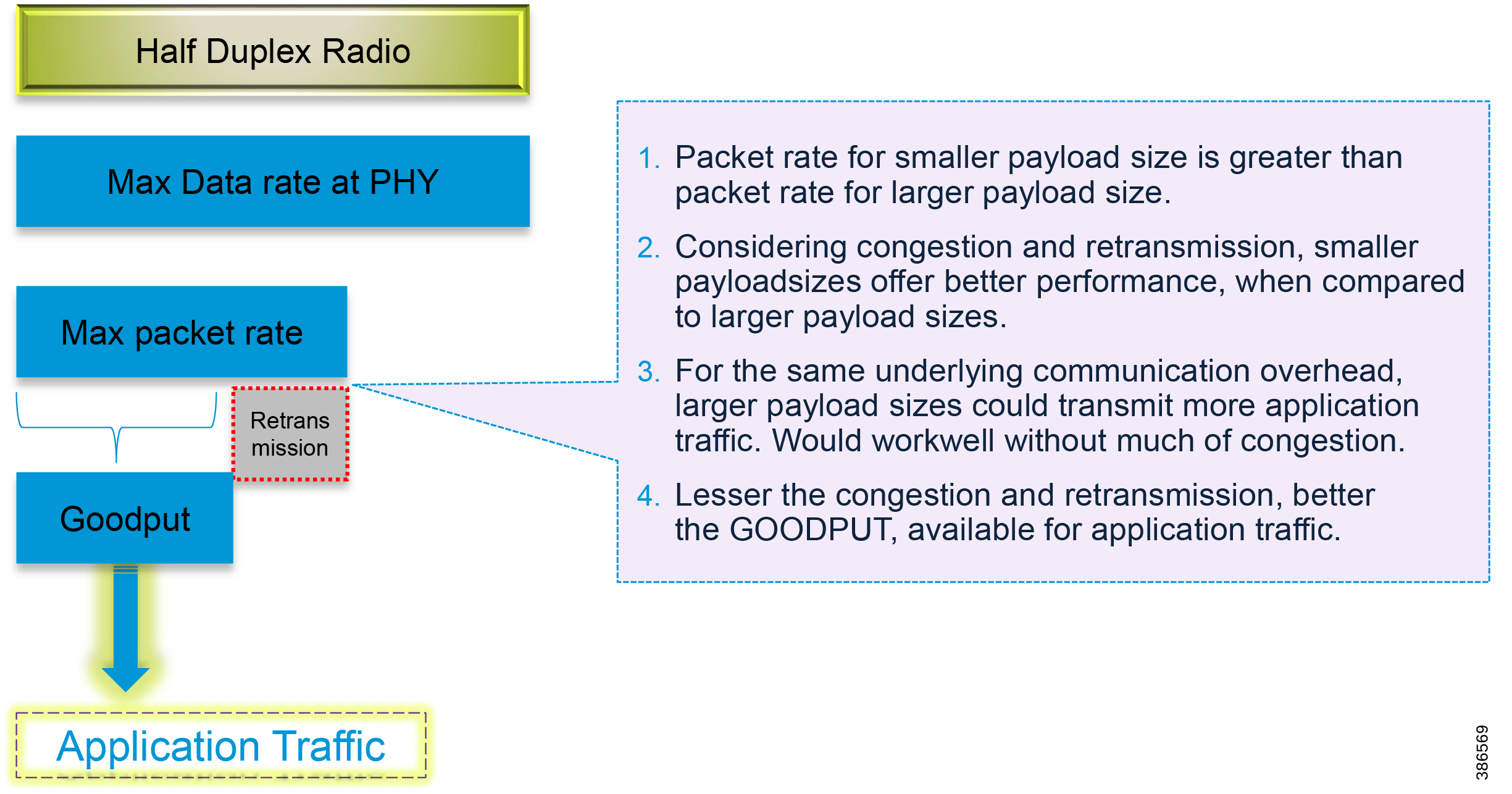

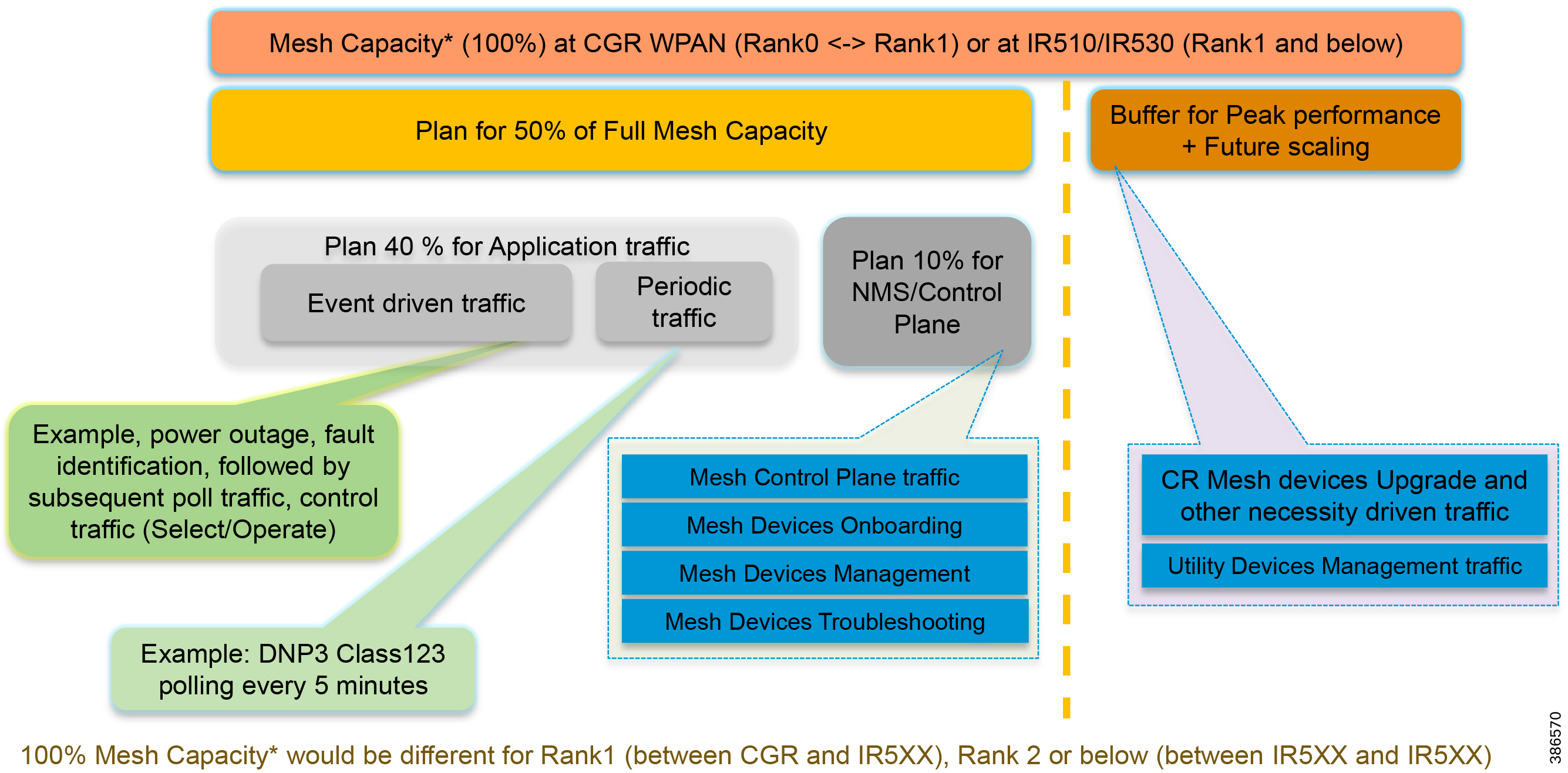

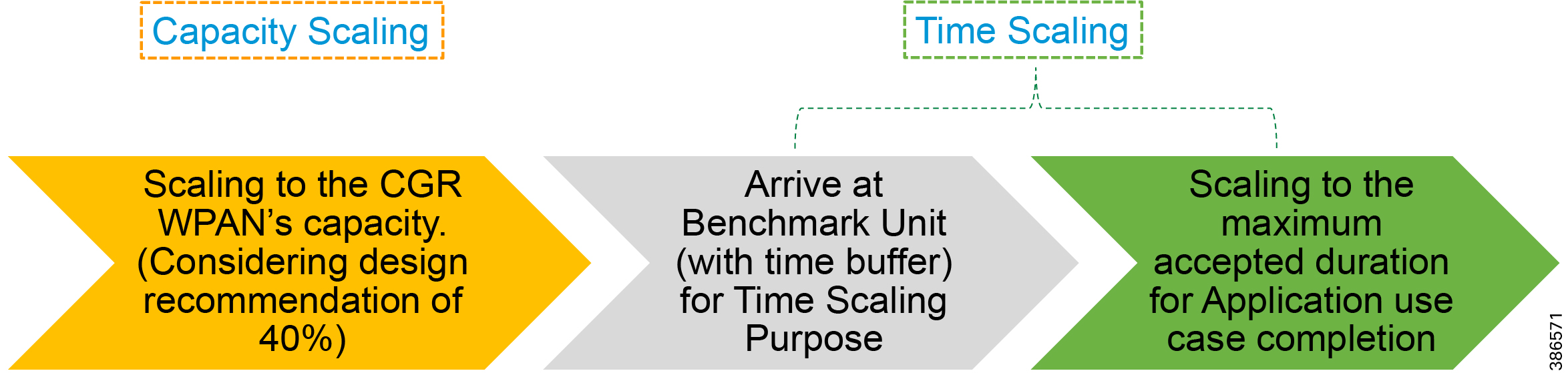

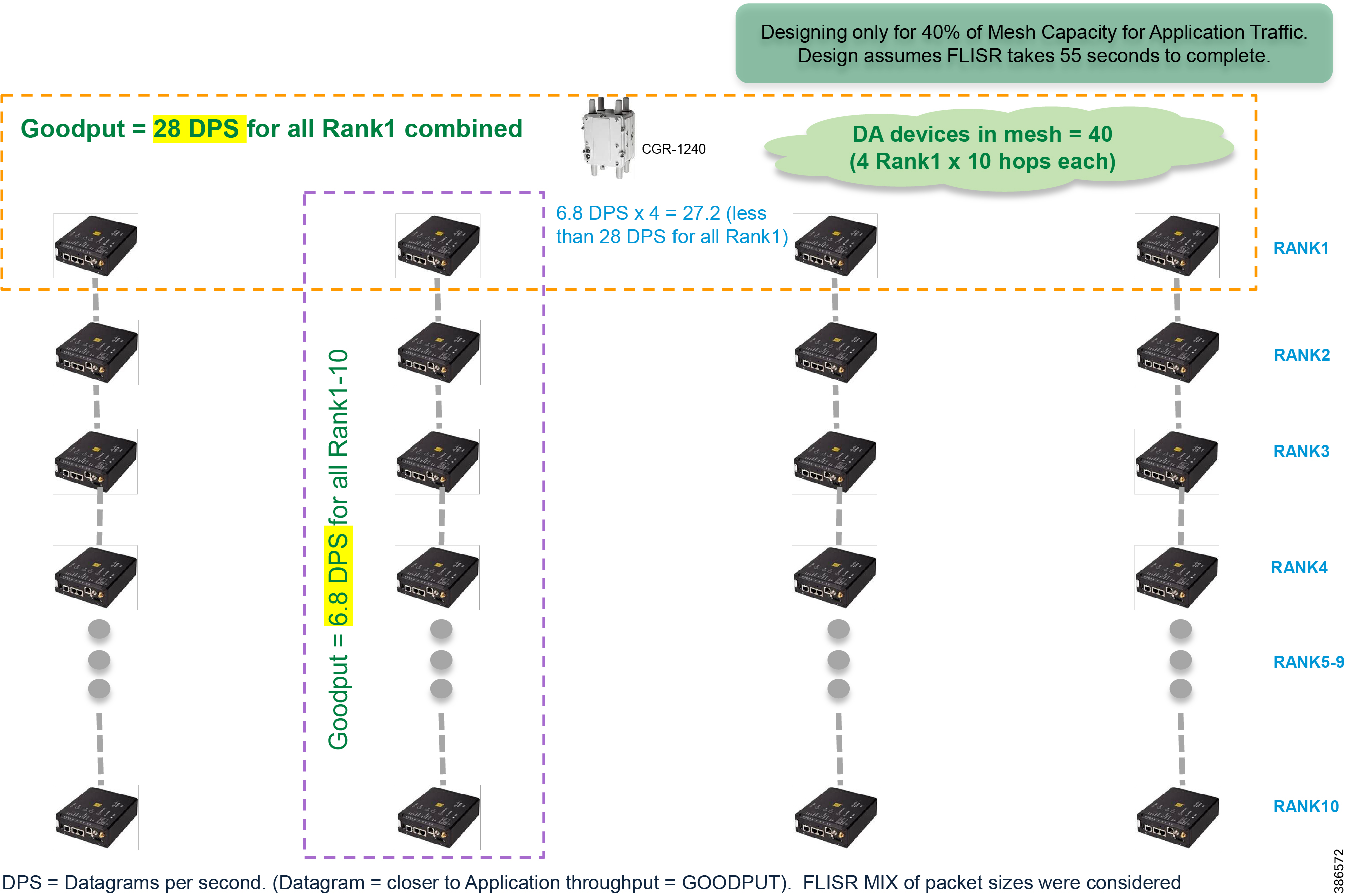

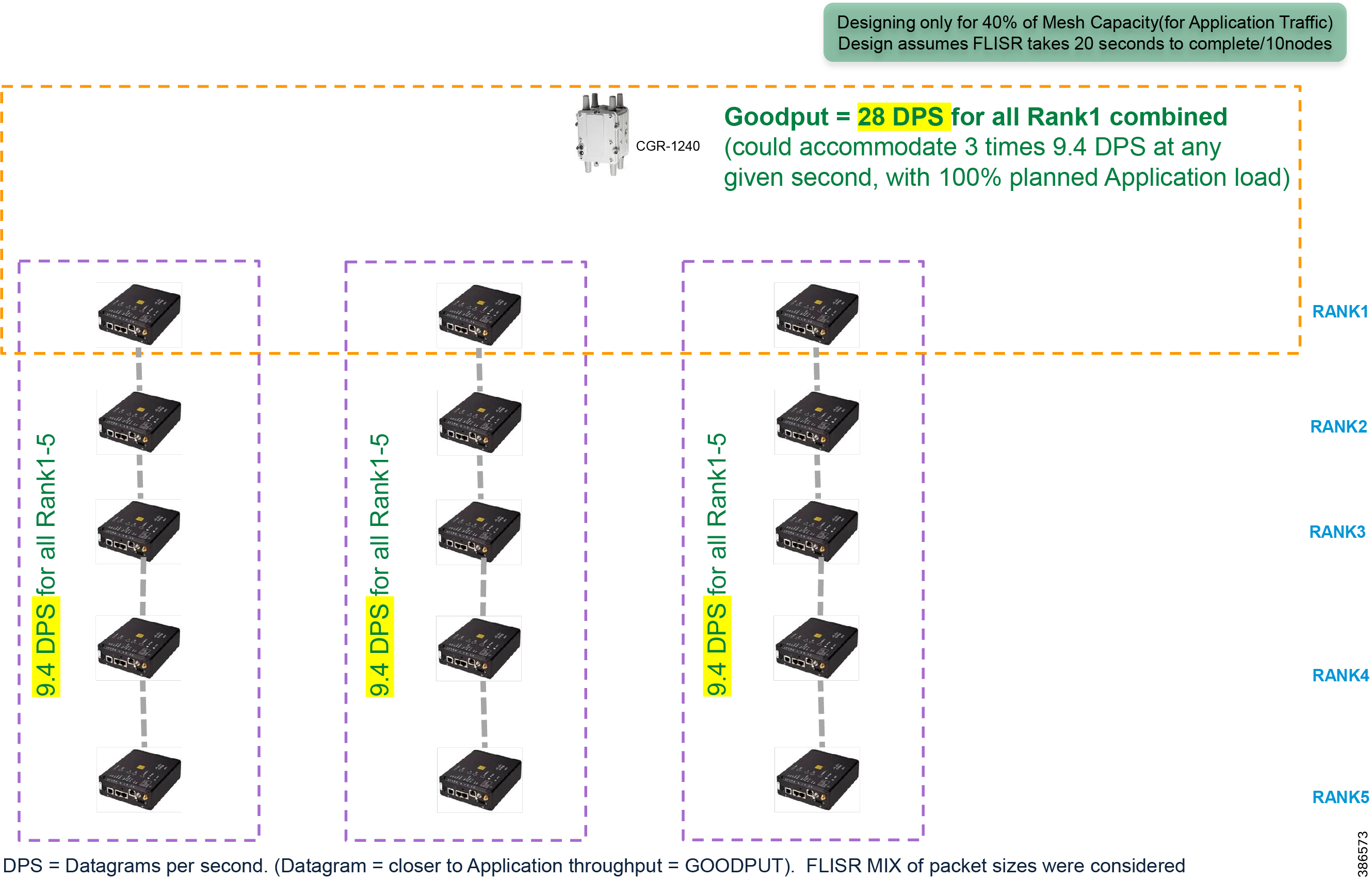

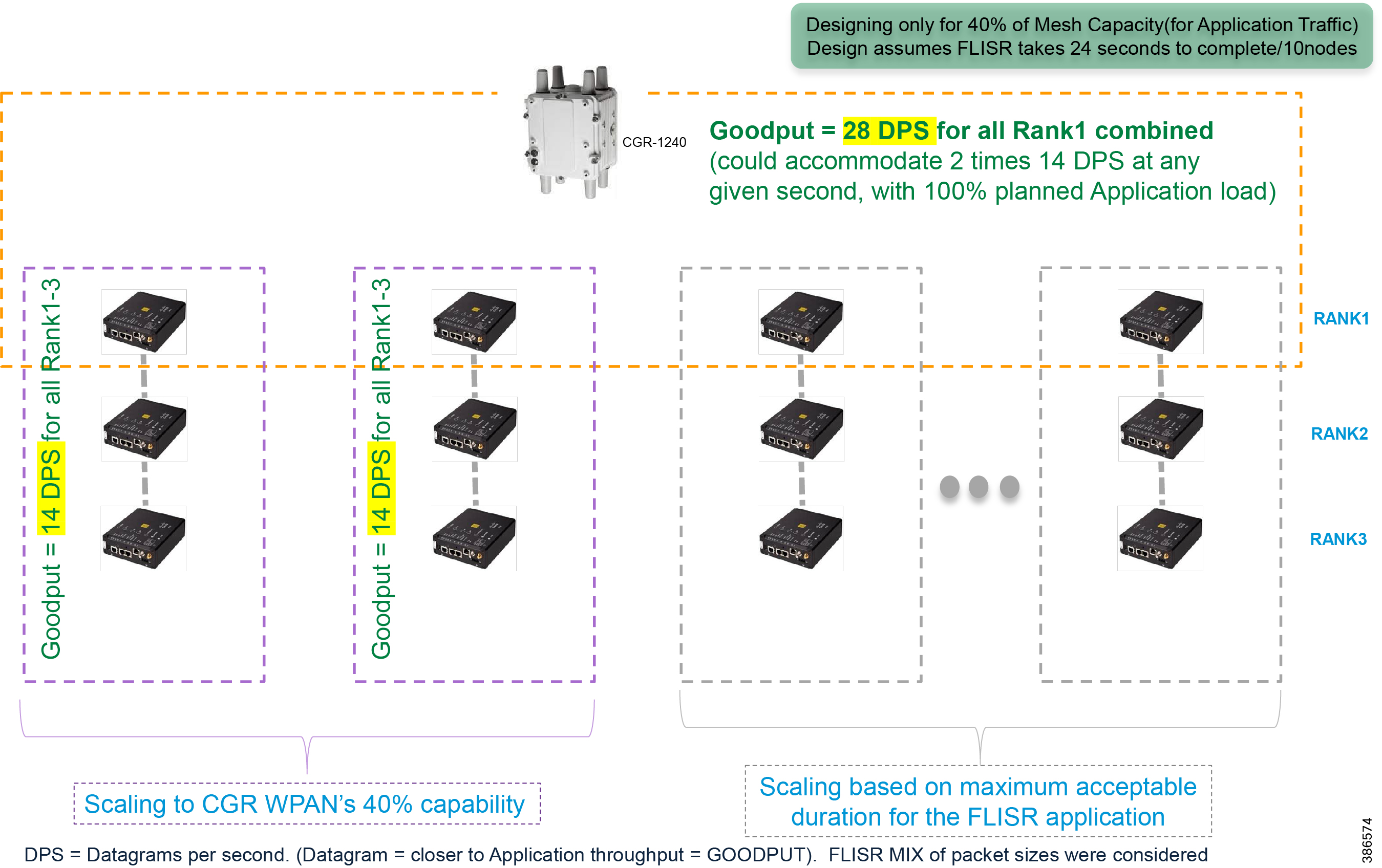

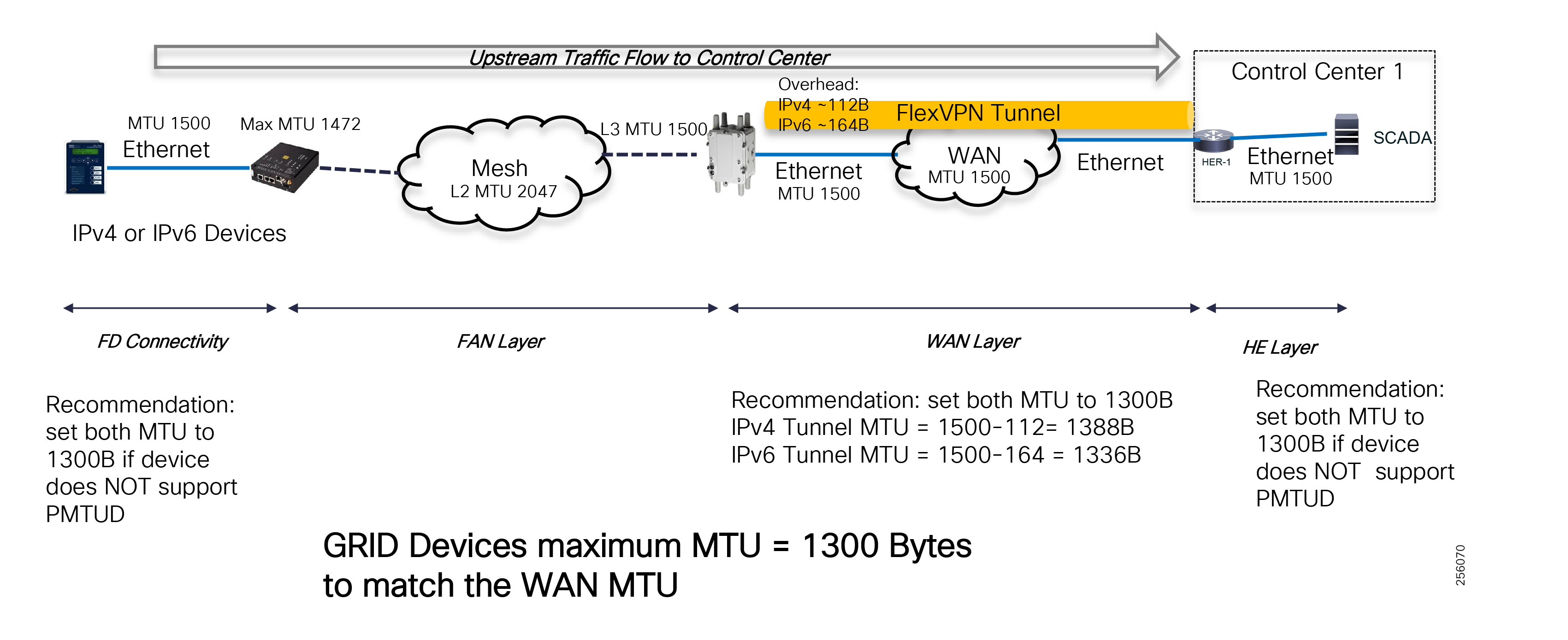

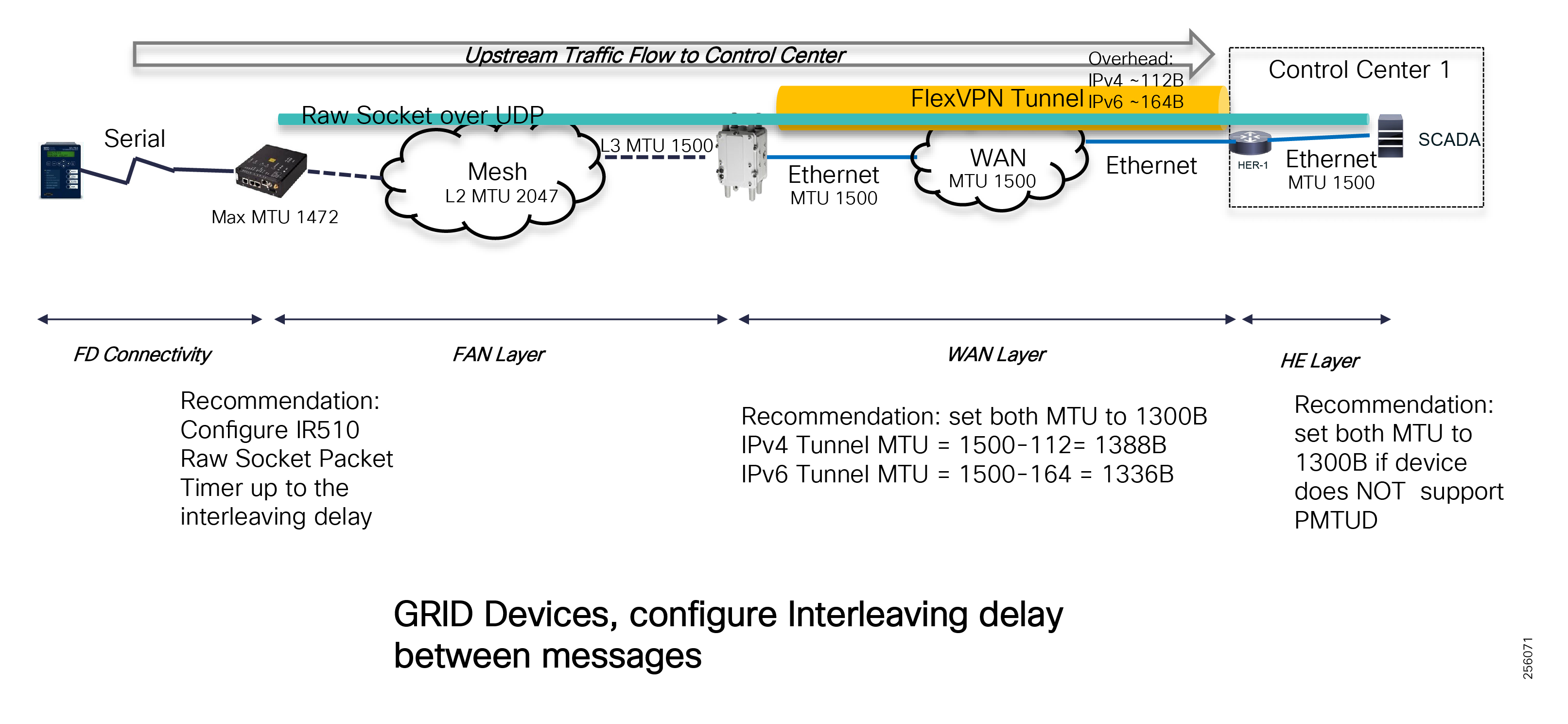

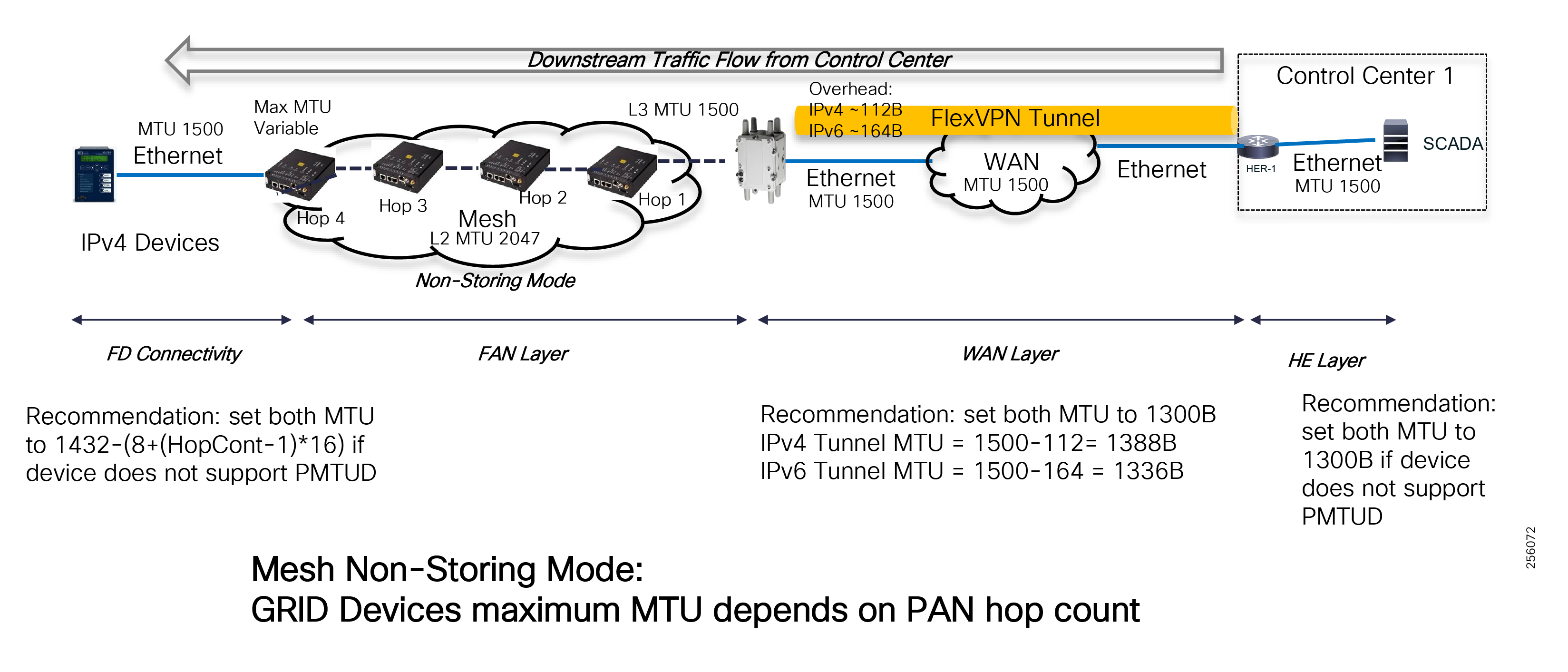

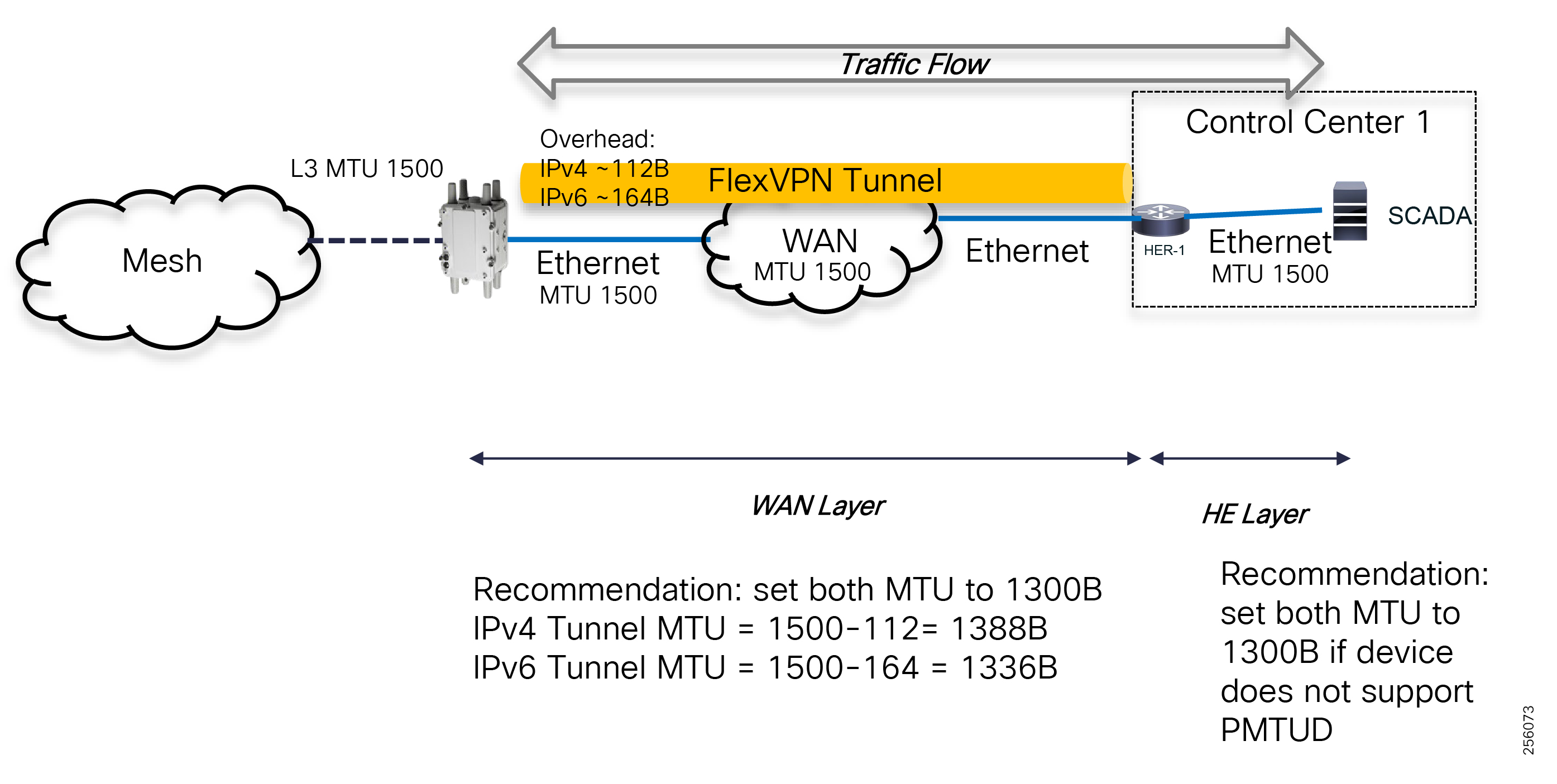

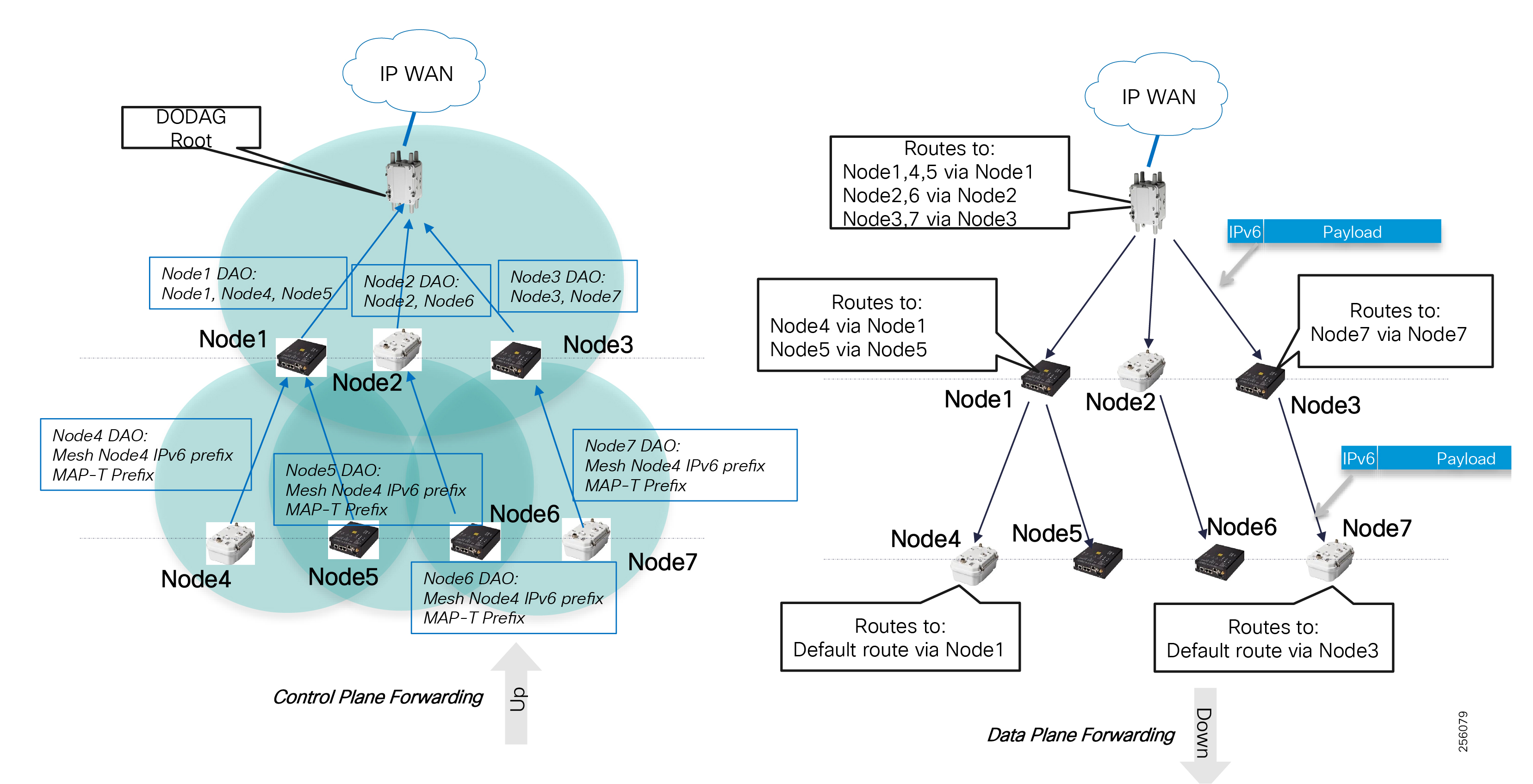

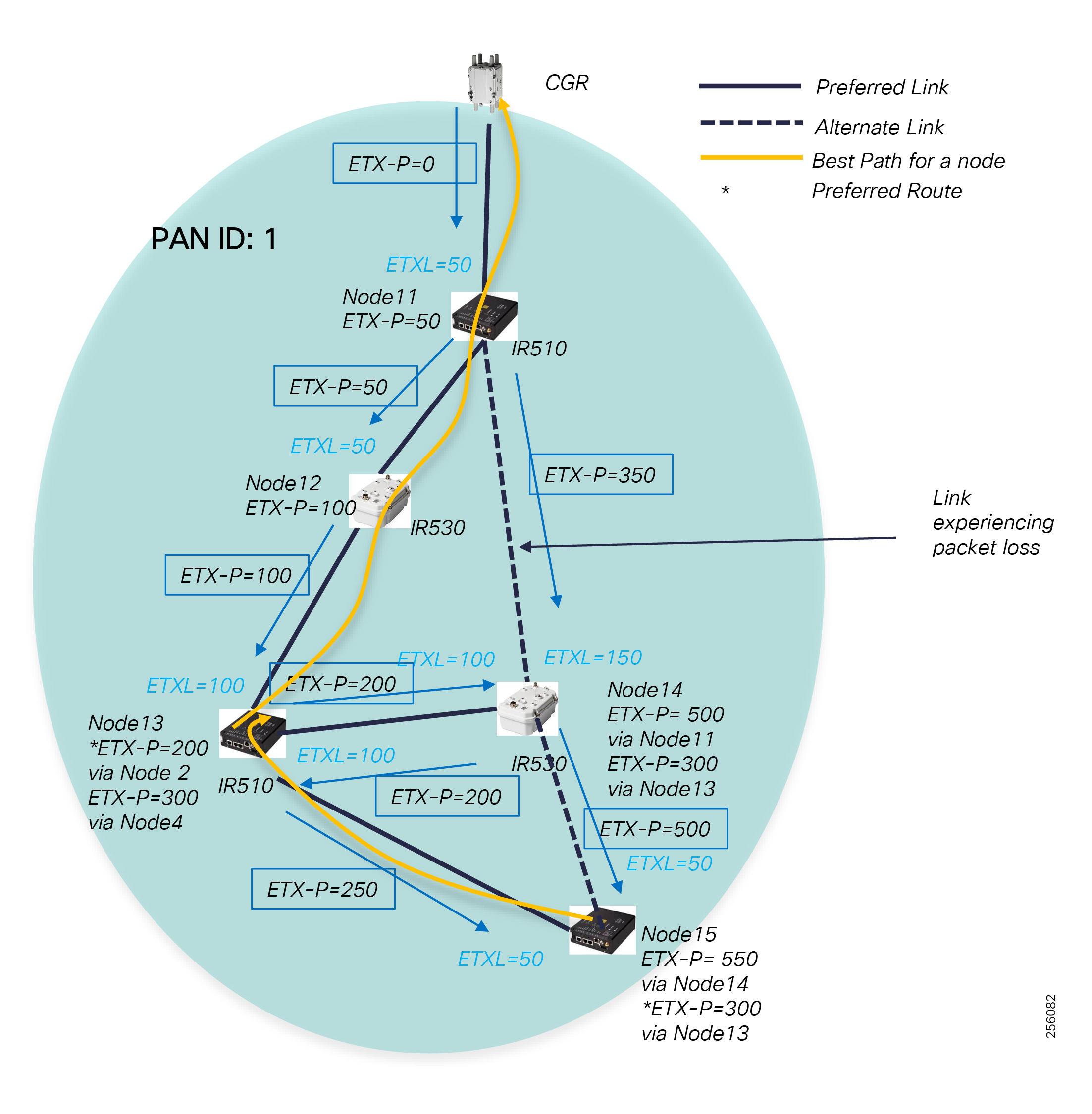

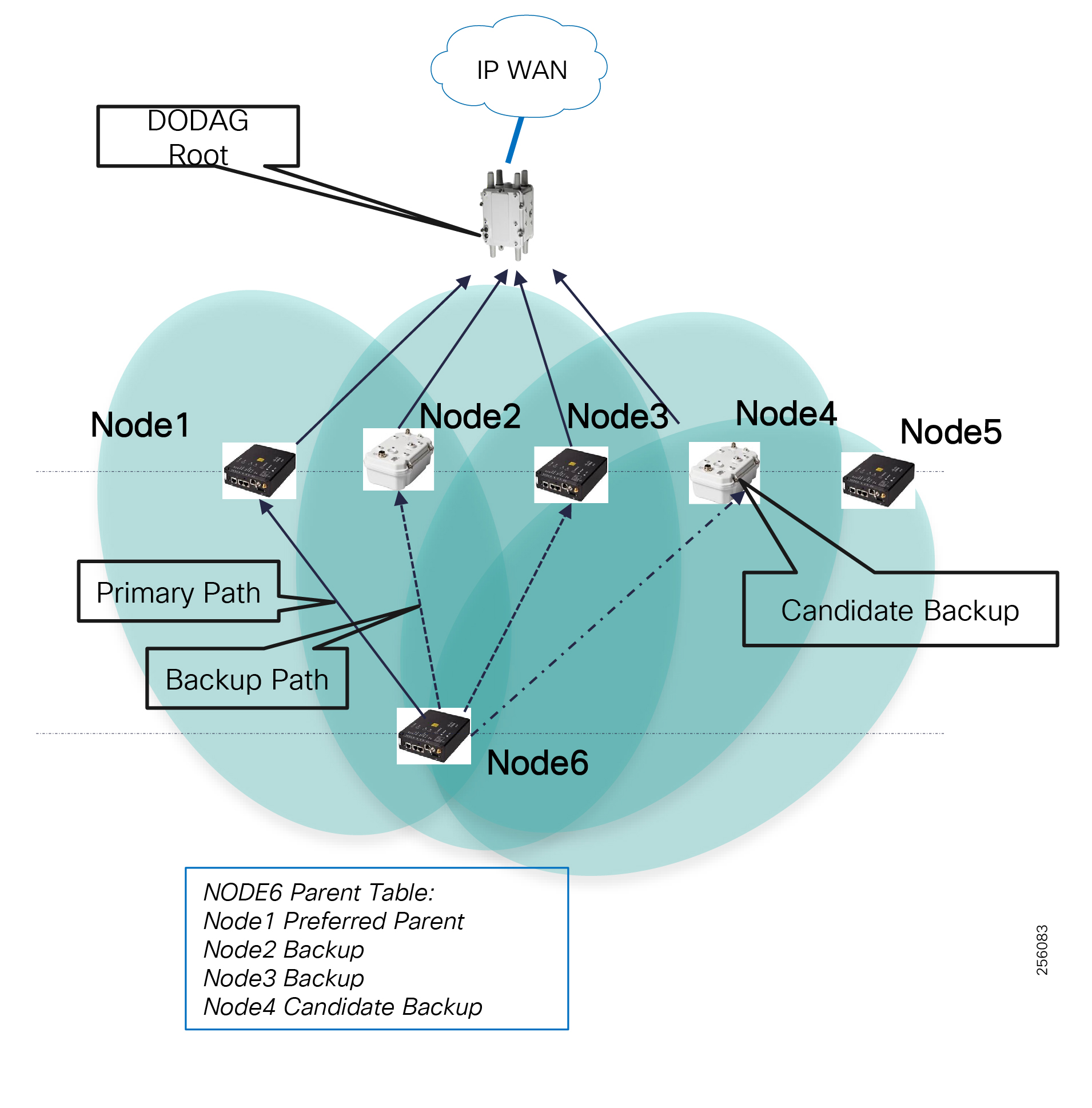

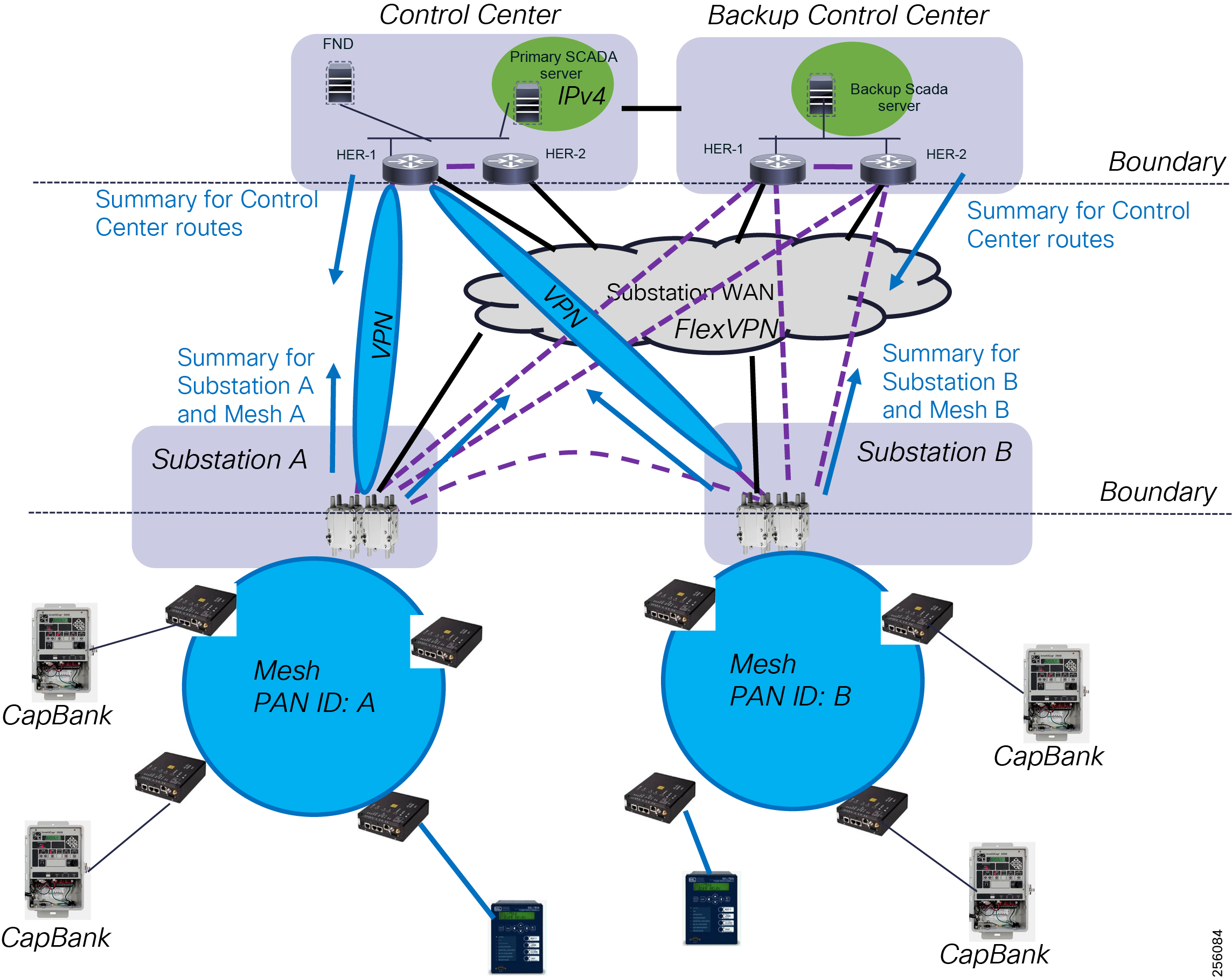

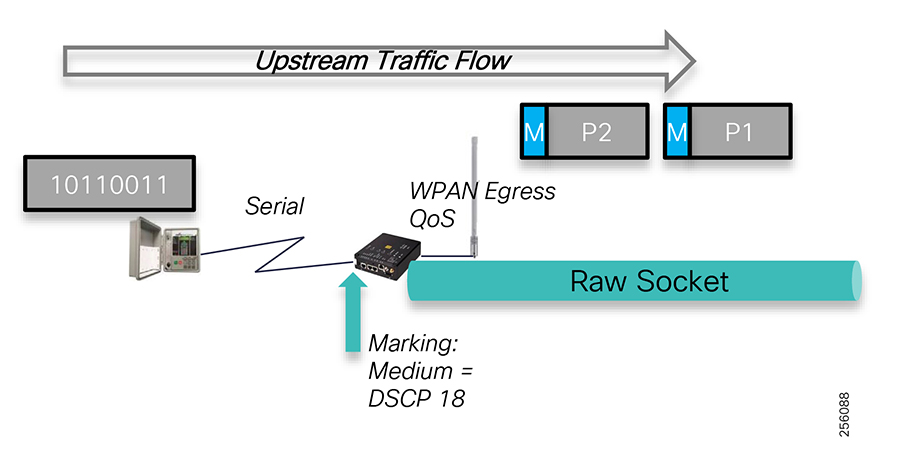

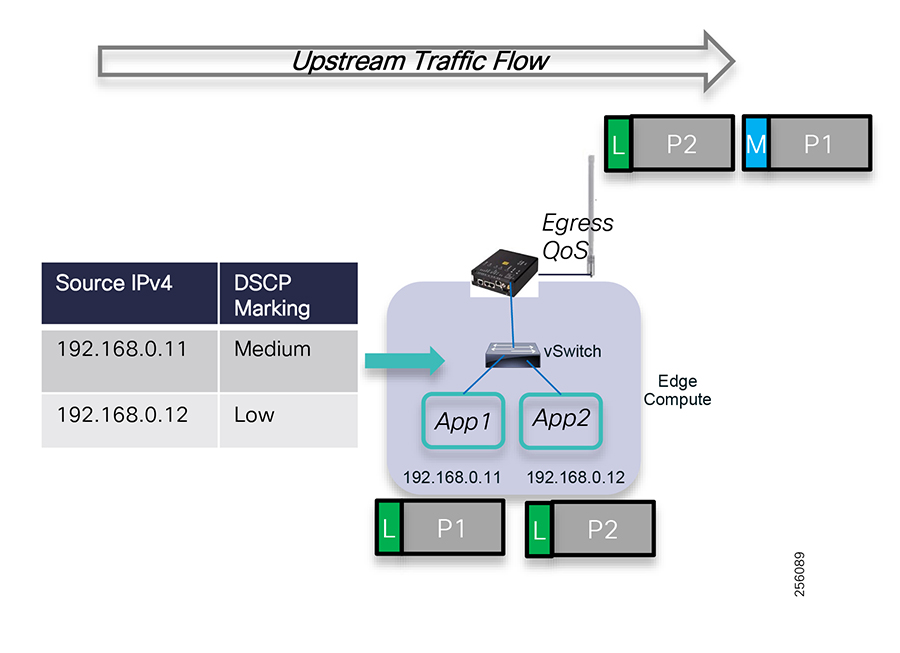

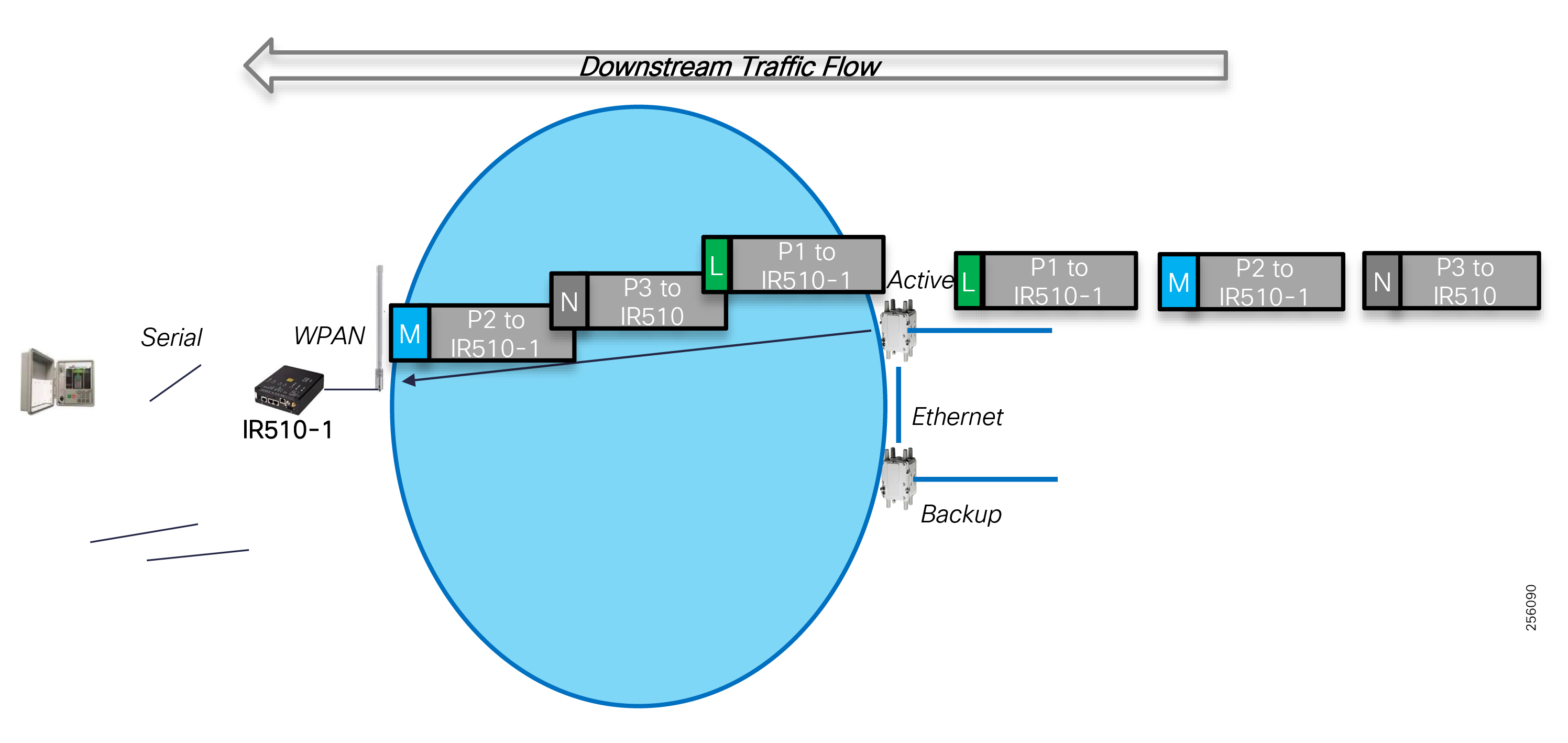

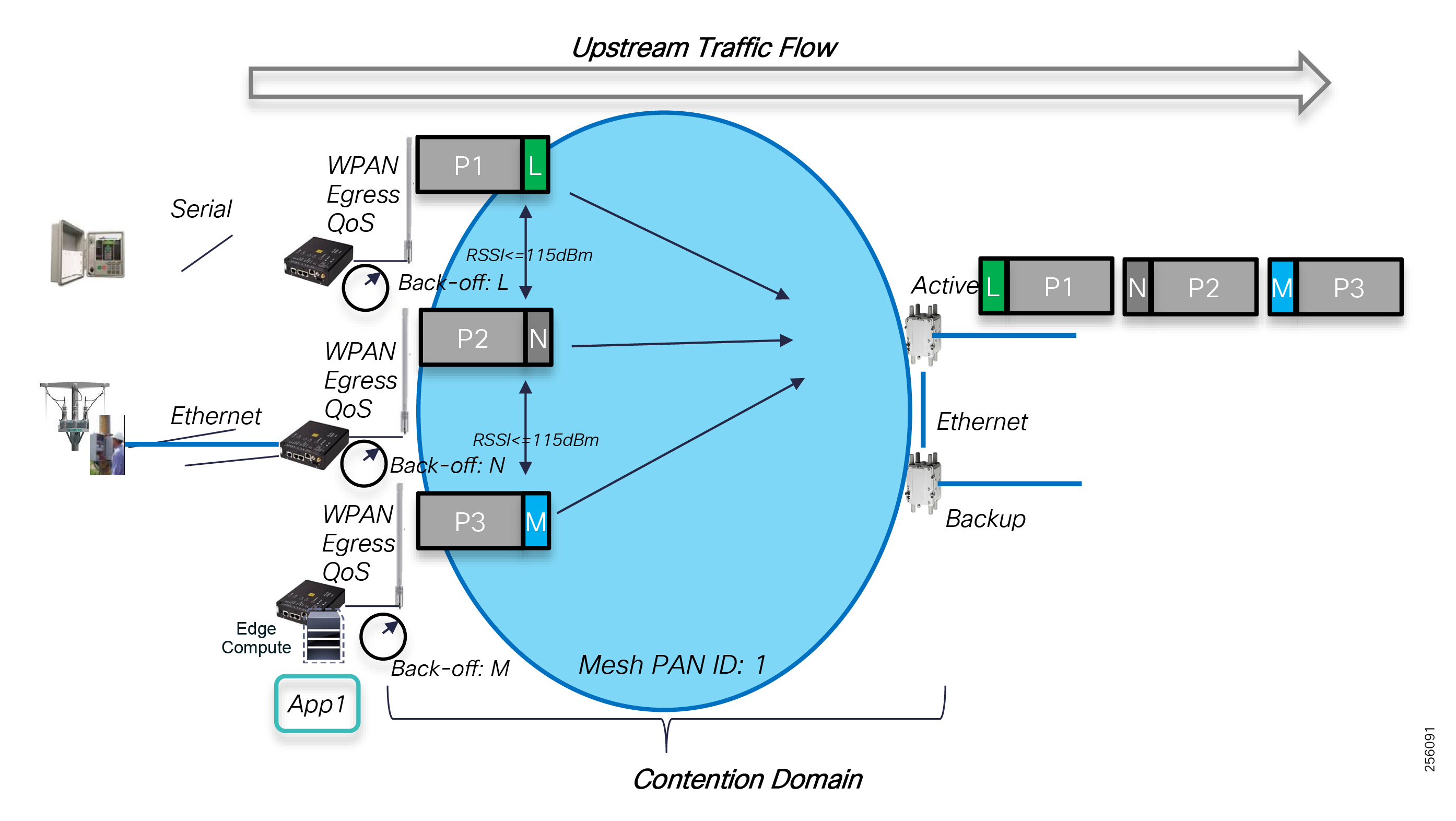

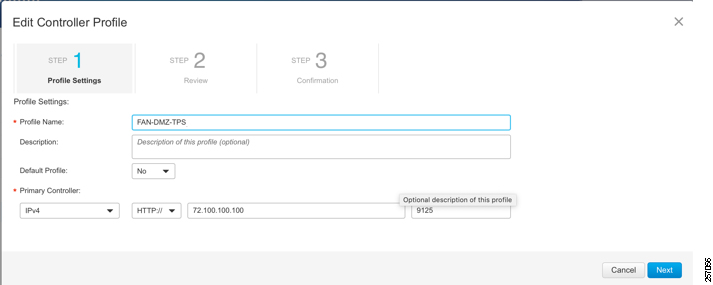

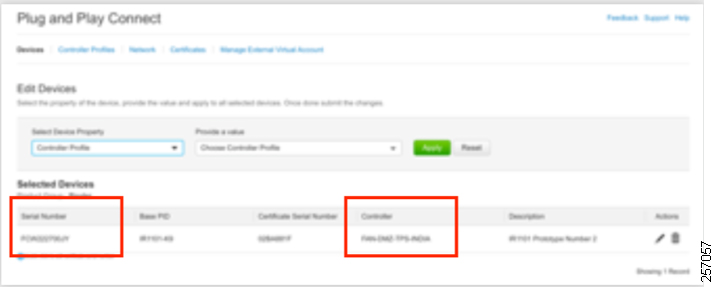

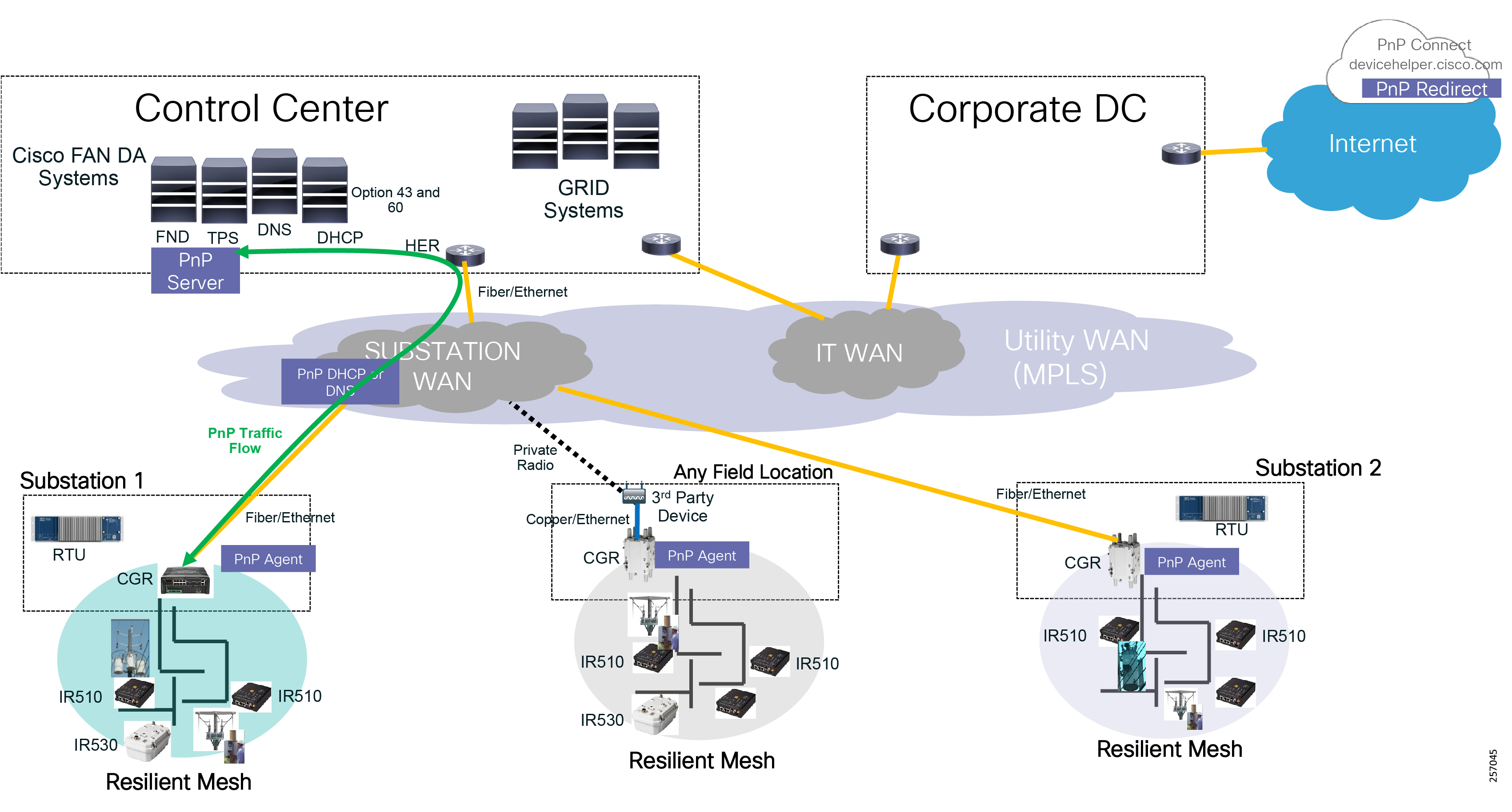

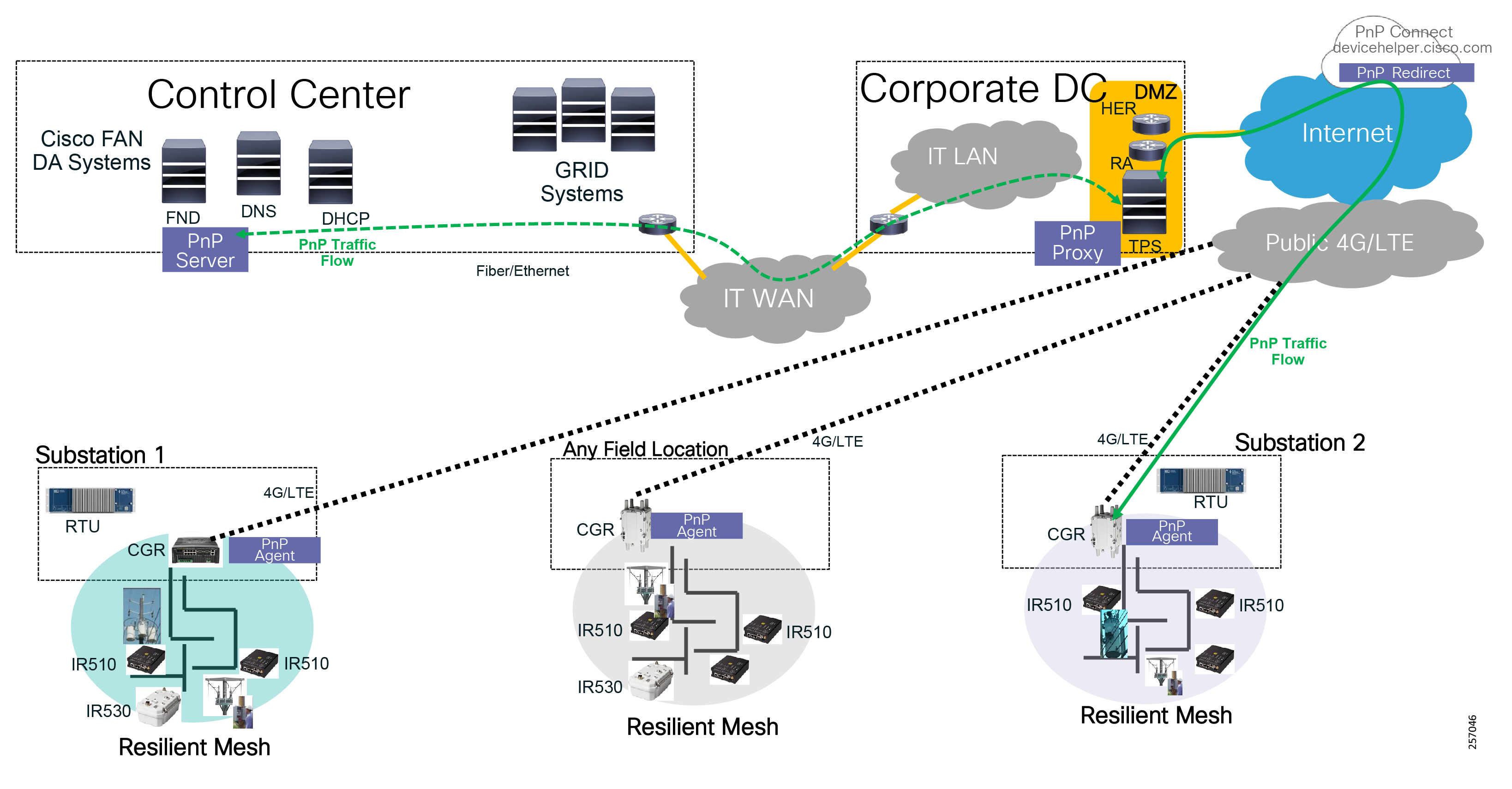

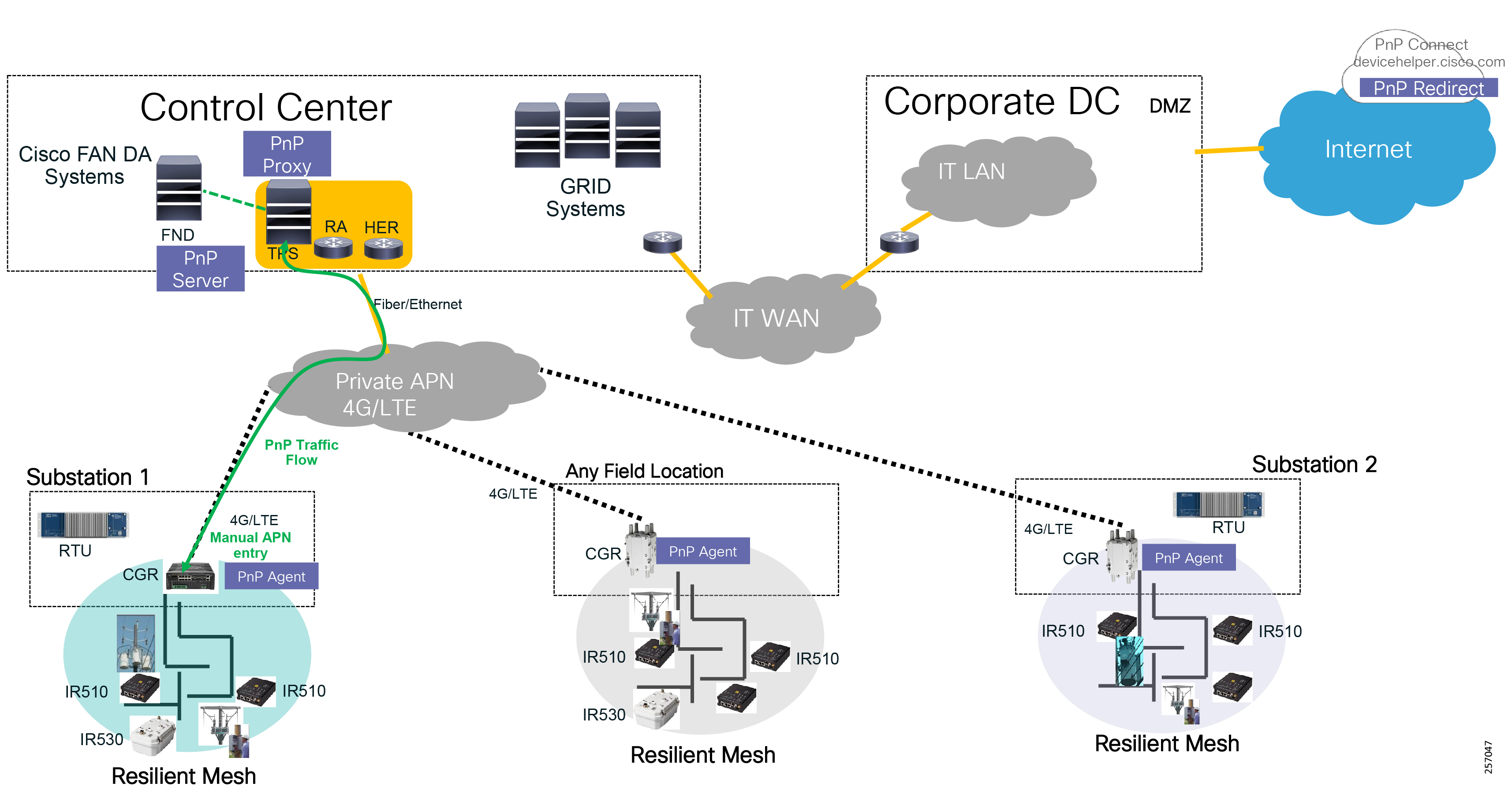

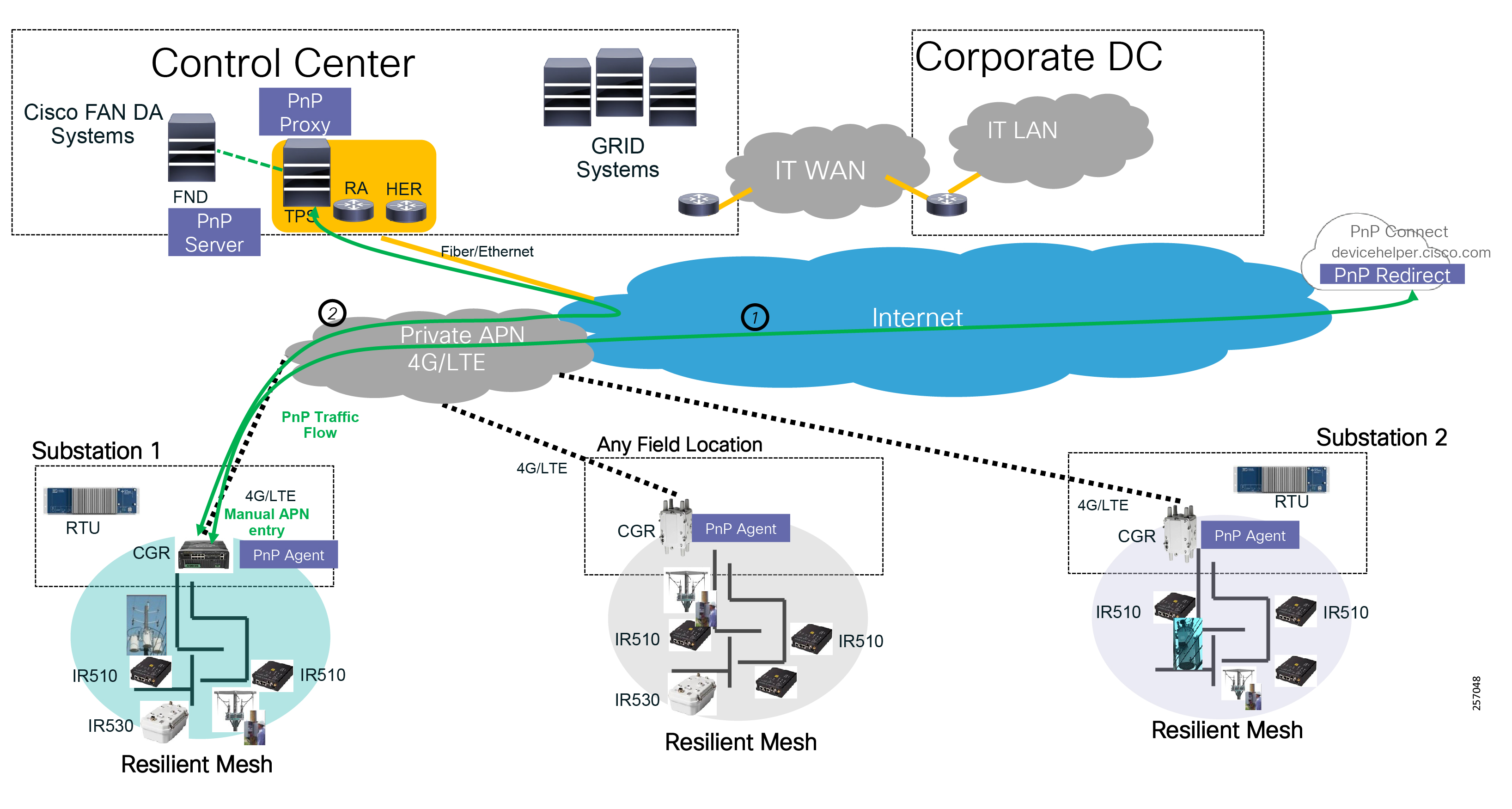

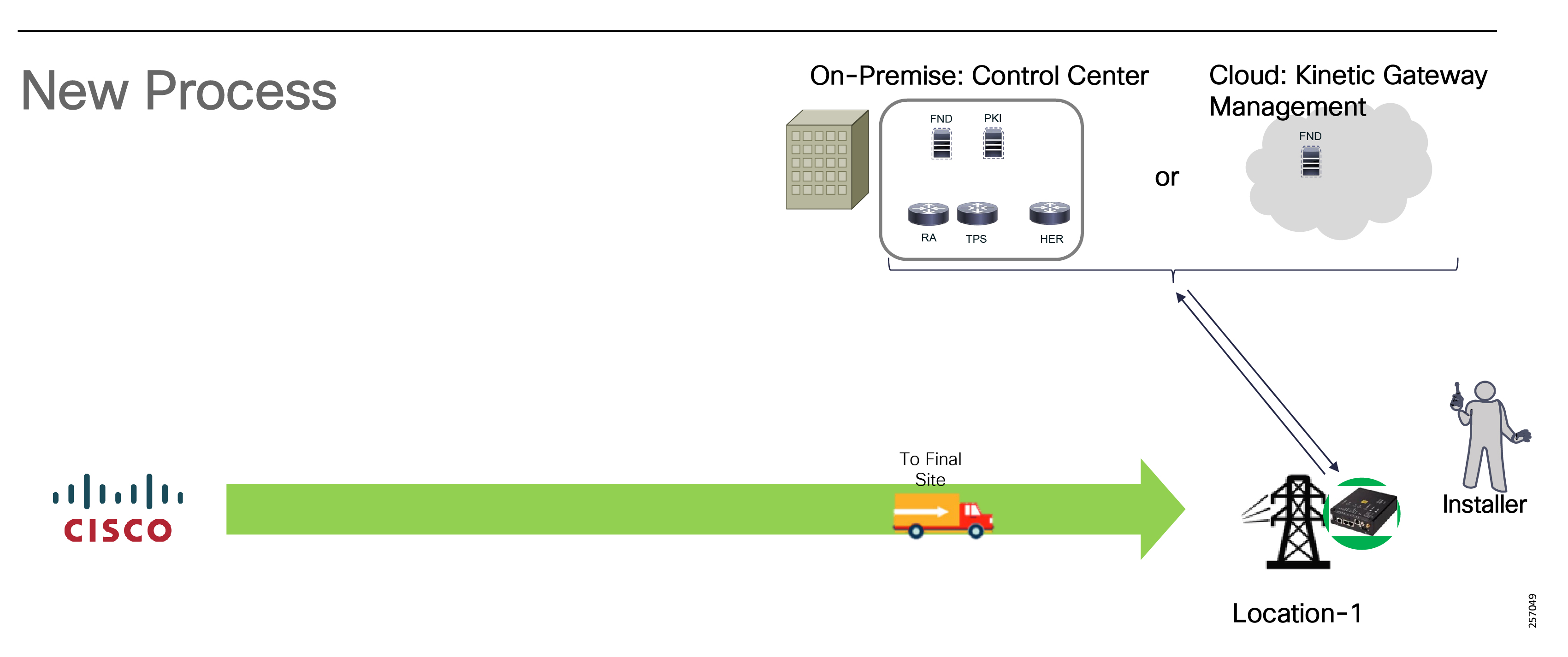

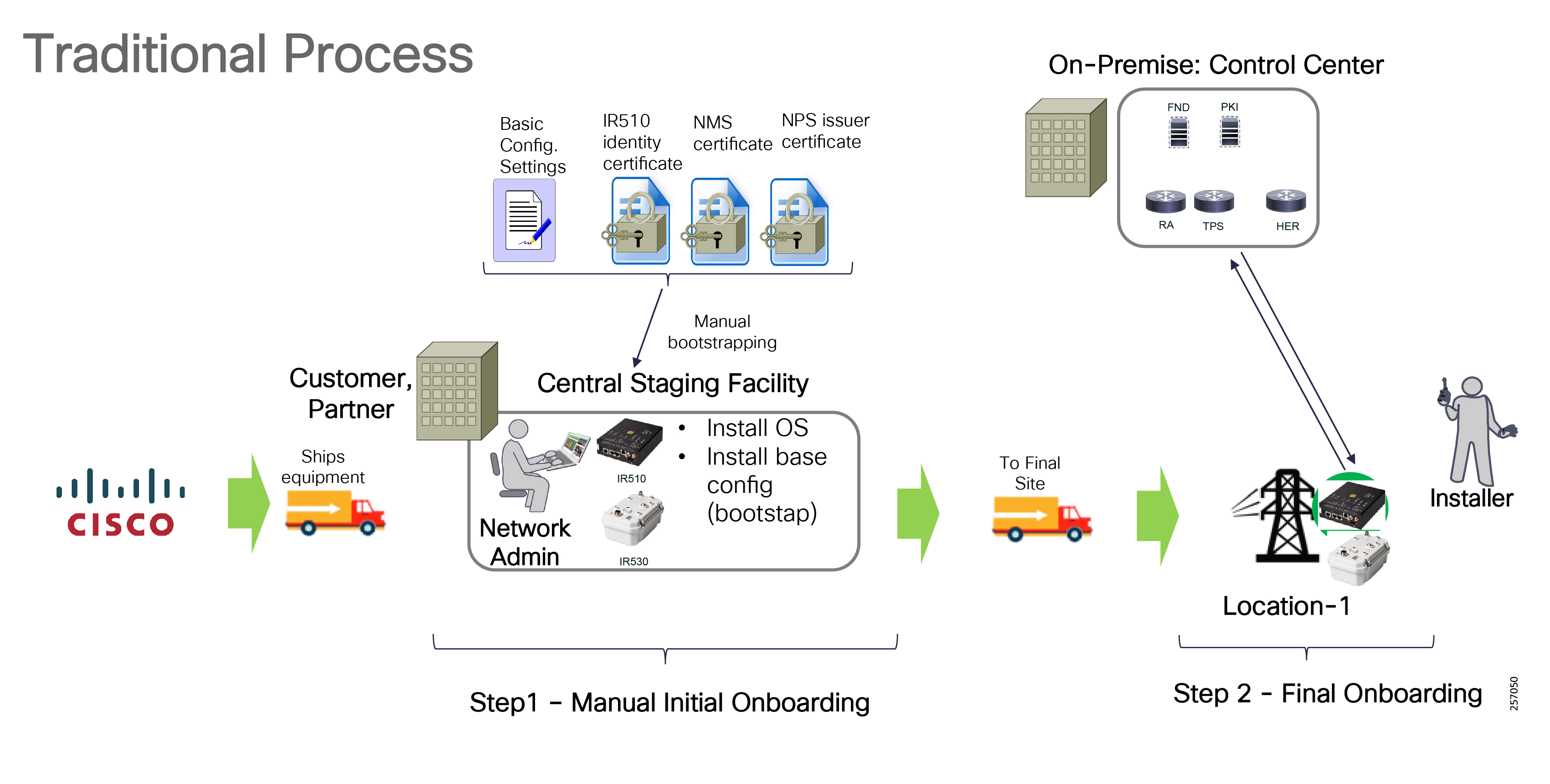

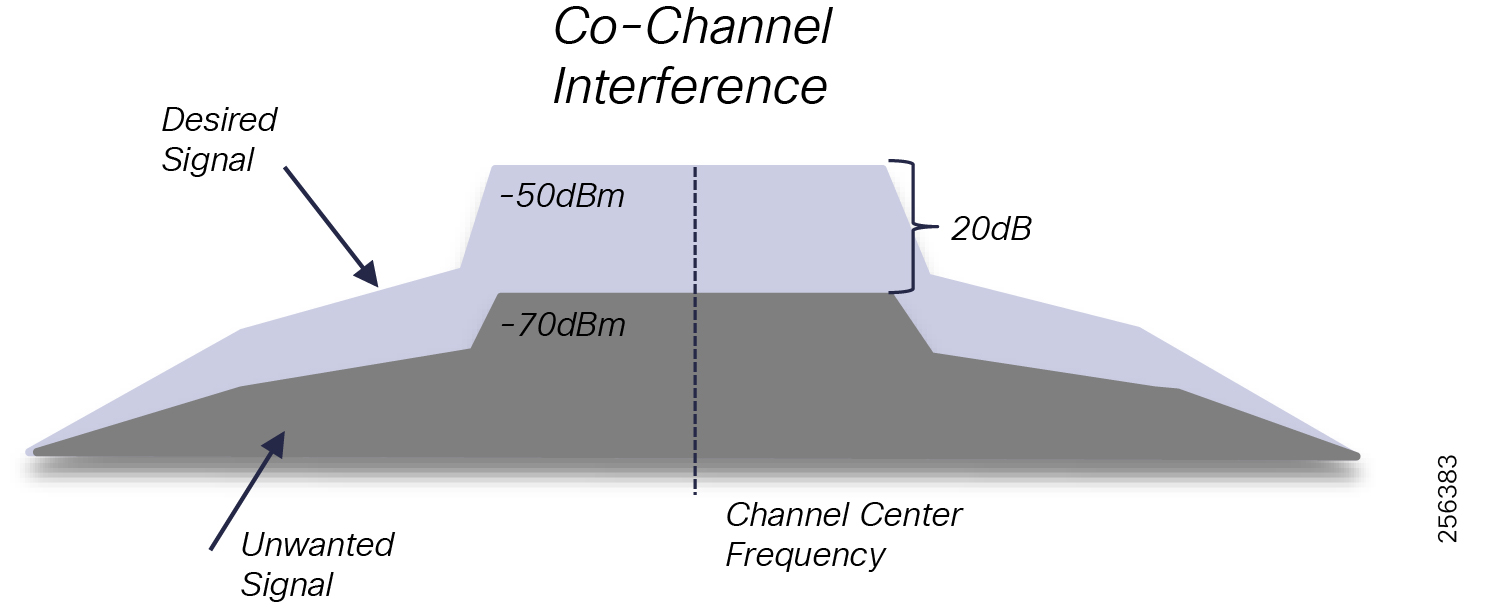

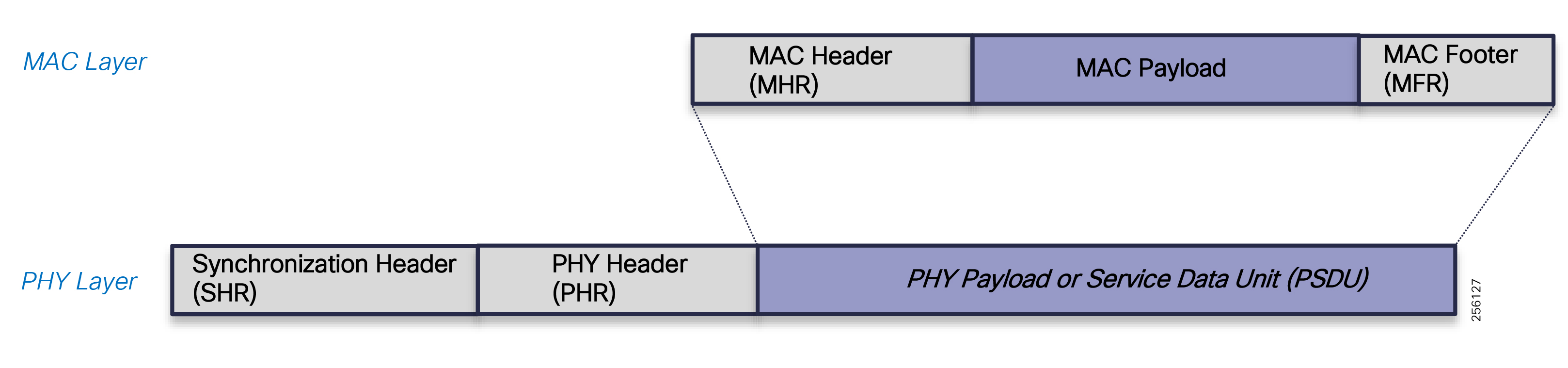

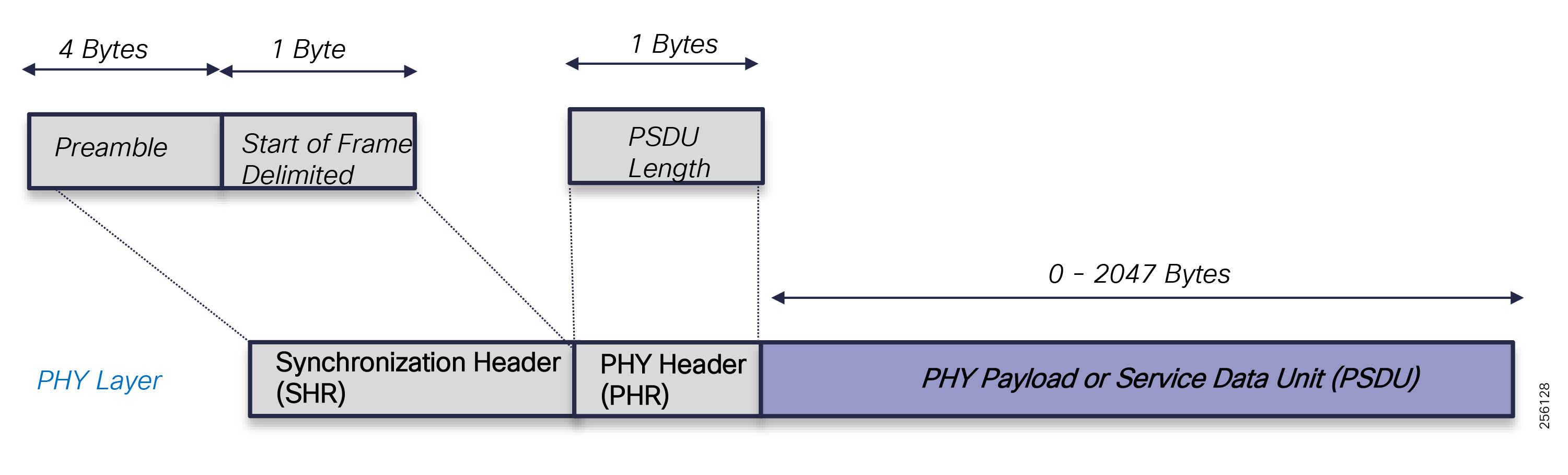

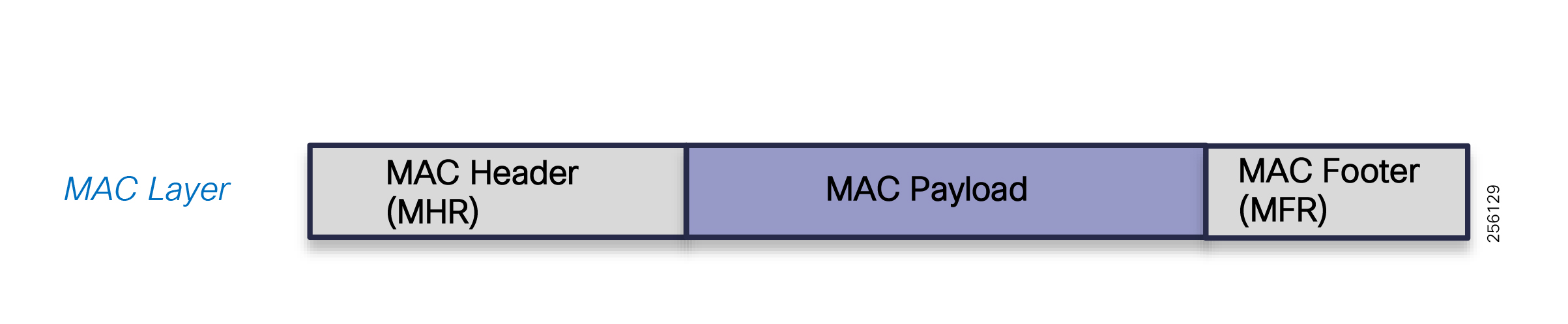

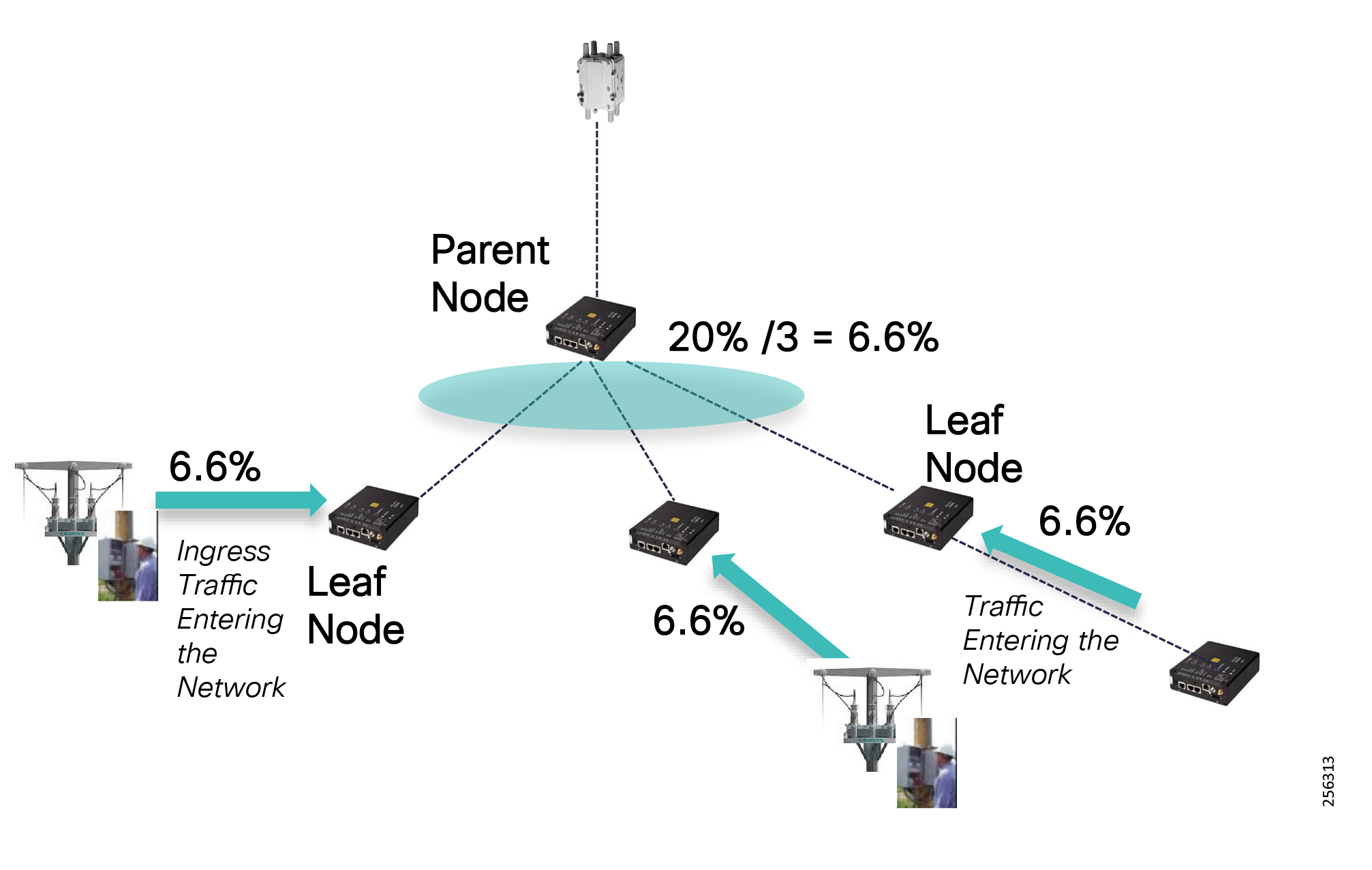

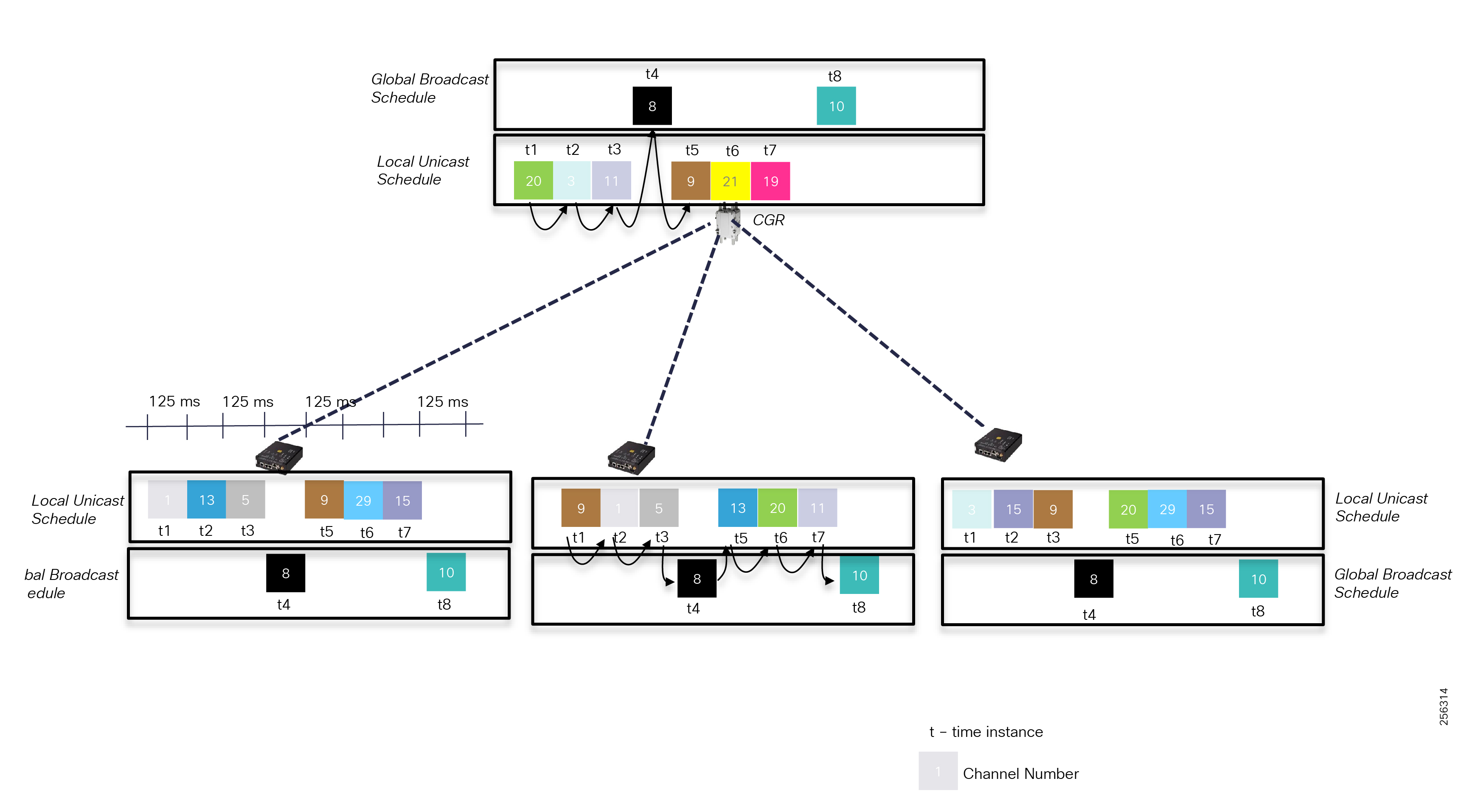

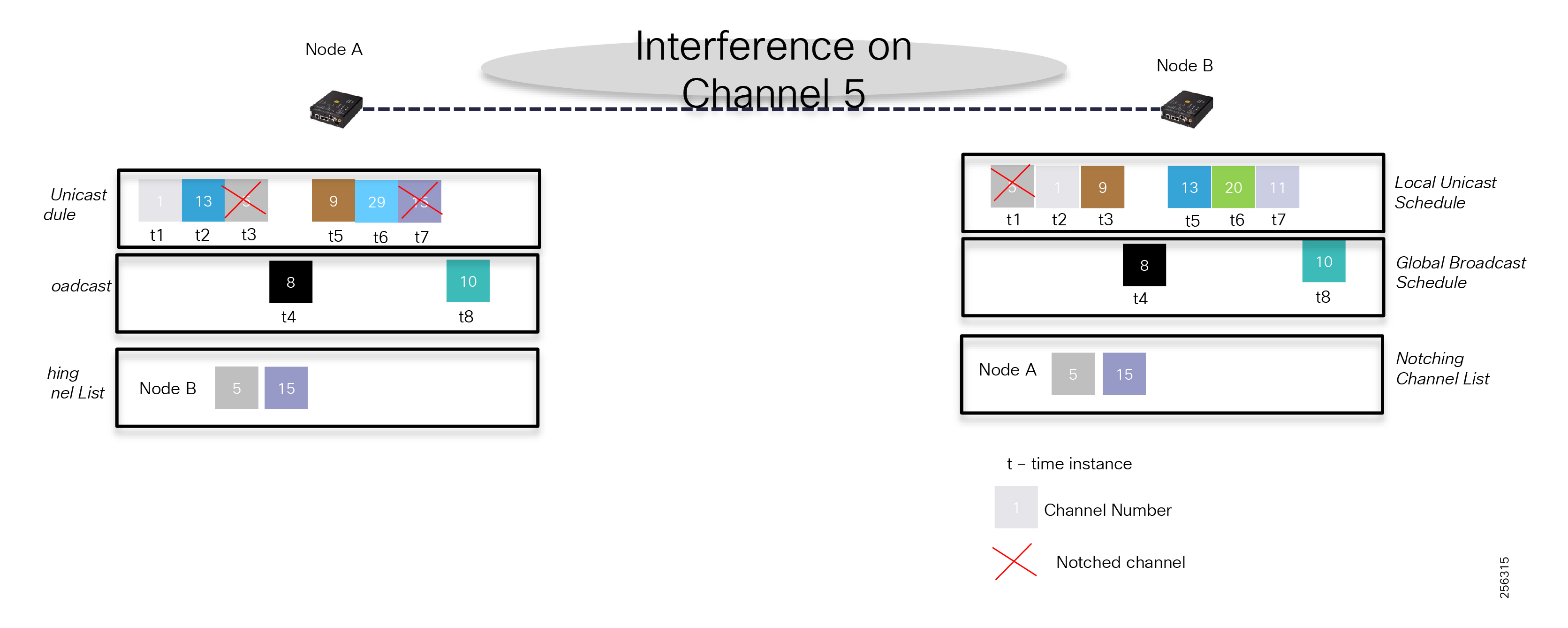

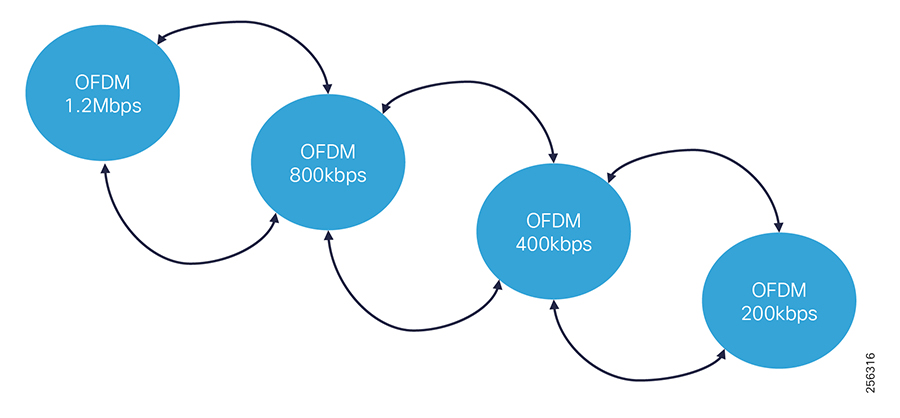

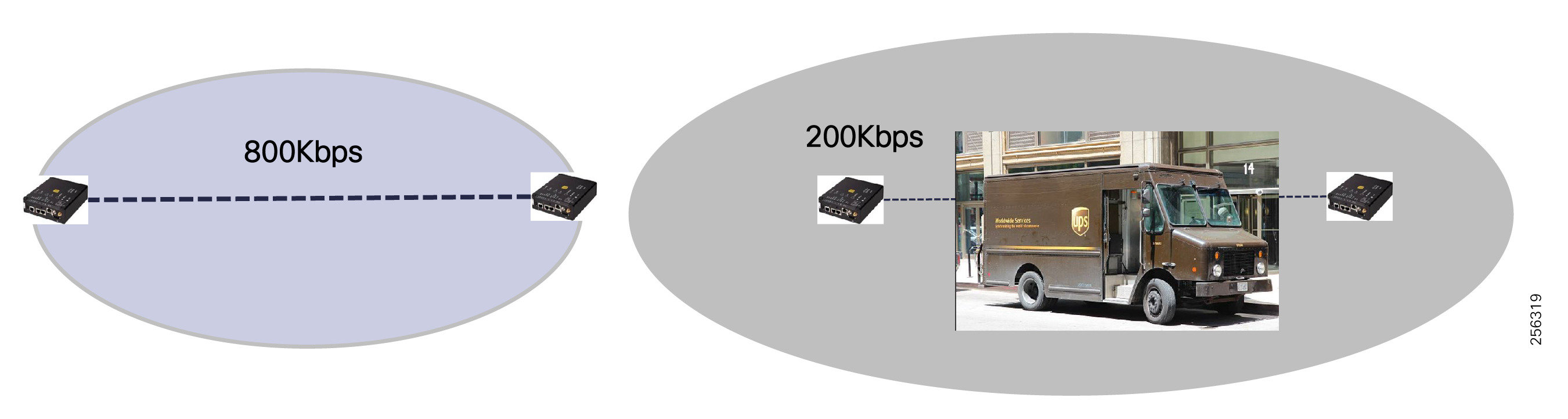

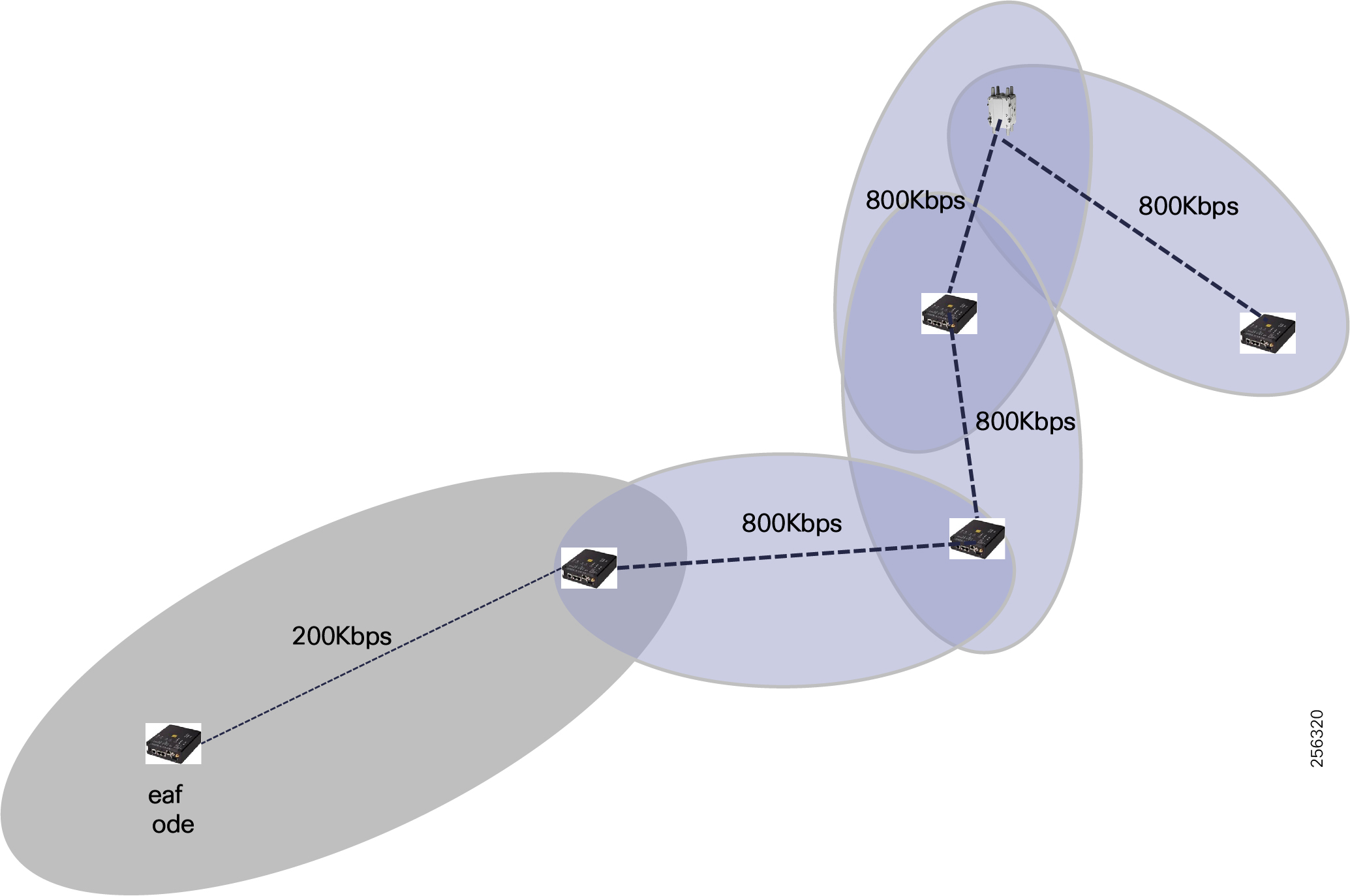

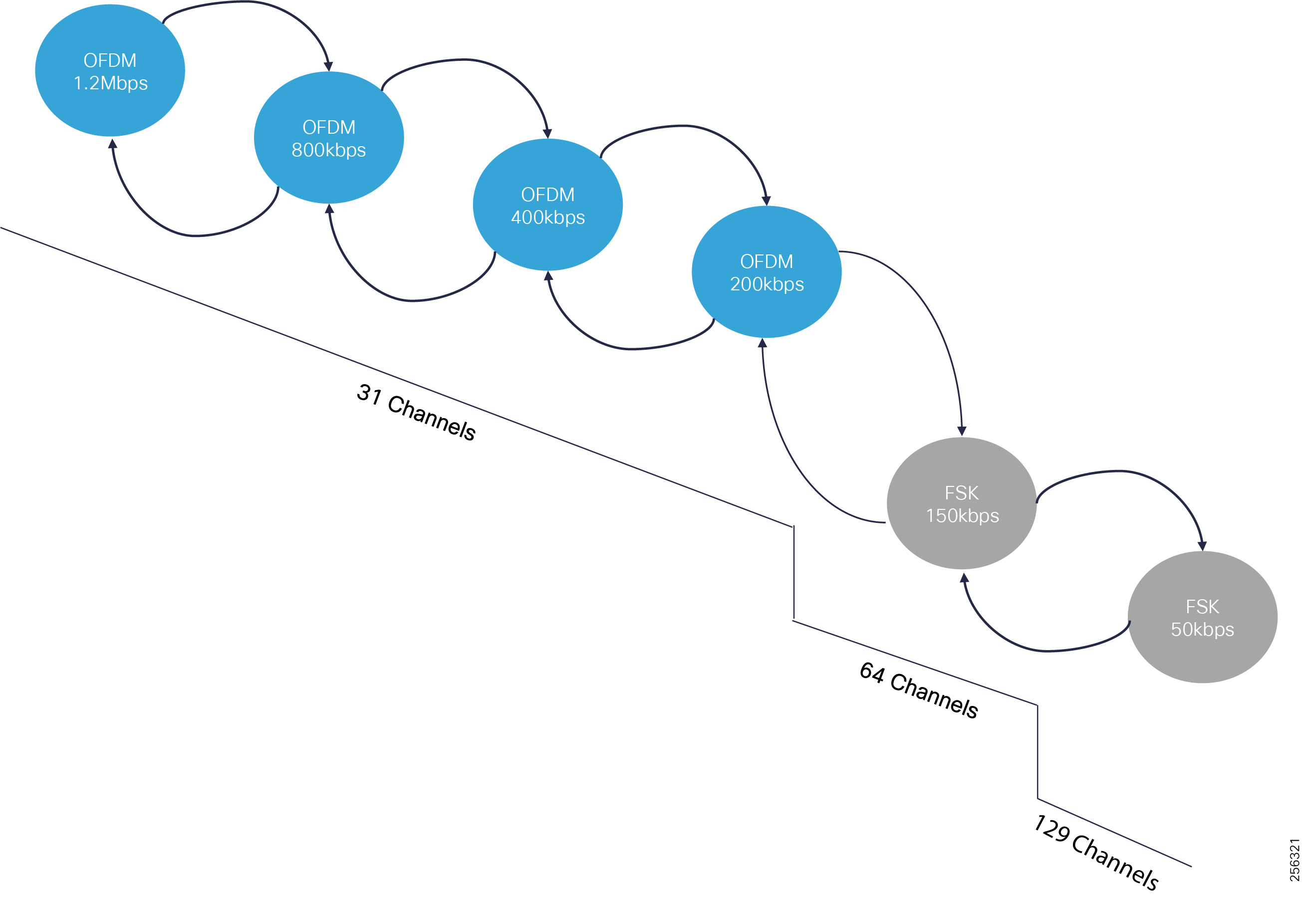

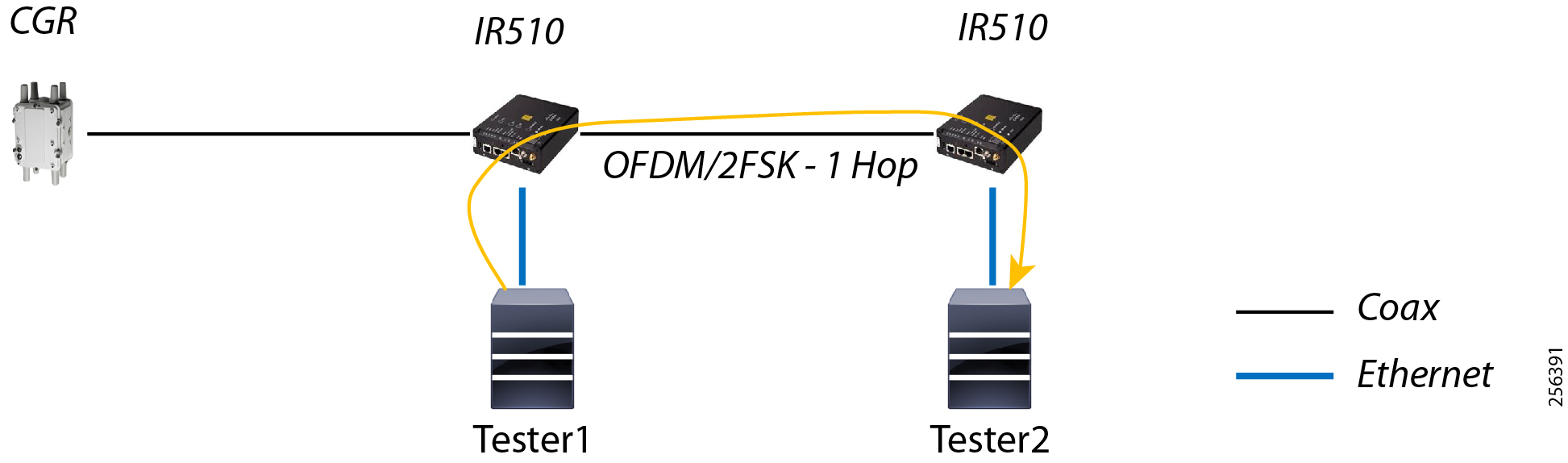

Cisco Resilient (CR) Mesh - Design Considerations for Centralized FLISR use case