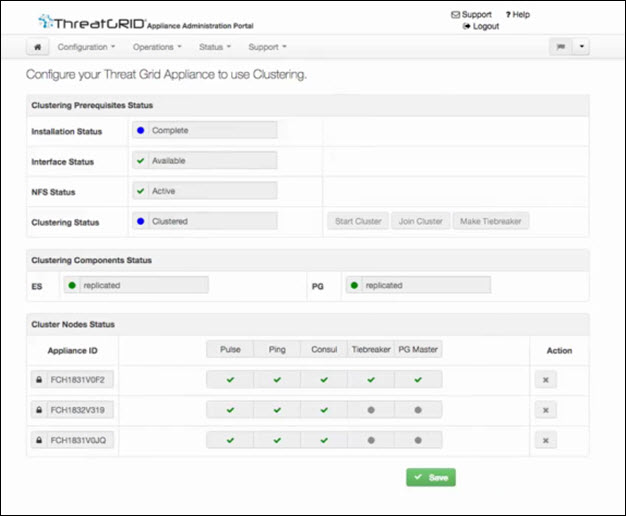

About Clustering Threat Grid Appliances

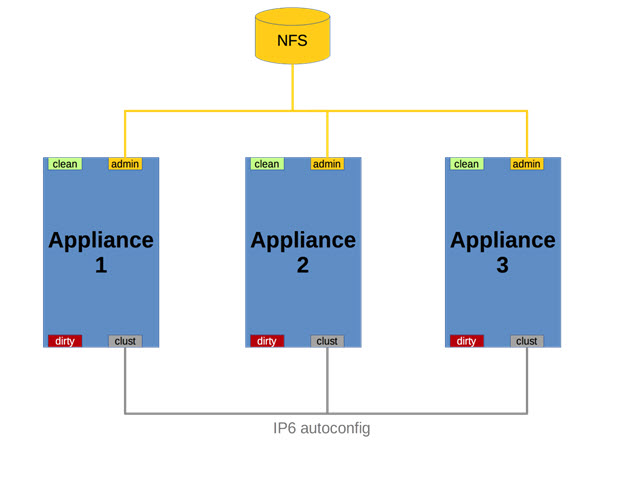

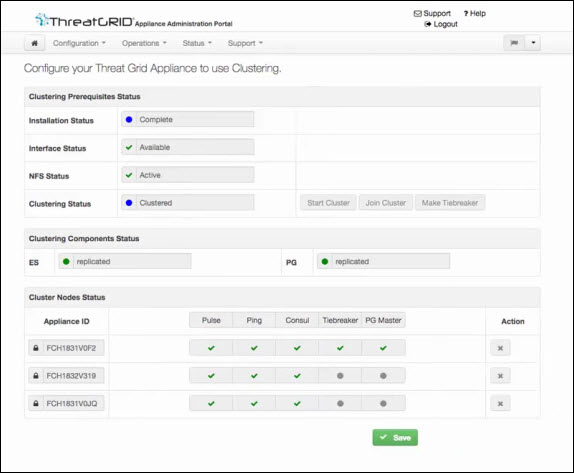

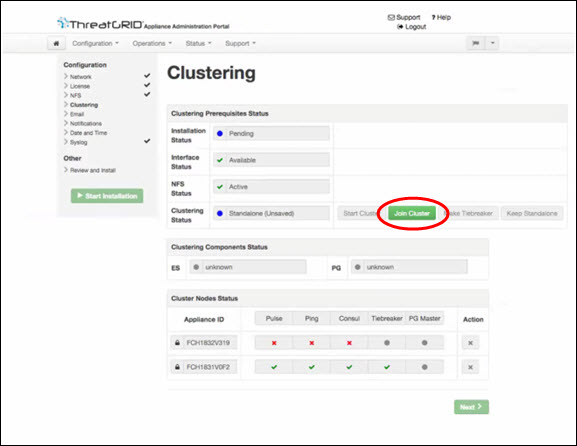

The ability to cluster multiple Threat Grid Appliances is available in v2.4.2 and later. Each Threat Grid Appliance in a cluster saves data in the shared file system, and has the same data as the other nodes in the cluster.

The main goal of clustering is to increase the capacity of a single system by joining several Threat Grid Appliances together into a cluster (consisting of 2 to 7 nodes). Clustering also helps support recovery from failure of one or more machines in the cluster, depending on the cluster size.

If you have questions about installing or reconfiguring clusters, contact Cisco Support for assistance to avoid possible destruction of data.

Clustering Features

Clustering Threat Grid Appliances offers the following features:

- Shared Data - Every Threat Grid Appliance in a cluster can be used as if it were a standalone appliance; each one accesses and presents the same data.

- Sample Submissions Processing - Submitted samples are processed on any one of the cluster members, with any other member able to see the analysis results.

- Rate Limits - The submission rate limits of each member are added up to become the cluster's limit.

- Cluster Size - The preferred cluster sizes are 3, 5, or 7 members; 2-, 4- and 6-node clusters are supported, but with availability characteristics similar to a degraded cluster (a cluster in which one or more nodes are not operational) of the next size up.

- Tiebreaker - When a cluster is configured to contain an even number of nodes, the one designated as the tiebreaker gets a second vote in the event of an election to decide which node has the primary database.

  Each node in a cluster contains a database, but only the database on the primary node is actually used; the others just have to be able to take over if and when the primary node goes down. Having a tiebreaker can prevent the cluster from being down when exactly half the nodes have failed, but only when the tiebreaker is not among the failed nodes.

  Odd-numbered clusters won't have a tied vote. In an odd-numbered cluster, the tiebreaker role only becomes relevant if a node (not the tiebreaker) is dropped from the cluster; it then becomes even-numbered.

  Note

  This feature is fully tested only for clusters with two nodes.
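The rate-limit, cluster-size, and tiebreaker rules above can be illustrated with a short Python sketch. This is a simplified model for reasoning about the rules, not the appliance's actual election algorithm: the function names are hypothetical, the per-member limits are made-up numbers, and the election is assumed to be a plain majority vote in which the tiebreaker holds one extra vote when the node count is even.

```python
from typing import Sequence

PREFERRED_SIZES = {3, 5, 7}    # preferred cluster sizes named in the text
SUPPORTED_SIZES = range(2, 8)  # a cluster consists of 2 to 7 nodes


def cluster_rate_limit(member_limits: Sequence[int]) -> int:
    """The cluster's limit is the sum of each member's submission rate limit."""
    return sum(member_limits)


def size_category(node_count: int) -> str:
    """Classify a node count as preferred, supported, or unsupported."""
    if node_count not in SUPPORTED_SIZES:
        return "unsupported"
    return "preferred" if node_count in PREFERRED_SIZES else "supported"


def primary_can_be_elected(configured: int, alive: int, tiebreaker_alive: bool) -> bool:
    """Assumed majority-vote model: in an even-sized cluster the tiebreaker
    carries a second vote, so exactly half the nodes can still win an
    election, but only if the tiebreaker is among the surviving nodes."""
    if not 0 <= alive <= configured:
        raise ValueError("alive must be between 0 and configured")
    if tiebreaker_alive and alive == 0:
        raise ValueError("tiebreaker cannot be alive when no node is alive")
    total_votes = configured
    alive_votes = alive
    if configured % 2 == 0:
        total_votes += 1      # the tiebreaker's extra vote exists in the total
        if tiebreaker_alive:
            alive_votes += 1  # and counts only if the tiebreaker survived
    return alive_votes * 2 > total_votes
```

For example, under this model `primary_can_be_elected(2, 1, True)` is `True` and `primary_can_be_elected(2, 1, False)` is `False`, which matches the statement that a tiebreaker helps only when it is not among the failed nodes. With hypothetical member limits of 50, 50, and 100, `cluster_rate_limit([50, 50, 100])` returns `200`.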

Clustering Limitations

Clustering Threat Grid Appliances has the following limitations:

-

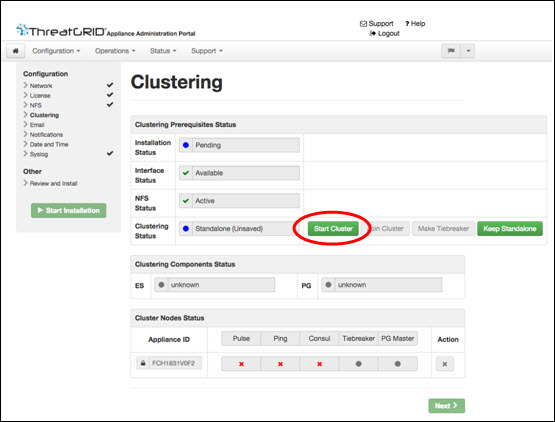

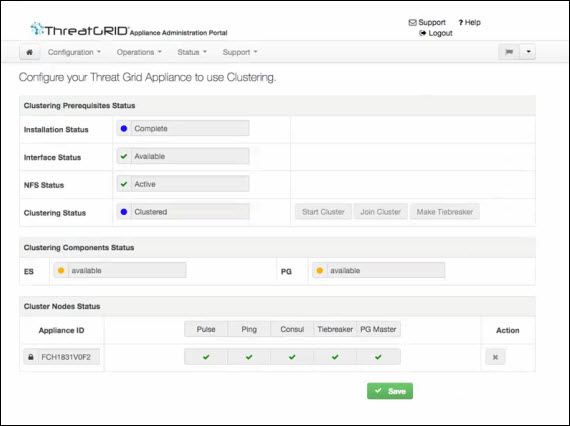

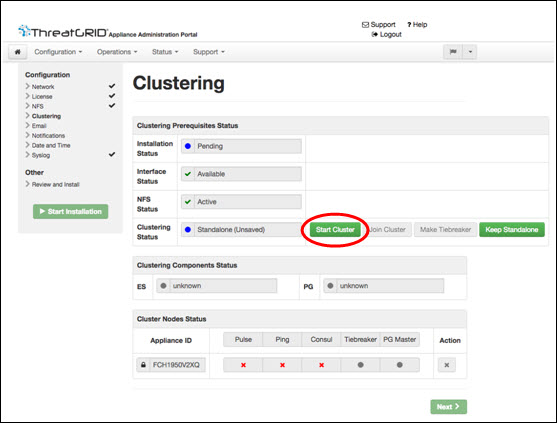

When building a cluster of existing standalone Threat Grid Appliances, only the first node (the initial node) can retain its data. The other nodes must be manually reset because merging existing data into a cluster is not allowed.

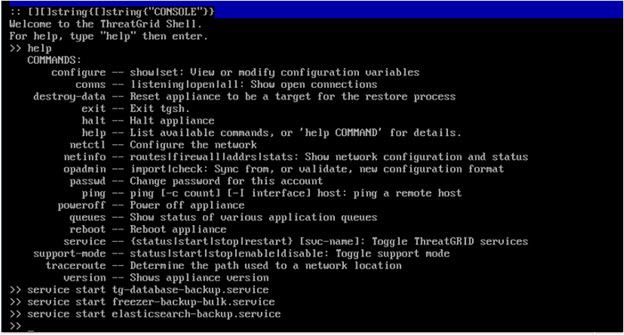

Remove existing data with the destroy-data command, as documented in Reset Threat Grid Appliance as Backup Restore Target

Important

Do not use the Wipe Appliance feature as it will render the appliance inoperable until it's returned to Cisco for reimaging.

- Adding or removing nodes can result in brief outages, depending on cluster size and the role of the member nodes.

- Clustering on the M3 server is not supported. Contact Threat Grid Support if you have any questions.

Clustering Requirements

The following requirements must be met when clustering Threat Grid Appliances:

- Version - All Threat Grid Appliances must run the same version for the cluster to be in a supported configuration; this should always be the latest available version.

-

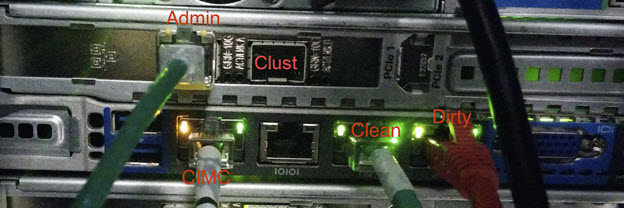

Clust Interface - Each Threat Grid Appliance requires a direct interconnect to the other Threat Grid Appliances in the cluster; a SFP+ must be installed in the Clust interface slot on each Threat Grid Appliance in the cluster (not relevant in a standalone configuration).

Direct interconnect means that all Threat Grid Appliances must be on the same layer-two network segment, with no routing required to reach other nodes and no significant latency or jitter. Network topologies where the nodes are not on a single physical network segment are not supported.

- Airgapped Deployments Discouraged - Due to the increased complexity of debugging, appliance clustering is strongly discouraged in airgapped deployments or other scenarios where a customer is unable or unwilling to provide L3 support access to debug.

- Data - A Threat Grid Appliance can only be joined to a cluster when it does not contain data (only the initial node can contain data). Moving an existing Threat Grid Appliance into a data-free state requires the use of the database reset process (available in v2.2.4 or later).

  Important

  Do not use the destructive Wipe Appliance process, which removes all data and renders the appliance inoperable until it's returned to Cisco for reimaging.

- SSL Certificates - If you are installing SSL certificates signed by a custom CA on one cluster node, then the certificates for all of the other nodes should be signed by the same CA.
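As a planning aid, the version, data, and certificate requirements above can be expressed as a small pre-flight check over a hand-maintained inventory. The sketch below is illustrative only: the `Node` record, its field names, and the `preflight` function are hypothetical and do not correspond to any Threat Grid Appliance API, and the values would have to be collected manually from each appliance. It does not cover the Clust interface or network requirements.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical inventory record, filled in by hand for each appliance."""
    name: str
    version: str
    has_data: bool
    is_initial: bool = False
    custom_ca: Optional[str] = None  # identifier of the signing CA, None if no custom CA


def preflight(nodes: List[Node]) -> List[str]:
    """Return a list of requirement violations; an empty list means none were found."""
    problems = []

    if not 2 <= len(nodes) <= 7:
        problems.append(f"cluster size {len(nodes)} is outside the 2 to 7 node range")

    # All appliances must run the same version.
    versions = sorted({n.version for n in nodes})
    if len(versions) > 1:
        problems.append("version mismatch: " + ", ".join(versions))

    # Exactly one node is the initial node; only it may contain data.
    if sum(1 for n in nodes if n.is_initial) != 1:
        problems.append("exactly one node must be marked as the initial node")
    for n in nodes:
        if n.has_data and not n.is_initial:
            problems.append(f"{n.name} contains data and must be reset before joining")

    # If any node uses a custom CA, every node should use that same CA.
    if len({n.custom_ca for n in nodes}) > 1:
        problems.append("nodes do not all use certificates signed by the same CA")

    return problems
```

A check like this only catches inconsistencies in the recorded inventory; it cannot confirm that an appliance is actually in a data-free state.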

Networking and NFS Storage

Clustering Threat Grid Appliances requires the following networking and NFS storage considerations:

-

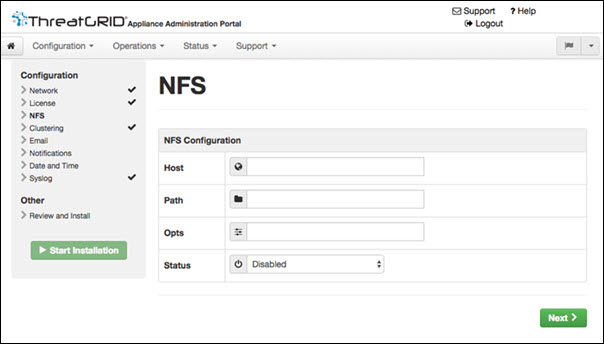

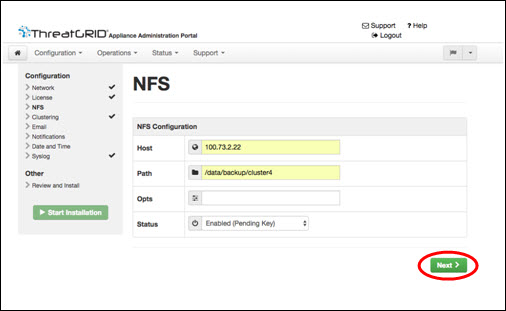

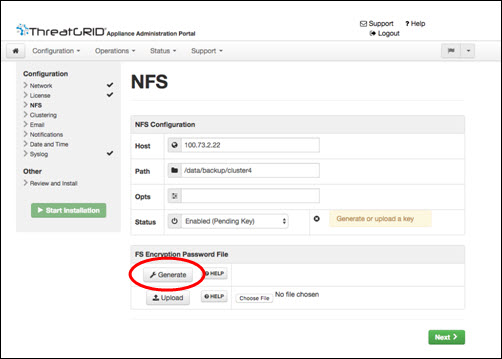

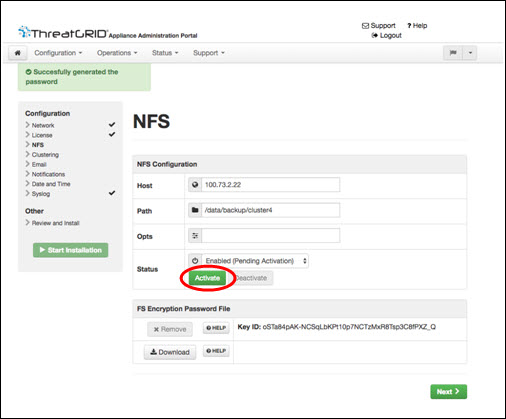

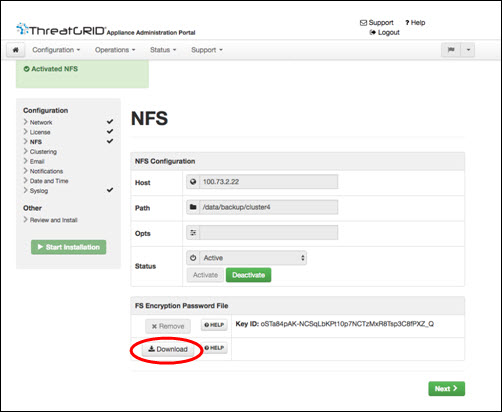

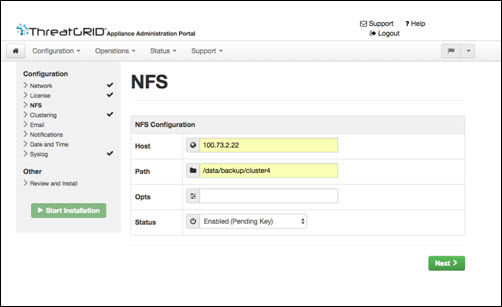

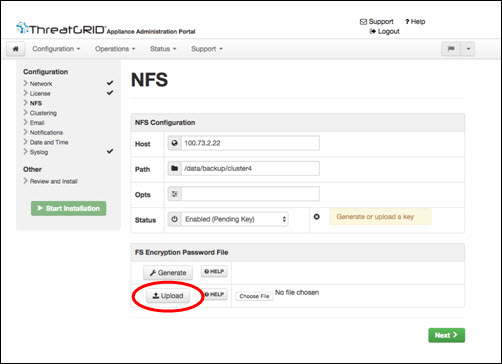

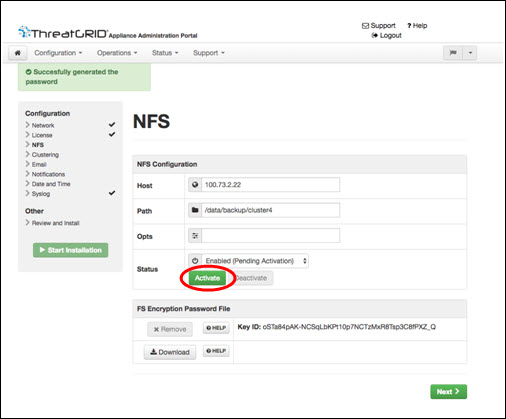

Threat Grid Appliance clusters require a NFS store to be enabled and configured. It must be available via the Admin interface and accessible from all cluster nodes.

- Each cluster must be backed by a single NFS store with a single key. While that NFS store may be initialized with data from a pre-existing Threat Grid Appliance, it must not be accessed by any system that is not a member of the cluster while the cluster is in operation.

- The NFS store is a single point of failure, and the use of redundant, highly reliable equipment for that role is essential.

- The NFS store used for clustering must keep its latency consistently low.
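One rough way to get a feel for a candidate NFS store's latency before it is put into service is to time small synchronous writes against a mounted export from a test host. The sketch below is an assumption-laden illustration, not a Cisco tool: the function name is hypothetical, the text above gives no numeric latency threshold so none is applied here, and because the store must not be accessed by non-members while the cluster is in operation, a probe like this belongs only in pre-deployment testing.

```python
import os
import statistics
import tempfile
import time


def probe_write_latency(directory: str, rounds: int = 20, size: int = 4096) -> dict:
    """Time small write+fsync operations in `directory`, in milliseconds."""
    payload = os.urandom(size)
    samples = []
    for _ in range(rounds):
        fd, path = tempfile.mkstemp(dir=directory)
        try:
            start = time.perf_counter()
            os.write(fd, payload)
            os.fsync(fd)  # force the data out to the backing store
            samples.append((time.perf_counter() - start) * 1000.0)
        finally:
            os.close(fd)
            os.unlink(path)
    return {
        "samples": len(samples),
        "median_ms": statistics.median(samples),
        "max_ms": max(samples),
    }
```

Running the probe repeatedly over a longer period and comparing the median with the maximum gives a hint of how consistent the latency is, which is the property the requirement emphasizes.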
