Introduction

This document describes how to initiate the Diagnostic Tool embedded in UCS Manager to perform memory diagnostics on servers.

Prerequisites

Requirements

Components Used

The diagnostic test is available in UCS Manager 3.1.

It is available only for servers that are integrated with UCS Manager (B-Series and C-Series).

The information in this document was created from the devices in a specific lab environment. All of the devices used in this document started with a cleared (default) configuration. If your network is live, ensure that you understand the potential impact of any command.

Procedure / Configure

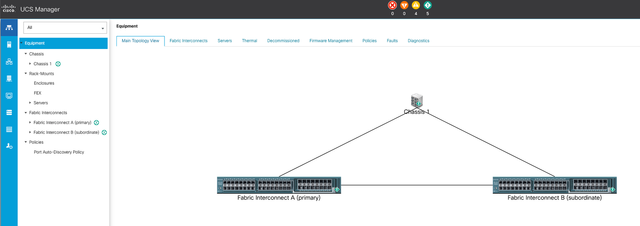

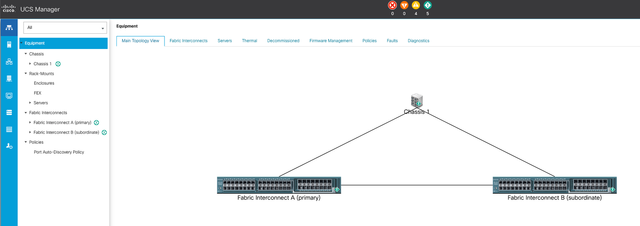

Navigate to the Servers section.

1 Main Topology


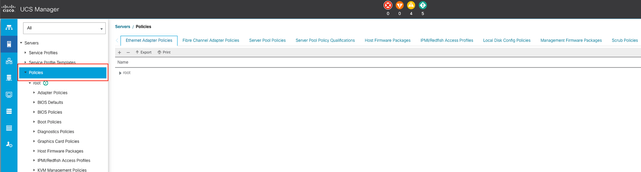

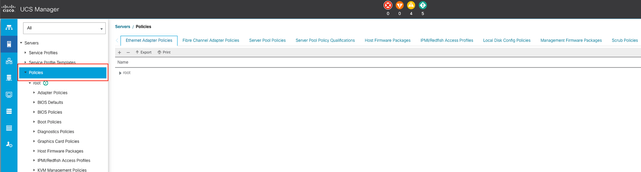

Select Policies.

2 Policies


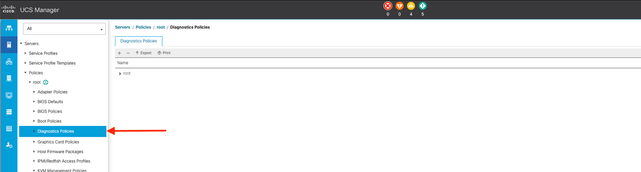

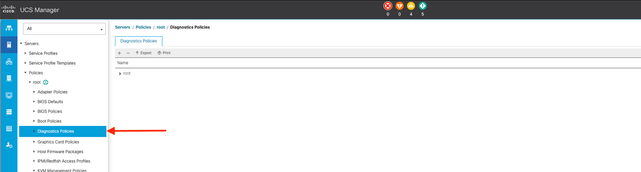

Within Policies, select and open Diagnostics Policies.

3 Diagnostics Policies


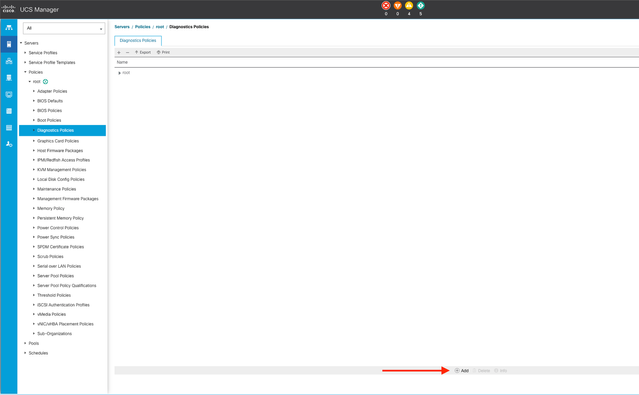

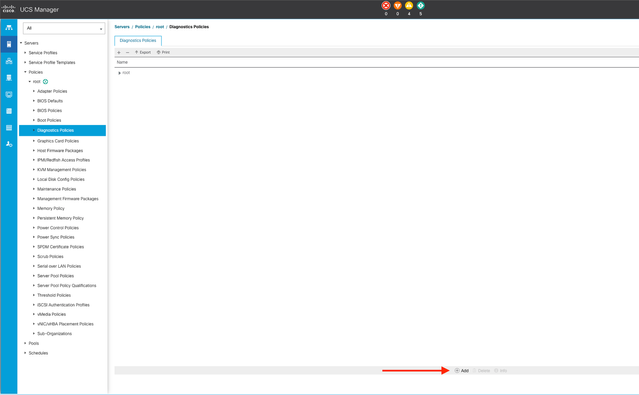

At the bottom, click Add to create a new diagnostic policy.

4 Add


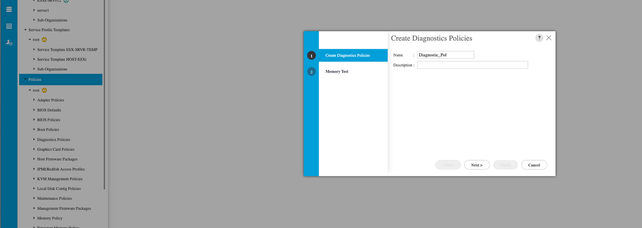

In the new window, provide a name for the diagnostic policy. The Description field is optional.

5 Create Diagnostics Policies


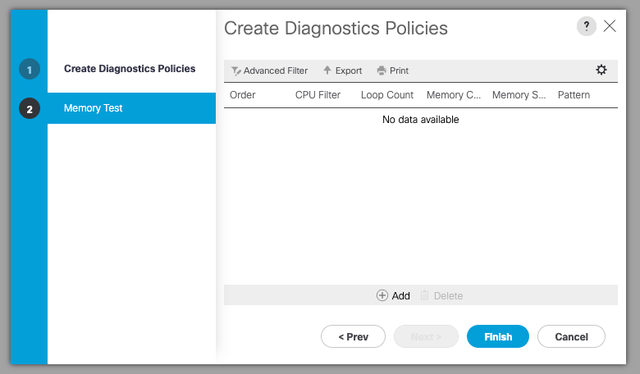

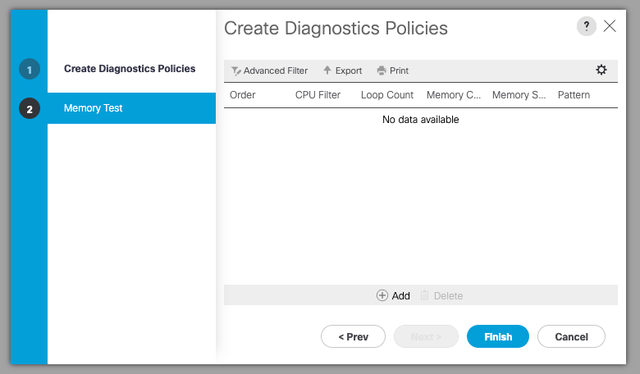

Configure the details of the memory test, then click Add at the bottom of the window.

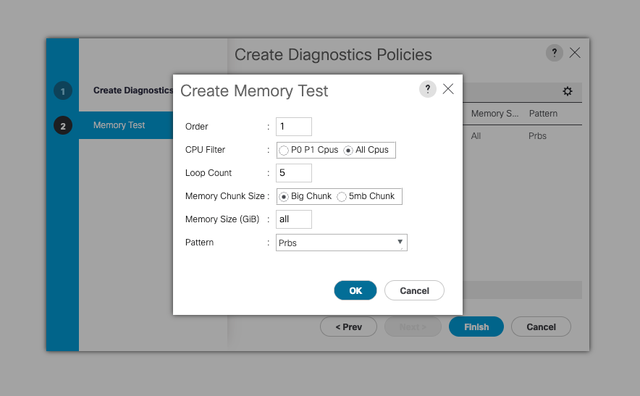

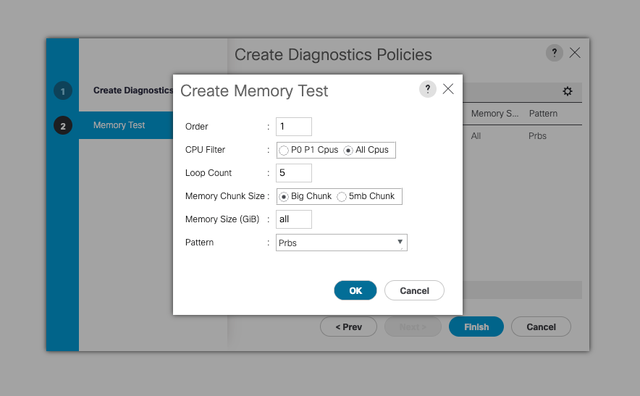

In the pop-up window, populate the fields according to your needs:

- Order: Defines the order of test execution.

- CPU Filter: Choose to configure for all CPUs or a specific CPU.

- Loop Count: Set the number of test iterations (minimum 1, maximum 1000).

- Memory Chunk Size: Set the memory chunk size to 'big chunk' or '5mb-chunk'.

- Memory Size: Specify the tested memory size.

- Pattern: Choose from butterfly, killer, PRBS, PRBS-addr, or PRBS-killer tests.
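The field constraints listed above can be checked before you open the dialog, for example when several people agree on test settings in advance. The following is a minimal sketch in Python, not part of UCS Manager or any Cisco SDK: the class name `MemoryTestPlan`, the `problems` method, and the rule that Order is a positive integer are assumptions made for illustration. The Loop Count range, chunk sizes, and pattern names are taken from the list above.

```python
from __future__ import annotations

from dataclasses import dataclass

# Option names copied from the field descriptions in this document.
PATTERNS = ("butterfly", "killer", "PRBS", "PRBS-addr", "PRBS-killer")
CHUNK_SIZES = ("big chunk", "5mb-chunk")


@dataclass
class MemoryTestPlan:
    """Hypothetical planning record that mirrors the Create Memory Test fields."""

    order: int        # position in the test execution order
    cpu_filter: str   # free text here, for example "all CPUs"
    loop_count: int   # number of test iterations
    chunk_size: str   # one of CHUNK_SIZES
    memory_size: str  # free text here; the document does not list valid values
    pattern: str      # one of PATTERNS

    def problems(self) -> list[str]:
        """Return a list of human-readable issues; empty means the plan looks valid."""
        issues = []
        if self.order < 1:
            # Assumption: the order is a positive integer.
            issues.append("order must be 1 or greater (assumed rule)")
        if not 1 <= self.loop_count <= 1000:
            issues.append("loop_count must be between 1 and 1000")
        if self.chunk_size not in CHUNK_SIZES:
            issues.append(f"chunk_size must be one of {CHUNK_SIZES}")
        if self.pattern not in PATTERNS:
            issues.append(f"pattern must be one of {PATTERNS}")
        return issues
```

A plan such as `MemoryTestPlan(1, "all CPUs", 10, "5mb-chunk", "all", "butterfly")` returns an empty list from `problems()`, while a Loop Count of 1001 returns one issue.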

7 Create Memory Test


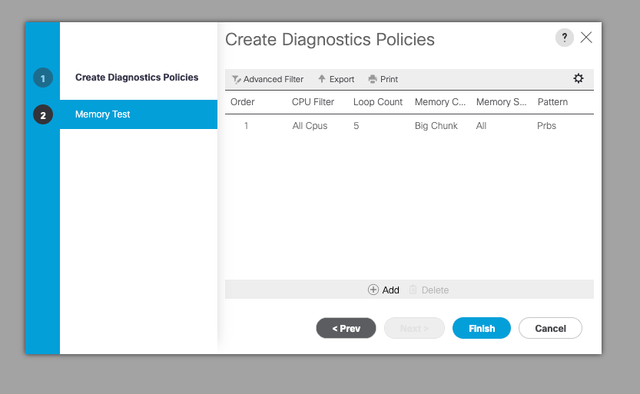

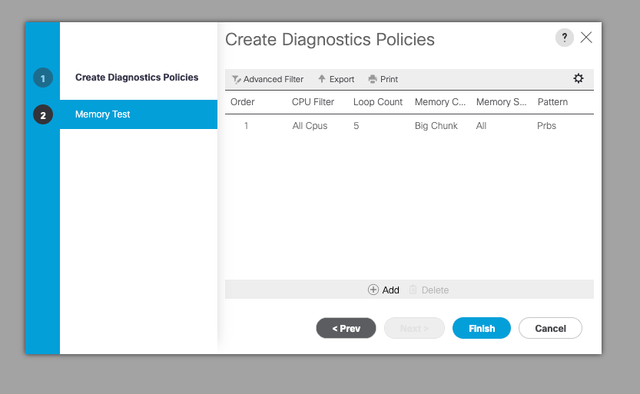

When all fields are entered, click OK and then Finish.

8 Finish Memory Test


After creating the Diagnostic Policy, assign it to a blade server, an integrated rack server, or all servers.

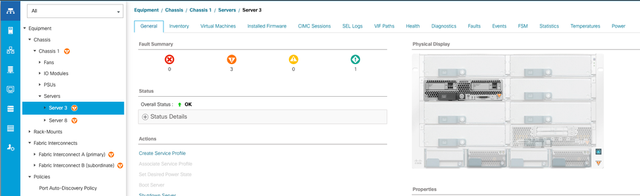

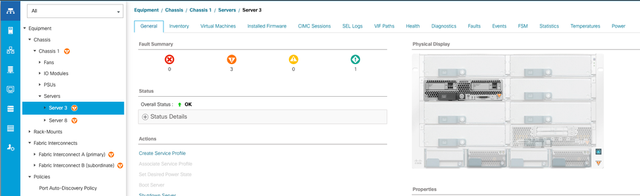

To assign the policy to a specific server, navigate to the desired server by accessing Equipment and then Chassis.

9 Status


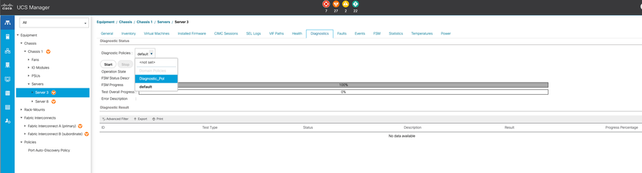

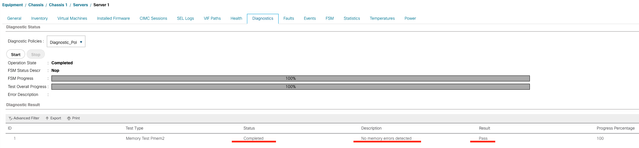

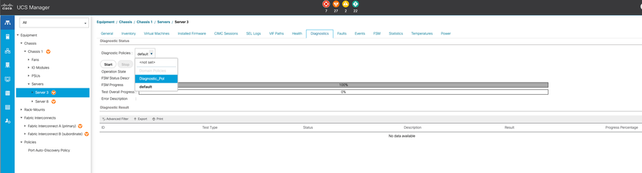

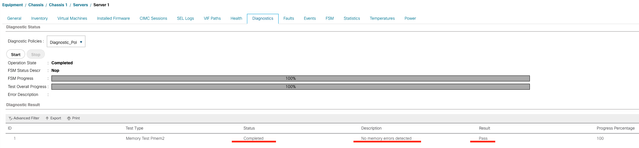

At the top of the screen, open the Diagnostics tab.

Go to Diagnostic Policies, and select the policy you created from the drop-down menu.

10 Diagnostic


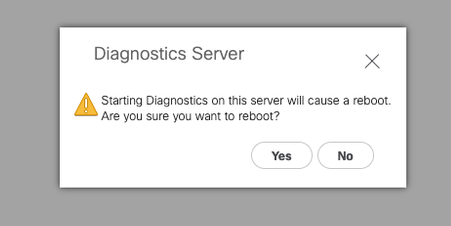

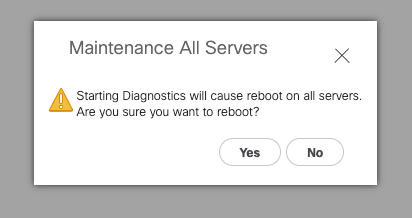

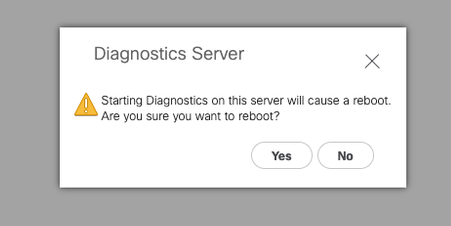

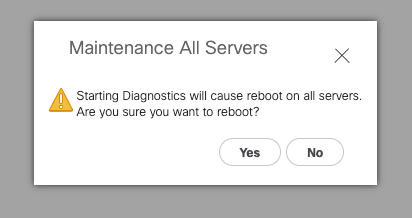

To initiate the diagnostic test, click the Start button. A pop-up alert informs you that this diagnostic causes a server reboot.

Caution: This activity is highly intrusive and must be performed during a maintenance window because it reboots the server.

If ready, press Yes to continue, or No to cancel.

11 Reboot Alert


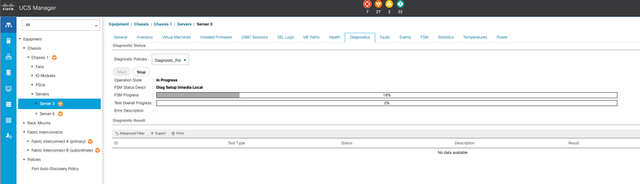

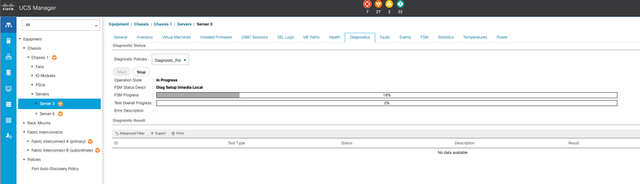

Under the Start and Stop buttons, a progress bar shows the current task description and overall progress.

To stop the diagnostic at any time, click Stop.

12 Progress bar


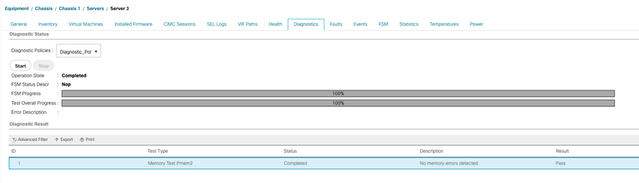

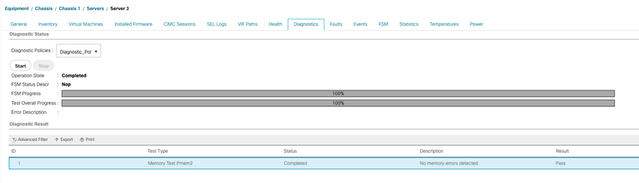

When the diagnostic is complete, the Diagnostic Result is displayed.

In this test, no memory issues were found. If the result returns Fail, generate the logs for the server and contact TAC for assistance.

13 Overall Progress


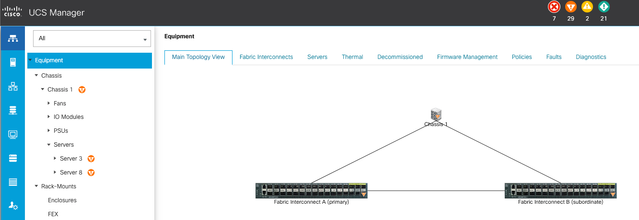

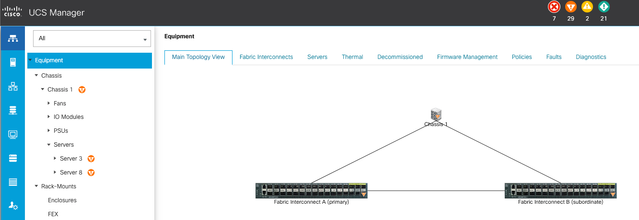

To run diagnostics on all servers, access Equipment and click Diagnostics on the far right.

Caution: This activity is highly intrusive and must be performed during a maintenance window as it reboots all servers.

14 Main Topology


This opens a new screen where you can select to run diagnostics on Blade Servers or Rack Servers.

This process allows for simultaneous execution of multiple diagnostic tests on servers with different configurations.

15 Blade Servers


When you click Start, a pop-up alert informs you that the servers are going to be rebooted.

Caution: This activity is highly intrusive and must be performed during a maintenance window as it reboots all servers.

Choose Yes to proceed with the diagnostic test or No to cancel.

16 Maintenance alert


After you confirm the diagnostic test, the progress for each server is shown in the Operation State column and in the Overall Progress Percentage column, which indicates how much of the test is complete.

17 Server Progress


18 Operation state


Double-click on any server to investigate further. This action opens the diagnostic result for that specific server.

19 Completed


If the Result column reports Fail instead of Pass, open a case with TAC.

Collect the server logs or, if you have Intersight available, collect the serial number of the server.

Include this information in the case to help our engineers start investigating the issue immediately.
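When diagnostics run on many servers, it can help to note the failures in one place before opening the case. The following is a minimal sketch in Python that works on results you have transcribed by hand from the Result column; it does not query UCS Manager. The names `DiagResult`, `failed_servers`, and `case_notes`, and the server labels and serial numbers in the usage note, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DiagResult:
    """Hypothetical record of one row copied from the diagnostics screen."""

    server: str  # label of your choice, for example "chassis-1/blade-2"
    serial: str  # server serial number
    result: str  # "Pass" or "Fail", as shown in the Result column


def failed_servers(results):
    """Return only the entries whose result is Fail (case-insensitive)."""
    return [r for r in results if r.result.strip().lower() == "fail"]


def case_notes(results):
    """Build one plain-text line per failed server to paste into a TAC case."""
    return [
        f"{r.server} (serial {r.serial}): memory diagnostic result Fail"
        for r in failed_servers(results)
    ]
```

For example, passing two rows where only `chassis-1/blade-2` reports Fail yields a single note line for that server, which can accompany the server logs in the case.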
